(with apologies to Stephen Colbert on the title of this post)

Now that another Christmas and Boxing Day are behind us, I’ve compiled a series of metrics that I believe capture much of the “flavor” in the multi-dimensional dataset describing the quality assurance and potency testing conducted by the Labs through August of this year.

This sampling represents more than 25,000 potency tests for flower alone. One might expect high variability across flower lots, but one does not necessarily see that (depending on how one cuts the data). There is a good deal of interesting stuff when one looks behind the averages being reported by the 14 labs (2 have closed since Sept – that I know of). Terminal results for the 2 labs no longer servicing the Labbiness needs of the industry are included in the report card. I am planning to look more closely at the pattern of results being reported by the labs over time and any coincident changes in lab “market share” that emerge. (Too little time … gotta be prepared for the Summit. gonna be in front of a crowd … WTF have I done agreeing to be on a Panel?).

The attached represents a lab-level summary covering the period from (primarily) June-Aug, 2015.

Each family of quality assurance test results was reduced to a handful of metrics that describe different aspects of the variability (and occasional lack thereof) between the labs regarding what they have reported as results. I have focused on flower in the postings in this series. In part, that is because some tests (moisture) focus on flower. It is also due to the fact that flower tends to represent the lion’s share of testing (80+%) for most tests. When I report on RESIDUAL SOLVENTS, the focus is primarily on extracts (for inhalation).

For Potency (a special class of QA testing if ever there was one), I report only on flower. I have, however, repeated much of this work across ALL relevant inventory types. Flower has the advantage of removing most “processing” variability from the ultimate potency results. I’m sure that trimming, drying, and curing variability has some impact on reported results. I am also betting that in the “real world” the primary variability will be driven by the FARMER farming in her special way and/or the GENETICS growing in HER special way. When one looks at the results cut by lab, it almost seems as if we are dealing with multiple realities.

Bugs, germs and poop are, perhaps, the most intrinsically disgusting part of the whole quality assurance testing process. They are also the coolest, by far. Little things trying to reproduce ON CANNABIS in the semi-ideal conditions of a petri dish. What isn’t more uplifting than that? — except, perhaps, having a scanning electron microscope with which to watch? As you may guess, I have voyeuristic tendencies.

I’ve heard comments from some “in the know” that it takes time for many of the bugs and germs to reproduce. The ones on poop are allegedly particularly vigorous (dirty little bugs). I’ve put on my analytic “to do” list an effort to assess the average duration that each lab holds a sample before reporting their biologic results. Variability here would (for many reasons) be disturbing.

I will share a more in-depth assessment of the lab results (including more current data) next month when I begin posting on (and through) my new “Straight Line Analytics” website. I will be sharing this information with the LCB and/or DOH (and/or the institute of higher education which has put their reputation on the line by accrediting these Labs).

I’m not sure if change is needed. I do know, however, that I do not “trust” our regulated Cannabis as much now as I did before putting the effort into examining the data summarized by this Report Card. It’s nothing personal, it’s just the data speaking to me. Data that are, supposedly, objective.

Below, you will find a series of tables detailing different sections of the Report card. I will make an EXCEL version of the full “wide” worksheet available later this week when I launch the new Straight Line Analytics website, where I will require a valid e-mail address in order to mail it back to you (my regrets to the personal privacy advocates out there – I know at least one 502Cannabis lurker that will be put off by this … too bad, RR will probably just figure out that I’m not screening and will create a throw-away e-mail for a one-off distribution of the report card to add to his alleged mondo data model).

Making the data available in a more convenient EXCEL form will enable those of you that might wish to do so to putz/sort/re-color/re-cluster/or otherwise play with this information. It will also enable me to contact you when I believe appropriate (or until you tell me to stop … I’m new to this and not quite sure about etiquette; perhaps I’ll even supply a safety word for each respondent providing an e-mail address).

Below, I provide a definition of each metric, and an indication of what BIG and SMALL means for that metric. (Note – BIG is not intrinsically better than SMALL {in spite of what approximately ½ of my acquaintances over the years have generally told me}. Occasionally it may be interpreted as BETTER vs WORSE. Occasionally, it means MORE vs LESS.)

I have called a few things out in the table with colors to show interesting within-lab and/or between-lab patterns. These are things that I (ME) found interesting. It does not mean that they have meaning or practical utility. In general, I have followed the convention of coloring “Friendly” results GREEN and “kinda-friendly” results YELLOW.

Aside from that, I will not be commenting on these data publicly for a while. I am hoping that the Community of Jimmy-Readers will do so.

Please share your opinions regarding these data and the metrics I created to describe the testing results (if any are trash, please tell me why). If I’ve missed the opportunity to use a really-cool-metric that came to you in a flash of brilliance, please share. If I agree with your brilliance, I’ll make an effort to include it in the “Final” Report Card.

The “fair weights and measures” aspect of having PRAGUE Lab Testing Results is crucial to the Health and Growth of this industry. When I first posted regarding my concerns about Lab reporting after looking at the original Cannabis Transparency Project data in May, I gave thanks to Chuck of Dime-Bag Scales (now Green Thumb Industries) for taking the time to teach me. I’d also like to now thank the many of you that have taken the time to share your thoughts and insights regarding my work describing the Lab results.

To spend one moment on my soapbox, good quality assurance testing results allow us to know what is in that child-proof package (less the terpenes I’d like to see tested for — -NOT MANDATED, just done). This is crucial to the health of Washington’s State-Legal Cannabis market and is the only tangible advantage that regulated and over-taxed 65% mondo bud will have over the more established channels of distribution known as the Dark Side. I’ve noticed that some of the Farmers and/or Processors are labelling their products with terpene levels. Good for them …. I intend to “look for the Terpene Label” when shopping in 2016. I will repeat … I do not think that terpene labelling should be mandated. However, when it is done, I believe that it should be rewarded. It will be – at least with my dollars.

My prayer for the New Year?

I hope that I never become convinced that the aforementioned mondo bud has been coaxed by superfarmerfragilisticexpialadocious to produce OG Sprinkles. That would be almost too seductive to me …. I am, after all, a lowly consumer —- a vulnerable consumer. PLEASE don’t let me be so convinced.

Here is the long-awaited report card, followed by brief definitions of the metrics I included therein. Your feedback is not only appreciated and desired, it is crucial. I would love all interested members of the community (labs, farmers, retailers, consumers, regulators, legislators, and Santa Claus, too) to comment on this report card if anything in it evokes a strong opinion or question.

The 14 labs are called A through N, as they were in my POTENCY posting. I will use this same consistent Blinding Code going forward, until such time as full transparency is in order. It is likely that the LCB and DOH will enjoy an earlier unblinding than most (and likely that our Farmers will get a heads-up, as well …. GO WASHINGTON FARMERS!).

I’m keeping the report card blinded, as I would prefer everyone’s opinions and questions to be pointed at LAB X, rather than “My Favorite Fair and True Cannabis Testing Laboratory”. This makes it less personal and makes it more objective. It makes any feedback we get from the LABS and/or the FARMERS and/or the RETAILERS that use Lab services much more valuable (in my mind, at least … but I am a bit of an objectivity freak).

I have gone to some lengths to ensure that my A-to-N coding and the metrics in the report card do not provide a “tell” regarding which lab is which. That was not always easy to do, given the disproportionate share of the testing market enjoyed by a handful of the Labs. Your comments and discussion are crucial. Let me know what you find odd or otherwise interesting in these data. Let me know which Lab or Labs seem “off” to you and why? Let me know if my metrics suck. Let me know if I am wasting everyone’s time.

If you should feel that a thank-you is appropriate, I’d like that as well.

On to the Report Card –

Lab Report Card(s)

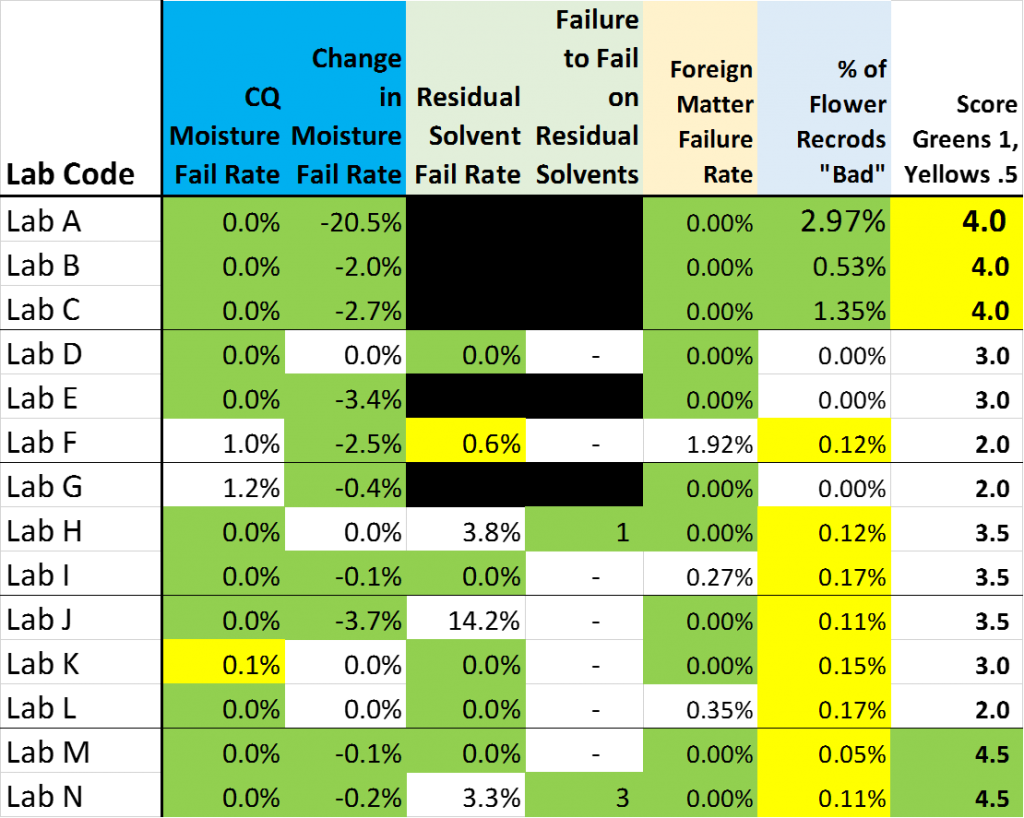

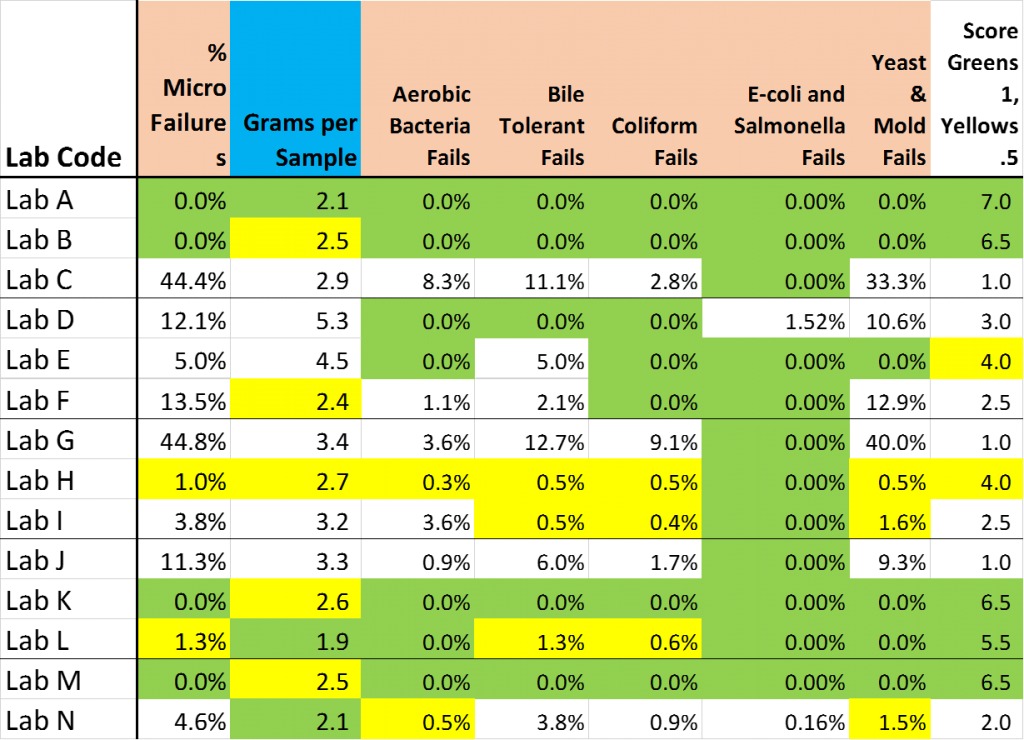

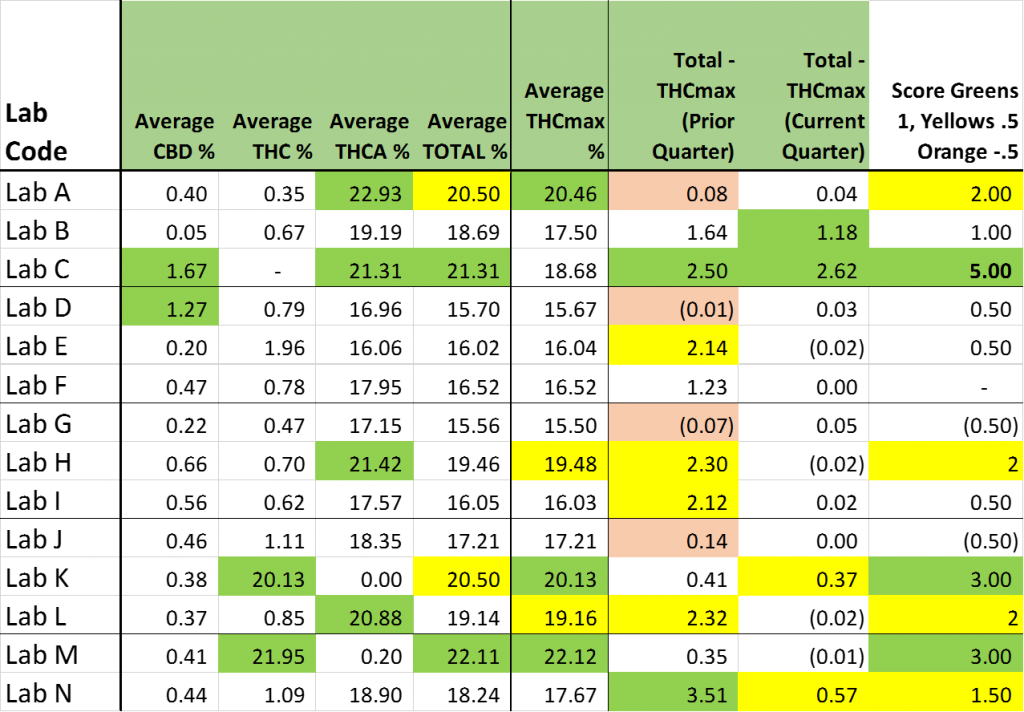

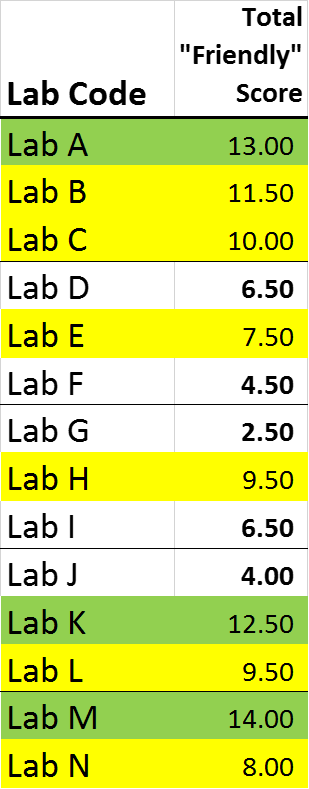

The only “interpretive” sauce that I have added to the following three tables is a convention of shading results that I would classify as “favorable to the testee” financially as GREEN. Results that are somewhat favorable to the testee, but are less clearly outliers than those I shaded GREEN, have been shaded YELLOW.

More greens suggest that the results reported by the Lab are of the type that would receive a warm reception from the Farmer and/or Processor associated with the product being tested.

A preponderance of Greens may be fate … it may be great Customer Service ….. or it may be something else (or nothing at all). The one thing I can unequivocally say for sure is that where there is more green, there is more green.

In each table, I have added a final column which scores each lab a “1” for a green cell, a ½ for a yellow cell and (on the potency table) a MINUS ½ for having an orange cell (for labs that were using THCmax in Q4 … before they were explicitly told to).

I compile a total of these 3 scores in the final Lab Scoring Table … and then shade the “Total” scores Green and Yellow.
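For readers who want to re-score the tables themselves, here is a minimal Python sketch of the scoring convention described above. The point values (+1, +½, -½) come from this post; the color labels, the function names, and the example row for "LAB X" are made up purely for illustration and are not taken from the Report Card.

```python
# Points per shaded cell, as described in the post.
# Orange only appears on the potency table.
CELL_POINTS = {"green": 1.0, "yellow": 0.5, "orange": -0.5}


def table_score(cell_colors):
    """Score one lab's row in one table; unshaded cells count as zero."""
    return sum(CELL_POINTS.get(color, 0.0) for color in cell_colors)


def total_friendly_score(rows_by_table):
    """Sum a lab's per-table scores into its total "Friendly" score."""
    return sum(table_score(cells) for cells in rows_by_table)


# Hypothetical shading for a made-up LAB X (not real Report Card data).
lab_x = [
    ["green", "none", "yellow", "none", "none", "green"],           # Table 1
    ["green", "none", "green", "yellow", "none", "none", "none"],   # Table 2
    ["none", "none", "none", "yellow", "none", "orange", "green"],  # Table 3
]

print([table_score(row) for row in lab_x])  # [2.5, 2.5, 1.0]
print(total_friendly_score(lab_x))          # 6.0
```

A higher total simply means more cells were shaded as "friendly" to the testee; it says nothing by itself about why.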

All this green/yellow stuff is just to put some structure on the data. It’s not written in stone – it’s just the data talking to me.

I look forward to hearing what these data say to you.

Metric Definitions

Table 1:

CQ Moisture Fail Rate

- % of moisture samples failing

Change in Moisture Fail Rate

- Change in Moisture Failure rate between first 4 quarters and 5th quarter (-ve % is a decline in failures)

Residual Solvent Fail Rate

- % of Residual solvent samples failing

Failure to Fail on Residual Solvents

- The number of samples that SHOULD have failed Residual Solvents but did not

Foreign Matter Fail Rate

- % of samples failing for Foreign matter

% of Flower Potency Records “Bad”

- % of Flower Potency Records that had at least one % value over 100 or below zero

Score: Greens +1, Yellows +0.5

- Total score of each lab for Table 1 (Higher score is “Friendlier” to the testee)
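
As an illustration of the "Bad" potency record definition above, here is a minimal Python sketch. The record layout (one dict of percentage values per record), the field names, and the sample values are assumptions made for the example; the actual traceability data may be organized differently.

```python
def pct_bad_potency_records(records):
    """Percent of records with at least one % value over 100 or below zero."""
    if not records:
        return 0.0
    bad = sum(
        1
        for rec in records
        if any(value > 100 or value < 0 for value in rec.values())
    )
    return 100.0 * bad / len(records)


# Hypothetical flower potency records (all values are percentages).
sample = [
    {"THC": 1.2, "THCA": 20.5, "CBD": 0.1, "TOTAL": 21.8},
    {"THC": 0.9, "THCA": 118.0, "CBD": 0.0, "TOTAL": 118.9},  # over 100
    {"THC": -0.3, "THCA": 17.0, "CBD": 0.2, "TOTAL": 16.9},   # below zero
    {"THC": 2.0, "THCA": 15.0, "CBD": 0.5, "TOTAL": 17.5},
]

print(pct_bad_potency_records(sample))  # 50.0
```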

Table 2:

% Micro Failures

- % of samples failing 1 or more “microbial screening tests”

Grams per Sample

- Average size (in grams) of samples submitted for microbial screening

Aerobic Bacteria Fails

- % of samples failing for aerobic bacteria

Bile Tolerant Fails

- % of samples failing for bile tolerant thingies

Coliform Fails

- % of samples failing for “poop”-associated bugs

E-coli and Salmonella Fails

- % of samples failing for other “poop”-associated and GI-associated bugs that can get you sick

Yeast & Mold Fails

- % of samples failing for molds, mildews (presumably) and/or yeasts

Score: Greens +1, Yellows +0.5

- Total score of each lab for Table 2 (Higher score is “Friendlier” to the testee)

Table 3:

Average CBD%

- Average CBD% reported in tested flower samples

Average THC%

- Average THC% reported in tested flower samples

Average THCA%

- Average THCA% reported in tested flower samples

Average TOTAL %

- Average TOTAL Cannabinoids reported

Average THCmax %

- Average (Calculated) THCmax % (where THCmax = THC + .877*THCA)

Difference TOTAL – THCmax (prior quarter)

- Difference between TOTAL reported in Q4 (previous quarter) and THCmax (TOTAL – THCmax)

Difference TOTAL – THCmax (current quarter)

- Difference between TOTAL reported in Q5 (current quarter) and THCmax (TOTAL – THCmax)

Score: Greens +1, Yellows +0.5, Oranges -0.5

- Total score of each lab for Table 3 (Higher score is “Friendlier” to the testee)
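
The THCmax calculation and the TOTAL – THCmax difference defined above can be sketched in a few lines of Python. The 0.877 factor is the one given in the definition; the function names and the example values are hypothetical.

```python
THCA_FACTOR = 0.877  # from the definition above: THCmax = THC + .877*THCA


def thc_max(thc_pct, thca_pct):
    """Calculated THCmax % from reported THC % and THCA %."""
    return thc_pct + THCA_FACTOR * thca_pct


def total_minus_thcmax(total_pct, thc_pct, thca_pct):
    """How far the reported TOTAL sits above (or below) THCmax."""
    return total_pct - thc_max(thc_pct, thca_pct)


# Hypothetical sample: 1.0% THC, 20.0% THCA, reported TOTAL of 21.5%.
print(round(thc_max(1.0, 20.0), 2))                   # 18.54
print(round(total_minus_thcmax(21.5, 1.0, 20.0), 2))  # 2.96
```

A difference near zero would suggest the lab is reporting THCmax in the TOTAL field; a clearly positive difference means TOTAL is running above THCmax.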

Table 4:

Total “Friendly” Score

- Total score for each lab totaled up across Tables 1-3 (Higher score is “Friendlier” to the testee)

What is very concerning is to have 4 labs essentially passing everyone and 2 that look to fail half for micros; though this is possible I find it very improbable.

Now to be honest the thc numbers all look relatively similar and I find that very likely. On a personal note I’ve tested samples at 3 different labs, mapped out the results and compared. I found that in the long run all 3 labs report thc on a strain with 5% almost every time, sometimes higher sometimes lower but all 3 labs are in the same range.

Our company has personally decided to use one lab because of the turn around time and customer service. It sucks nothing more than to have a product ready to go, a retailer store waiting to buy but the lab making you wait 7+ days to get results that take 48 hours. We have had about a year though to see results compare and make a decision and learn what to expect from each lab.

Personally I cannot wait until you show the names, and though by inference I don’t think we’re using them, I would really like to see if we’ve used or are using labs A, K or M, so we can make a switch as their results seem highly improbable!

Mark

Wicked Weed

Thank you Jim! I’ve been looking forward to this report card & continue to anticipate your further work. More than happy to trade my e-mail so I can look forward to those as well. This seems to correspond to my totally anecdotal observations acting as a consumer ~as far as what I’m seeing on QA labels. You already know I’m totally w/ you on terpenes {& more cannabinoids too} a # of tests performed per lab would be helpful?

Thank-you, Travis.

I agree re: the # of tests per lab being useful.

I have held back from sharing that, as it is a clear “tell” as to which lab is which.

Through Aug., for example, the top 5 volume labs represented over 80% of the testing volume done for flower, while the bottom 5 labs represented less than 3.4% of the testing.

One of the more interesting patterns is the CHANGE in lab market share over time as a function of the “over”-reporting of TOTAL Cannabinoids.

Cause or Effect? Chicken or Egg? Enchilada or Burrito? I don’t know.

I do, however, see disturbing changes in what the labs are reporting across time.

Good Jimmy would like to believe that this is just a function of the labs getting their processes and procedures in shape.

… I just thought that was something more likely to be done prior to having The Center for Laboratory Sciences on the Campus of the Columbia Basin College down in Pasco certifying the labs than to be done over the first 15 months of operations.

a renowned breeder has commented that an entire branch should be submitted for testing. i would argue a step further that as a means of getting labs on the same page & disqualifying the intellectually dishonest; we should be seeing some mandatory equalization done where that branch is homogenized and then divided in to equivalent samples for a comparison of results which should be equivalent. Slip them in w/ the regular flow of samples.

This is a critical aspect of the industry for everyone from the biggest producer to the least consumer. the giants deserve fairness & the little guy deserves accurate QA. The intellectually dishonest players deserve to be eliminated from the game. getting operational underway is just the way it’s had to happen in order to implement legalization. granted certain of the labs have been operating in support of the previous medical system ~& there is fallout where individuals are questioning pre-502 lab work. It makes for an opportunity to identify the bad actors. It’s in the #s & #s don’t lie

Interesting thought, Travis.

I’d like to be clear that I am making no attributions of dishonesty, whether intellectual or otherwise. I’m just describing some non-random patterns and groupings in the data that do not seem to make sense given what tiny amount I know about logic and testing proficiency.

With that said and done, I just finished a fascinating animated bubble chart that shows, across the first 64 weeks during which flower lots were being tested, a very interesting pattern (if you’ve seen the TED talk by the Nordic researcher that shows nation-level annual changes in mortality rates and baby-production rates over the years, you’ll get what I’m doing)

Picture a Y-axis that shows the “inflation” of TOTAL Cannabinoid reporting (vs. THCmax) and an X-axis that shows the value of TOTAL Cannabinoids being reported.

Bubbles are sized by the market-share of flower testing that each lab held during each of the weeks.

Bubbles are colored by Lab.
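
For anyone wanting to rebuild something similar, here is a rough Python sketch of how the per-week bubble values just described could be derived. It is only a guess at the mechanics, not the process actually used for the animation: the input tuple layout, the function name, the use of simple averages, and the sample rows are all assumptions.

```python
from collections import defaultdict

THCA_FACTOR = 0.877  # THCmax = THC + .877*THCA


def weekly_bubbles(tests):
    """Build one bubble per (week, lab).

    tests: iterable of (week, lab, total_pct, thc_pct, thca_pct) tuples.
    Returns {(week, lab): {"x_total", "y_inflation", "size_share"}}.
    """
    rows_by_week_lab = defaultdict(list)
    tests_per_week = defaultdict(int)
    for week, lab, total, thc, thca in tests:
        inflation = total - (thc + THCA_FACTOR * thca)  # TOTAL - THCmax
        rows_by_week_lab[(week, lab)].append((total, inflation))
        tests_per_week[week] += 1

    bubbles = {}
    for (week, lab), rows in rows_by_week_lab.items():
        n = len(rows)
        bubbles[(week, lab)] = {
            "x_total": sum(total for total, _ in rows) / n,
            "y_inflation": sum(infl for _, infl in rows) / n,
            "size_share": n / tests_per_week[week],  # share of that week's tests
        }
    return bubbles


# Hypothetical rows: lab A runs 3 of the 4 tests in week 1.
demo = [
    (1, "A", 22.0, 1.0, 20.0),
    (1, "A", 23.0, 1.0, 20.0),
    (1, "A", 21.0, 1.0, 20.0),
    (1, "B", 18.54, 1.0, 20.0),
]
for key, bubble in sorted(weekly_bubbles(demo).items()):
    print(key, bubble["size_share"], round(bubble["y_inflation"], 2))
```

Each week's bubbles can then be fed to whatever plotting tool is used for the animation, with one frame per week.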

I fired it up, and let it run … the resulting movie file is almost 500MB in size … and it is a thing of wonder.

There are some very interesting time-linked things that happen as the movie runs (as the plot begins to unfurl).

Such as when the LCB directed last spring that the Labs should begin reporting THCmax in the TOTAL Cannabinoid Field (hint … not all labs followed their direction, and one seemed to think that keeping things the old way for 7-8 weeks was an OK thing to do).

The REALLY interesting thing is what happens to market share of testing as a function of prior “inflationary” reporting by any given lab … and by overall HIGH reporting of Cannabinoid Totals, period.

The patterns seem to fit (with surprising consistency) a story line of “chasing market share by reporting inappropriately large “potency” numbers”. The interesting thing is that there are two very clear groupings of labs that emerge over time. No attribution of cause & effect on my part, but it is one heckuva “correlation”.

It’s easy to make up stories while looking at this thing (I’ve had it on a loop near the top of my big monitor for most of the afternoon). One of the odd things is that some labs that were, previously, reporting THCmax, began reporting in an inflationary way after the market share correlation with high/inflated Cannabinoid levels began to emerge. This seems to imply that they used to think THCmax was the way to go, and then they started “inflating” results relative to that standard, and then they went right back to THCmax when told to do so.

The only thing that I see in the animation that seems to pre-date this change (from proper reporting to inflationary reporting) was the increasing concentration of market share being seen in the labs that I refer to as having inflated their results.

Anyway, I noticed a particular lab that had not popped (much) on the “Friendliness Scale”. It sure pops on this visualization (and not, I believe, in a good way … unless you want to be selling SUPER POTENT BUD).

Another thing … I’ve been bothered by the question of WTF the labs that were not reporting THCmax were reporting, so I did some fairly brute force work and have figured out (I think) what each lab (except one) was measuring. Some labs changed what they were reporting over time (before the LCB gave direction re: THCmax). When they changed, unfortunately, it was often from non-inflationary to inflationary reporting.

The one I could not figure out turns out to be the one that popped in the animated bubble chart.

I may work up a brief questionnaire and distribute to the labs to address some of the blanks in my knowledge and to address some of the questions that have emerged as I’ve gotten to know these data.

This work is fun, but it is increasingly disturbing. I just wish it paid.

Time to write a Hawku (or 2) for the Seattle Times.

Yes, A, K, and M stand out in the charts.

I think a cost chart would also be useful. What are these labs charging for their results?

Thanks for compiling and sharing, Jim. With all the build up, you may need to do a grand unveiling party when you finally reveal the labs! (I’ll bring the party favors…;>)

Nummy … I LIKE Washington Bud Company favors (even though they do not yet enjoy the transparency of State-sanctioned and certified lab testing results).

I agree that cost-per-test is an important component of the overall picture.

If a test costs less at LAB X, and LAB X requires less product in order to run its tests, and LAB X returns results in just under 45 minutes, and LAB X’s results almost never fail the QA pass/fail tests, and LAB X’s results almost always hit 20+% THC …. what is not to like about LAB X? (aside from the fact that LAB X has not reached out to me with offers of hush money, yet).

I will say that there is one lab out there whose “THC” results (TOTAL, actually) look very different (distributionally) than any of the other labs. I pretty much know that if I buy product tested by that lab, I am likely to be buying a 20+% product. I also suspect that I don’t have a frigging clue what the “THC” levels of that product actually are — at least not by reading the label.

Then again, perhaps all of the very best Farmers — and all of the Farmers with the very best genetics — just happen to be using that lab. Stranger things have happened in the universe (President Bush’s idiot son being elected President might be one of them).

Hi Jim-

Once again thank you for putting in all of this time crunching the numbers. I have a few comments:

1. From Mark’s comments earlier: “Our company has personally decided to use one lab because of the turn around time and customer service. It sucks nothing more than to have a product ready to go, a retailer store waiting to buy but the lab making you wait 7+ days to get results that take 48 hours. We have had about a year though to see results compare and make a decision and learn what to expect from each lab.”

As a lab owner, I’d like to make sure we are talking about the real turn around time. Is it 48 hours from the time the sample was collected or 48 hours from when the sample hit the lab and was checked in as received in the traceability system? Either way there is not a platform that can reliably quantify microbial counts in 48 hours. The soonest is 72 hours, unless of course someone eyeballs it and calls it good. As for longer turn times, sometimes things don’t look right in the data and things get repeated. If accuracy matters, then real turn times will fluctuate. You may have just “found out” your lab if the turn times are truly 48 hours. Perhaps you want to ask your lab how they turn microbial data so quickly. They aren’t using qPCR, I guarantee it, it’s way too expensive, but likely is the only platform in which you would do an enrichment for 12-18 hours. Most everyone (if they are actually conducting the tests…. <-- note I keep bringing this up) is using petrifilms or another plating format for microbial testing. These formats take 72 hours for a complete incubation (if you follow the instructions). How is this lab turning results in the 48 hours quoted from above? I wonder if Columbia Basin College has reviewed those SOPs.

2. Terpene testing. I was in a meeting with LCB and DoH along with a majority of the labs and it was declared that terpene testing isn't required as it doesn't pose a health risk. I disagree. Take beta Myrcene for example. It is a prevalent terpene and is associated with "couch lock" if the compound is present in concentrations over 0.5%. This information along with the generally accepted medical benefits of these compounds is fairly well documented. Anyway, to me, high terpenes tell me that the flower material is great. High terpenes, again to me, tell me what an analogous Robert Parker score may be for all you winos out there. Ultimately the terpene testing conundrum comes down to economics. Is the marketplace going to bear another cost that jacks up testing by a few more bucks? The price points in the industry for real testing (if people are actually doing the tests) are way off to begin with. I'll comment more on the economics in a lower paragraph.

3. Microbial Data. Realistically most of the flower product being produced should fail according to 502 rules. I personally don't agree with the rules, but the State's monograph dictates the testing and ergo should dictate the tests (if people are actually doing the tests) that are being conducted. It is an ugly fact, but it is the reality. How about this, do you think labs like failing people and subsequently losing their customers? Failing a client is like telling someone that there was a terrible accident and no one survived. The folks who routinely don't fail a soul should be tarred and feathered and run out of the industry for being the charlatans that they are. I can't wait for the unblinded data.

4. Lab testing economics. A simple visit to each lab's web page or a phone call will likely get you pricing info in fairly short order. A bunch of the high volume labs have price points at or around $60 for a complete 502 flower test. (I struggle to hold back the laughter as I type this.) Is no one really questioning these prices? Think about what goes into a 502 test. Visual inspection, moisture analysis, potencies, and 6 microbial tests. The data need to be reviewed, a report generated and numbers entered into the traceability system. All this in 48 hours at $60? Anyone else see the BS (or smell it)? If you are missing the BS aroma, please review Jim's data sets. Perhaps some labs have staff working for free or on a work release program. Moreover, are your lab results certified? Has someone meeting the LCB's criteria for a qualified lab worker actually reviewed the data and SIGNED out the report like a real lab would do? These things cost money, if people are actually doing the tests. What about when pesticides, heavy metals and mycotoxin testing is mandated? Perhaps then a MMJ or 502 complete test will run $62.

Here is the truth. Tax revenue appears to be the goal, not public safety. Clearly Jim's data analysis points to unfair methods of competition and unfair or deceptive acts or practices in the conduct of any trade or commerce, which are declared unlawful by the State. (More on this topic to come)

What ever happened to just telling the truth? Jim's metric for "friendliness" is outstanding. That is the real truth. If you are a grower-friendly lab, you don't fail clients and you yield high potencies. It's pretty simple, but here's the real irony…..High numbers and zero fail rates all for around $60? Shouldn't there be an upcharge for the BS? The whole testing industry is laughable. My favorite quote is from the lab running around declaring "Never Fail Microbial again". LAUGHABLE!

Thanks again Jim for putting forth all of this effort to prove what many have already suspected. I was explaining the state of the lab testing in Washington to a friend. Their question back to me was "Are the labs lying or plain incompetent?"

I’m a scientist by no means, but I’m intelligent enough to know when things aren’t right and I’m being lied to. I’ve talked to every lab that we have used about procedures and I’m confident that labs can test in 48 hours ethically. Our company has had 2 lots fail microbials, and knew this within 48 hours of testing; now if this lab was only selling passing scores, we would not have failed any let alone 2. This tells me that either the lab is honest in their testing and gives an accurate results or the lab is just making up results and arbitrarily failing some companies; in my humble opinion the first option makes more sense.

As to your comment on labs selling $60 services and guaranteed passing results, I’ve yet to see that from any lab. Though I do agree some labs are probably gaming the system, I haven’t seen it done so blatantly. I honestly haven’t even seen a $60 lab test either; the ranges I’ve seen are $75-90.

Though I think terpenes contribute to the entourage effect and I think most people agree, I think requiring terpene testing is just putting more pressure on the producers to produce a product that is cheap for everyone except the producer; this is not good for our industry. I also think, when will it end? Are we going to have to list everything? I mean, there is some evidence showing thcv suppresses appetite; that can’t be good for cancer patients looking to stimulate appetite, so we definitely need to show that. And when future evidence shows cbdg inhibits muscle contractions, we must list this for ptsd patients so they don’t get too relaxed. I know I’m being facetious, but that’s the point: we can all make arguments as to what should be included so the patients are safe, but if the patient has no medicine to buy because producers go out of business because they can’t keep up with the cost, what’s the point? I’d much rather buy some legal product with limited testing that shows me it’s safe on a microbial level than have to go back to the old system of buying product with 0 testing and not an iota of evidence to show it’s been through some semblance of a quality control check outside of hearsay!

I think we can all agree that there are some flaws in the testing arena; hopefully we can all make it more fair and honest without forcing the producers to take on more cost.

I’m going to close by saying that customer service seems to be something that some labs seem to be missing. You can be the fanciest, highest end, most scientific or whatever you want to say to try and prove how you’re better than lab X; but if you can’t answer your phone, take samples on the weekend, send results on the weekend, get results in less than 3 days, take the time to discuss passing and failing results, provide multiple payment options to include net 30 or the many other things that producers care about when choosing labs, then you will lose business and lab X is going to get it. Producers don’t have to use your lab just because you say it’s better, we have options.

In regards to the 48 hr test for microbials. A number of FDA BAM / AOAC approved methods exist that require only 48 hrs incubation. To say otherwise shows a lack of knowledge of rapid methods. 48 hour methods for fungi and Salmonella have been a standard in the food and pharmaceutical industry for over a decade. Aerobic plate count, coliforms, E.Coli and gram negative bile tolerant bacteria, all can be tested with a 24 hr incubation. See FDA BAM, USP, or AOAC for references.

In regards to testing costs, I worked for the largest FDA contracted lab in the U.S. and the price points for testing similar to that required for I-502 were in the $50-$100 ballpark. Any lab that says that testing cannot be done for those prices is either inefficient, or lacks industry experience. For labs that also serve other industries, it is also possible to discount cannabis testing in order to gain a foothold in the market, while relying on income from other services to the food or agricultural industry as an alternative revenue stream.

In regards to certifying results, I personally review and sign every single result that comes through the lab. We operate in full ISO17025 compliance, which requires not only LCB audits, but also audits from 3rd party auditors that are not part of the cannabis industry. My partner and I both work 80 plus hours a week to serve our customers the best we can. Rather than insult other labs you might find your time better spent invested in improving your business practices, and the services you can offer your clients.

Thank-you Dustin (and Jason).

I did not see anything in Jason’s comment that I would characterize as “insulting other labs”.

I do, however, see some aggressive posturing in your posting.

I am familiar with what economies of scale can bring to a business.

I am also intimately familiar with variability.

However, I’m not in the business of censorship.

Glad to see we are beginning to have a pithy discussion emerge regarding my Lab work.

Happy New Year

Jim

What I found potentially insulting in Jason’s message is the implication that labs utilizing microbial methods that require 48 hr incubation are not using validated or approved methods, thus are in some way deceiving the client. I find the spread of misinformation to be extremely insulting, and a disservice to everyone in this industry.

One thing not mentioned in the above analysis is the potential for sampling bias. What are the sample sizes for each lab, and how much of each lab’s business comes from each producer or processor? I would guess that having the best and highest-volume processor testing exclusively at your lab would significantly change the rating of that lab based on your methodology, but there is no mention of that possibility. Interesting data, but it needs refinement before publicly calling out labs as more or less “friendly”.

You are correct, Anders.

Thank-you for pointing the sample size issue out.

I’d say the data are less useful for about 5 of the labs because their sample sizes are much smaller than for the others. In many cases, however, even these small samples are not tiny. I’d say (editorially) that although the signal is weaker for some of the labs, there is still a signal (and it is talking to me).

I have INTENTIONALLY not included sample size in the metrics and work that I have reported, as that would pretty much break my lab blinding effort. I DID include it in a trivial way by blacking out the cells for labs that did NO residual solvent testing. For those cells, n was zero. I only did this because it’s not just the smallest-volume labs that are not playing in the residual solvent testing space.

I have, however, been including sample size in the shitloads of analyses that I have done on these data (most of which no one but my wife, David Busby, and a few of the guys that I occasionally hang with down at the Triplehorn brewpub in Woodinville have seen). While we are at it, check out Triplehorn if you are in town. They make one of the best IPAs in the State (and I even get growlers of their Blonde and Red ales upon occasion) — try their “sampler” if you have not been before.

When one takes sample size into account, some even more interesting things come out (such as the degree of “confidence” that I have in the degree of “Friendliness” that seems to reside in the datasets being provided by each lab).
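As a rough illustration of how sample size feeds into that kind of "confidence", here is a minimal sketch using a Wilson score interval for a proportion such as a failure rate. The counts are made up for illustration and are not taken from the lab data, and the Wilson interval is just one reasonable choice, not necessarily the method used in the analyses described here.

```python
import math

def wilson_interval(failures, n, z=1.96):
    """Approximate 95% Wilson score interval for a proportion (z=1.96)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = failures / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width

# Hypothetical labs: same observed 10% failure rate, very different volumes.
for failures, n in [(5, 50), (200, 2000)]:
    low, high = wilson_interval(failures, n)
    print(f"n={n}: observed {failures / n:.1%}, interval {low:.3f} to {high:.3f}")
```

The low-volume lab's interval comes out much wider than the high-volume lab's, which is the sense in which the signal is weaker (but not absent) for small samples.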

Given that I have spent almost 6 weeks of my life doing this work, I expect that I will eventually be sharing some of the details and subtleties FOR A FEE. Gotta eat, and gotta get my wife off my back with respect to revenue generation.

Also gotta continue to be able to afford those wonderful Triplehorn growlers … and all of that 65%-plus bud that is allegedly running around the State.

Another potential issue outside of sample size is that many growers have in the past submitted kief samples that were entered into Biotrack as a sublot of a flower sample. In the cases where this happened, it was not a case of a grower trying to pass off kief results for flower, but was due to complications or misunderstandings with Biotrack. The LCB was notified of these issues, but samples often remained in the system as flower. Due to the lack of a straightforward way to submit R&D samples through Biotrack, we have also received other non-flower items that were labelled as flower material in Biotrack. Growers aren’t technically allowed to send non-manifested R&D samples for testing; one workaround that some growers have used is creating sublots the same size as the sample being submitted. This allows for traceability of the sample, but can result in some anomalies in the data.

Thanks for your response Jim.

Dustin- In response to your question, “What I found potentially insulting in Jason’s message is the implication that labs utilizing microbial methods that require 48 hr incubation are not using validated or approved methods, thus are in some way deceiving the client. I find the spread of misinformation to be extremely insulting, and a disservice to everyone in this industry.”

What is your method? If you say proprietary you are … well, you know. Show me how you can “Never fail microbial again” and how you have reported out microbial testing in record times. Where are those SOPs? They should be public record. Just curious. Thanks friend. I’ll start naming disparities if you feel froggy. Are you operating as ISO 17025 certified or compliant? You are not ISO 17025 certified. Is that misleading? Also, Dustin, do you certify and sign out all of the results for failures prior to your ozone treatments of microbial-failed lots? Or do other labs test and fail the product for microbial contamination, and then you “treat” them with ozone and pass them after treatment without using a third-party lab for microbial testing? Where is the objectivity? The LCB has told me that they refuse to allow your lab to use an “LCB approved” tagline for your ozone treatment.

As for the second line of questioning, volumes are directly proportional to THC numbers and microbial passes. I think Dr. Jim can back this up based on his data analyses. If I’m wrong it won’t be the first time.

Once again Jim, thanks for your analysis and forum. The data speak volumes. So at the end of the day……What’s Washington going to do about these data?

Happy New Year to all.

More data to come.

(Thanks again Jim)

“What is your method?”

For Salmonella and E. coli we use PCR. For 48 hr results on yeast and mold we use 3M Petrifilm plates, which are AOAC approved.

http://news.3m.com/press-release/company/3m-petrifilm-rapid-yeast-and-mold-plate-gains-aoac-oma-validation

We also have the bioMérieux TEMPO system, which uses fluorescence and the MPN method. All presumptive positives are confirmed via FDA BAM methods. Salmonella is further identified via serological methods, and if requested we offer bacterial identification via the bioMérieux VITEK 2 system. Fungal identification, if requested, is performed via PCR and microscopic methods. Mycotoxin testing is done via LC/MS/MS.

Our methods require 48 hr incubation. No AOAC or BAM method exists that allows for the quantification of yeast and molds with an incubation period of less than 48hrs.

Results are analyzed and a report is written. The report goes to QA for review; after QA signs off, the file pops up in our LIMS for me to review. Once I sign (digitally), the CofA is printed; I stamp the printed copy approved, initial and date it, and hand it off to our office QA team. They do a final review, then enter the data into Biotrack and send the Certificate of Analysis to the customer. By being open 7 days a week, and having personnel in the lab from 7 am to midnight throughout the week, we are able to return results within 2-3 days. Thus, while not technically 48 hrs, samples received in the lab on a Monday will be ready for release by Wednesday.

All of our I-502 SOPs are available to any client that would like to review them.

Currently we are ISO 17025 compliant, and are undergoing certification via http://www.a2la.org/

In regard to “Never Fail Micro Again,” that headline was a bit hyperbolic and was clarified in the email. We do not guarantee, and never have guaranteed, that a sample will pass micro testing after the application of microbial reduction methods (depending on harvest size, we have both dry steam and ionization methods available), but we have documented that the method does reduce bacterial and fungal contamination by 3-5 logs. This method has been validated, and a paper is currently pending publication in a peer-reviewed journal. I would be happy to send you a copy of the paper if you like. I did hear from a grower that you are also offering a similar service. I think it is great that you are being proactive in finding ways growers can reduce bacterial and fungal counts in harvested material, making product safer for public consumption.
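For readers less familiar with "log" reductions: each log is a factor of ten, so 3-5 logs means the surviving count is between 1/1,000 and 1/100,000 of the starting count. A minimal sketch of that arithmetic follows; the starting count is a hypothetical number chosen for illustration, not a measured result.

```python
import math

def log_reduction(cfu_before, cfu_after):
    """Log10 reduction achieved between two counts (e.g. CFU/g)."""
    return math.log10(cfu_before / cfu_after)

def surviving_count(cfu_before, logs):
    """Count remaining after a reduction of the given number of logs."""
    return cfu_before / 10**logs

# Hypothetical starting contamination of 1,000,000 CFU/g.
start = 1_000_000
for logs in (3, 5):
    print(f"{logs}-log reduction: {surviving_count(start, logs):,.0f} CFU/g remain")
```

Whether the remaining count passes still depends on the starting load and the applicable limit, which is consistent with the point above that treatment does not guarantee a pass.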

Clients often use microbial reduction methods before testing, while others have opted to use them after a failed result. In either case, if we go to the grower’s facility and use either microbial reduction method, they can have the material retested at any lab they choose. We do not offer discounts for retests after treatment and will charge the same amount per lot treated regardless of where the material was or will be tested.

You are correct that the LCB does not let anyone say “LCB Approved” for any service offered, but the LCB has confirmed numerous times, even after you called and complained to them, that the practice is not in violation of I-502 rules. A simple Google search will show that both microbial reduction methods are used throughout the spice, herb, grain, and agricultural industries.

Excellent data analysis as always from Straight Line Analytics and Dr. James MacRae. Thank you.

Clearly the data suggest possible incompetence, and the extreme statistical improbability suggests manipulation of results. Unfortunately, this type of behavior is very short-term thinking and negatively impacts the perception of the industry and Washington state’s system in particular. Obviously this also hurts labs that are highly competent and ethical as they are doing what labs SHOULD do – be objective purveyors of information to protect and assure consumers and ‘weed’ out producer / processors who can’t rise to a competitive standard – and must charge for professional services rendered.

Furthermore, not providing absolute assurance of safety for consumers and patients is not in accordance with Federal oversight vis-à-vis the Cole Memo.

Per the Seattle Times story referencing Dr. MacRae’s analysis, the WSLCB has a contract with a third-party company to allegedly assure lab competency and accuracy. But in fact, it is merely lab sufficiency certification. There is no testing of proficiency, nor is there any double-blind or secret-shopper confirmation to assure ethics / credibility.

I submit there needs to be a quick and decisive rules change regarding lab oversight and testing to correct this situation immediately and eliminate the folks and businesses who cannot conduct themselves professionally and ethically.

JUST WONDERFUL DATA!! Affirming what I thought I have been seeing… and it provides a needed stick to get the LCB to better do their job, if we’re to have a viable cannabis industry.

Would be great if the majority of growers wouldn’t threaten to leave a lab when a result comes back less than 20%. Every grower seems to think that what they grow is 25%, and if you tell them it’s not, you risk losing that customer. The LCB also requires that all results get entered into Biotrack, which includes flowers that get covered in kief or have oil smeared on them. Also curious as to why the sample submitted to a lab for testing is less than 1% of the 5 lb lot. This is unheard of in the food or agricultural industries. If growers want more representative results, they need to be willing to submit considerably more material for testing. Until then, any one data point for a sample tested is only representative of the 2-4 grams submitted, and does not represent the lot as a whole.
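For scale, the arithmetic behind that "less than 1%" figure can be sketched as below. The only inputs are the 2-4 gram sample and 5 lb lot sizes mentioned above plus the standard pound-to-gram conversion; the function name is just for illustration.

```python
GRAMS_PER_POUND = 453.59237  # exact definition of the avoirdupois pound

def sample_fraction_percent(sample_g, lot_lb):
    """Share of a lot (given in pounds) represented by a sample (given in grams)."""
    return 100.0 * sample_g / (lot_lb * GRAMS_PER_POUND)

for sample_g in (2, 4):
    pct = sample_fraction_percent(sample_g, 5)
    print(f"{sample_g} g of a 5 lb lot is {pct:.2f}% of the lot")
```

A 5 lb lot is roughly 2,268 g, so a 2-4 g sample works out to somewhere around 0.09% to 0.18% of the lot, well under the 1% mentioned.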

I don’t know how food/ag sampling works (specifically), but I know a great deal about sampling theory and the impact of different sampling methodologies on the robustness of estimates based on those samples.

I don’t disagree with most of what you say, but a properly sampled 2-4 gram sample should generalize well. At the same time, I suspect that what defines a “properly sampled” 4 gram sample might just entail a somewhat onerous (costly and/or complicated and exacting) set of requirements.

The larger the sample taken, the easier the definition of a “properly sampled” sample becomes.

I just don’t know what combinations of sample size and how “properly sampled” those samples were will yield acceptable results for this market.

I thought that was why we had experts like the American Herbal Pharmacopoeia gang, the College charged with Lab oversight, and a dedicated regulatory body helping us to ensure that the products making it to market are consistent with the promotion of public health and safety (….. in this case, to the extent that they minimize the chances of the product DEGRADING public health and/or safety).

There is a good deal of official/regulatory ignorance in many things relating to Cannabis. It’s getting a bit tiring to hear that again and again, given the millions of pages of relevant codes and rules and laws and standards that exist and are in place today keeping us all safe from ourselves and all kinds of bad things in the stuff we put into or on our bodies. One might think that some of those might be dusted off and used as analogies …. rather than apparently pulling things out of our arses.

I’ve seen a great range in potency from individual samples tested. For instance, from 100 retain samples, the remaining flower was subdivided into 3-5 samples from the original 1-2 gram retain. Each was run with an internal standard. The spread of THC results within the same sample ranged from ~0.5% to 5%, with the average being about 3.5%. The internal standard variance between samples was <0.01%. Thus, if the sample is not properly homogenized, or depending on the pick of the flower, one could get a potency varying by up to 5%. This was a relatively small sample set, but I think it makes a good case for reporting THC as a range rather than an exact number.
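To make the "report a range" idea concrete, here is a minimal sketch that summarizes replicate sub-sample results as a mean plus a low-to-high range. The replicate values are hypothetical, chosen only so the spread matches the roughly 3.5-point average mentioned above; they are not real lab results.

```python
from statistics import mean

def potency_range(replicates):
    """Summarize replicate THC results (% by weight) as mean, low, high, and spread."""
    if not replicates:
        raise ValueError("need at least one replicate")
    low, high = min(replicates), max(replicates)
    return {"mean": mean(replicates), "low": low, "high": high, "spread": high - low}

# Hypothetical results from four sub-samples of one retain.
subsamples = [18.2, 20.1, 21.7, 19.4]
summary = potency_range(subsamples)
print(
    f"THC: {summary['low']:.1f}% to {summary['high']:.1f}% "
    f"(mean {summary['mean']:.2f}%, spread {summary['spread']:.1f} points)"
)
```

A label built from the low and high values would tell the buyer more than the single mean, and far more than the single highest result.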

Is it possible that some labs, based on their region of the state and the demographics, might 1. have more experienced growers, with fewer mold issues and higher potency from the farms submitting samples around them, and 2. not suffer from microbes as much because of their growing style/climate/humidity/time of year the study was drawn from, etc.?

Or have these factors been ruled out already?

Thanks

Potential geographic differences have not been addressed, nor has the potential concentration of “all the good farmers” with a subset of the labs and “all the not-so-good-farmers” with another subset of the labs. That does not, of course, mean that these factors are impacting the results I’ve summarized. Seasonality should not be an issue, given the focus on a specific 3-month period.

I appreciate your thoughts (and implied suggestions).

The one thing that came to my attention was:

Table 3

Average THCmax %

Average (Calculated) THCmax % (where THCmax = THC + .877*THCA)

I thought it was: ∆9-THC-A * 0.877 + ∆9-THC
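The two expressions above are algebraically the same sum; the difference is only that the second one spells out that the THC in question is ∆9-THC. A minimal sketch of the calculation, with made-up input values, looks like this. The 0.877 factor is approximately the ratio of the molar mass of THC to that of THCA, reflecting the mass lost when THCA decarboxylates.

```python
THCA_TO_THC = 0.877  # approximate mass conversion factor for decarboxylated THCA

def thc_max(d9_thc_pct, d9_thca_pct):
    """Calculated THCmax (% by weight) from measured delta-9 THC and THCA."""
    return d9_thc_pct + THCA_TO_THC * d9_thca_pct

# Hypothetical flower result: 1.0% delta-9 THC and 20.0% THCA.
print(f"THCmax = {thc_max(1.0, 20.0):.2f}%")
```

Note that this sketch deliberately takes only delta-9 values as inputs; a lab that also folded delta-8 into "THC" would be computing a different number.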

Nancy … I believe that you are correct.

Folks generally are referring to the delta-9 version when they speak of THC. I’m assuming that (most of) the labs are doing the same.

Given what one of the labs did with the “TOTAL” metric, though, I would not be surprised if they added deltas 8 and 9 together in order to calculate their “THC” values. … now, if only I knew how to type the delta symbol in greek.

Thanks.

PS – I can’t type it either, I have to copy & paste.

Adam — I’m glad my lab work was of interest to you … and thank-you for sharing your blog with me.

Folks: Check out cannabiscandor.com ..

It looks as if it focuses on things relevant to the agricultural / horticultural end of the industry.

Some interesting and thought-provoking ideas.

Hey Confidence be careful what you wish for when you want to be the WalMart of testing.

http://money.cnn.com/2016/01/15/news/companies/walmart-store-closings/

From 11.3% to less than 3.75% failure rate in a year! Wow, there’s some truthiness there … Really, nice work. I’m glad your team was able to fix all your growers’ mold issues; perhaps you should publish a book on that. Then again, you could merge with TT or GG and utilize their 24-hour turn times … then you would be the Willy Wonka of labs too.

Good Stuff

“Dean” – Best to be nice if/when calling out specific individuals and/or businesses.

I’m not sure from where you sourced the 11.3 to 3.75% numbers.

More importantly, although one poster speculated about what code his/her lab was assigned, I have neither confirmed nor denied any such code-to-business links.

Call out a Lab Code, if you wish … but not a person or business.

Unless, of course, your posting is based on something other than the lab summaries I’ve posted.

Thanks

Jim,

Hey brother, I’m just reporting what I see and hear from others. The data I quoted was right off the Confidence web site. This was another quote, right from Nick in the Seattle Times…

“First of all, an experiment really shouldn’t have a predetermined outcome,” said Nick Mosely, the chief scientist at Confidence Analytics. “This guy had a conclusion before he did an experiment. It’s a piss-poor experiment right off the bat.”

I thought that a predetermined outcome in science was referred to as a “hypothesis”, which is the basis for all scientific methods?

hy·poth·e·sis

hīˈpäTHəsəs/

noun

a supposition or proposed explanation made on the basis of limited evidence as a starting point for further investigation.

Just pointing out how piss poor “piss poor” looks in scientific jargon

Dean,

You’ve misquoted me there. The Seattle Times article you’re referring to was from May of last year. That was in reference to an investigation by Gil Mobley… the same doctor who said Ebola was about to devastate the US from Africa and warned that Uncle Ike’s was selling ebola-infested weed. His “experiment” was non-empirical and biased.

Our lab is neither the cheapest, nor the highest potency, nor the lowest pass rate. I don’t know why you’ve compared us to Walmart.

Cheers,

Nick

Dean,

You’ve misquoted me there. The Seattle Times article you’re referring to was from May of last year. That was in reference to an investigation by Gil Mobley… the same doctor who went on Fox News and claimed to be an expert on how ebola was about to devastate the US from Africa. He also stood out front of Uncle Ike’s and warned consumers of ebola-infested weed. His “experiment” was full of confirmation bias.

con-fir-ma-tion bi-as

noun

the tendency to interpret new evidence as confirmation of one’s existing beliefs or theories.

Our lab is neither the cheapest, nor the highest potency, nor the lowest fail rate. We’re right in the middle on all of those metrics. In the middle of the curve is where every good lab wants to be. I don’t know why you’ve compared us to Walmart.

Cheers,

Nick

Thanks for the report Jim it is very helpful and raises some real red flags.

1. When testing bud how is it sampled? The THC rich portion of the uppermost flowers? Until there is a standard protocol for sampling (which could be done under a video camera) I am not sure these numbers are meaningful. Extractions should be simpler, but there is still the potential to treat the samples differently than the product (like enhanced purging).

2. Until producers/processors are required to send out a certain percent of their tests (maybe 10%?) to multiple labs simultaneously for blind head-to-head comparisons, there can’t be an accurate comparison. There are so many variables in the genetics, growing conditions, harvesting, drying, curing, and packaging methods, as well as storage time before testing, used by producers/processors that better data is essential.

Dave:

Bud is pretty much sampled however the sampler feels like sampling.

For the most part, I’d imagine that has been a sampling of the best that a given plant or lot has to offer.

There is lots of talk about more rigid/objective/representative sampling. We’ll see what is decided.

Without representative sampling, samples are – well – NON-representative.

I’d advocate for occasional blind testing of the labs (give them a sample with a known profile and see what they return for potency / occasionally give them something with a known “pathogen” and see if they catch it). Having them truly blinded to this activity is key to it helping to curb bullshit test results.

Remember that it is the LABS that are the bad actors here (some of them, at least). The farmers and processors and retailers and consumers and patients (i.e., the entire friggin’ market) are the victims of their Friendliness.

Pingback to an article that indicates that Canada is seeing issues with non-objective lab results similar to those once thought to have been seen in Washington State.

Thank-you for letting me know you had cited my work.

Much appreciated.

Thanks for the citation. I tend to agree with you.

At least in Washington — and, apparently, Nevada — the labels on the product are not a good predictor of either the potency or the safety/quality of what is actually in the package.

For consumers that care about what they are putting into their bodies, I’d be inclined to recommend that they get to know the growers in question and only go with the ones they trust to be doing the right thing. Either that or go with the grow situation in which you best know what is being done with the plants (e.g. “grow your own”).