Global climate models (GCMs) are designed to simulate Earth’s climate over the entire planet, but their heavy computational demands limit how much local detail they can describe. There is a nice TED talk by Gavin that explains how climate models work.

We need to apply downscaling to compute the local details. Downscaling may be done through empirical-statistical downscaling (ESD) or regional climate models (RCMs) with a much finer grid. Both take the crude (low-resolution) solution provided by the GCMs and include finer topographical details (boundary conditions) to calculate more detailed information. However, do more details translate into a better representation of the world?
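For readers unfamiliar with ESD, the idea in its simplest form can be sketched in a few lines of Python: calibrate a statistical link between a large-scale predictor (for instance a GCM grid-box temperature) and a local predictand (a station series) over a period with observations, then apply that link to model output. This is a minimal sketch under stated assumptions: a single predictor, a linear relationship that stays valid in a warmer climate, and entirely synthetic data. The function names `fit_esd` and `downscale` are hypothetical, and practical ESD typically uses several predictors (e.g. principal components of large-scale fields) and careful cross-validation.

```python
import numpy as np

def fit_esd(large_scale, local):
    """Fit local = intercept + slope * large_scale by ordinary least squares."""
    slope, intercept = np.polyfit(large_scale, local, deg=1)
    return intercept, slope

def downscale(large_scale, intercept, slope):
    """Apply the calibrated statistical link to new large-scale values."""
    return intercept + slope * np.asarray(large_scale)

rng = np.random.default_rng(0)
# Synthetic calibration period (all numbers are made up): a coarse grid-box
# temperature and a station series that depends on it linearly plus local noise.
gcm_hist = rng.normal(10.0, 2.0, size=360)
station_hist = 1.5 + 0.8 * gcm_hist + rng.normal(0.0, 0.5, size=360)

a, b = fit_esd(gcm_hist, station_hist)
gcm_future = gcm_hist + 2.0              # hypothetical uniform 2-degree large-scale warming
station_future = downscale(gcm_future, a, b)
print(f"fit: local = {a:.2f} + {b:.2f} * large_scale")
print(f"downscaled warming at station: {station_future.mean() - station_hist.mean():.2f}")
```

Because ordinary least squares passes through the sample means, the downscaled change here is simply the fitted slope times the large-scale change; the local answer is only as good as the assumption that the calibrated link holds outside the calibration period.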

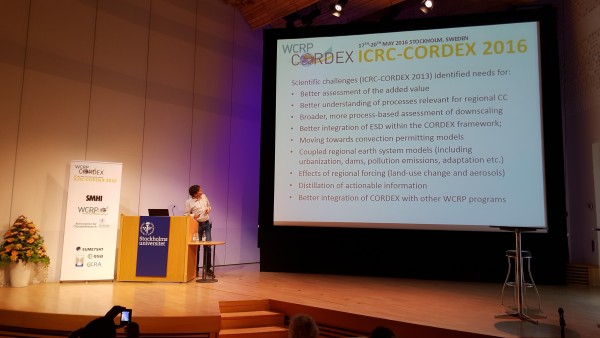

The question of “added value” was an important topic at the International Conference on Regional Climate, hosted by CORDEX of the World Climate Research Programme (WCRP). The take-home message was mixed on whether RCMs provide a better description of local climatic conditions than the coarser GCMs.

RCMs can add details such as the influence of lakes, sea breezes, mountain ranges, and sharper weather fronts. However, the systematic differences between RCM results and observations are not necessarily smaller than those for GCMs.

There is a distinction between an improved climatology (basically because of topographic details influencing rainfall) and higher skill in forecasting change, which is discussed in a previous post.

Global warming implies large-scale changes as well as local consequences. The local effects are moderated by fixed geographical conditions. It is through downscaling that this information is added to the equation. The added value of the extra efforts to downscale GCM results depends on how you want to make use of the results.

The discussion during the conference left me with a thought: Why do we not see more useful information coming out of our efforts? An absence of added-value is surprising if one considers downscaling as a matter of adding information to climate model results. Surprising results open the way for new research and call for explanations of what is going on. But added-value depends on the context and on which question is being asked, and often this question is not made explicit.

There are also complicating matters such as the varying effects that arise when one combines different RCMs with different GCMs (known as the “GCM/RCM matrix”) or whether you use ESD rather than RCMs.

I think that we perhaps struggle with some misconceptions in our discourse on added-value. Even if RCMs cannot provide high-resolution climate information, it doesn't imply that downscaling is impossible or that it is futile to predict local climate conditions.

There are many strategies for deriving local (high-resolution/detailed) climate information in addition to RCM and ESD.

Statistics is often predictable and climate can be regarded as weather statistics. The combination of data with a number of statistical analyses is a good start, and historical trends provide some information. It is also useful to formulate good and clear research questions.

I don't think it's wrong to say that statistics is a core issue in climatology, but climate research still has some way to go in terms of applying state-of-the-art methods.

I have had very rewarding discussions with statisticians from NCAR, Exeter, UCL, and Computing Norway, and looking at a problem from a statistical viewpoint often gives a new angle. It may give a new direction when progress goes in circles.

For instance, some perspectives on extremes are still missing: present work includes a set of indices and return-value analysis, but excludes record-breaking event statistics (Benestad, 2008) and event-count statistics (Poisson processes).
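Both perspectives rest on simple, well-known results. If a series consists of independent and identically distributed values (no trend, no ties), the k-th value is a new record with probability 1/k, so the expected number of records in n values is the harmonic number H_n; an excess of records over this baseline hints at a changing climate. Event counts in a fixed interval are often modelled as a Poisson process with rate λ, so that P(N = k) = λ^k exp(−λ) / k!. The Python sketch below uses synthetic standard-normal data and hypothetical function names; it illustrates these textbook results and is not meant to reproduce the method of Benestad (2008).

```python
import math
import numpy as np

def count_records(series):
    """Number of record-breaking (new maximum) values, counting the first value."""
    records, current_max = 0, -math.inf
    for x in series:
        if x > current_max:
            records, current_max = records + 1, x
    return records

def expected_records_iid(n):
    """For n iid values (no ties) the k-th value is a record with probability 1/k,
    so the expected number of records is the harmonic number H_n."""
    return sum(1.0 / k for k in range(1, n + 1))

def poisson_pmf(k, rate):
    """Probability of exactly k events in an interval for a Poisson process with the given rate."""
    return rate ** k * math.exp(-rate) / math.factorial(k)

rng = np.random.default_rng(1)
n_years, n_series = 100, 2000
sims = rng.normal(size=(n_series, n_years))    # stationary climate: no trend at all
mean_records = np.mean([count_records(s) for s in sims])
print(f"simulated records: {mean_records:.2f}, theory H_{n_years} = {expected_records_iid(n_years):.2f}")
print(f"P(no event in a year, rate 0.5 per year) = {poisson_pmf(0, 0.5):.3f}")
```

With a century of stationary data one should expect only about five records on average, so noticeably more than that is a hint of non-stationarity.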

Another important issue is to appreciate the profound meaning of random samples and sample size. This matters especially for extreme events, which by definition (being the tails of the distribution) always involve small statistical samples; we should therefore expect to see patchy and noisy maps due to random sampling fluctuations.
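A quick way to see how much patchiness sampling alone produces is to simulate a map on which every grid cell has exactly the same climate, and count how often a rare threshold is exceeded. The sketch below assumes a hypothetical 20 × 20 grid, 30 independent annual values per cell, and a threshold at the true 90th percentile. The count in each cell is then binomially distributed with mean 3 and a standard deviation of about 1.6, so the map looks spotty even though nothing differs geographically.

```python
import numpy as np
from statistics import NormalDist

rng = np.random.default_rng(2)
ny, nx, n_years = 20, 20, 30
# Every grid cell has exactly the same climate: independent standard-normal annual values.
values = rng.normal(size=(ny, nx, n_years))
threshold = NormalDist().inv_cdf(0.9)          # true 90th percentile, identical everywhere
counts = (values > threshold).sum(axis=2)      # number of exceedances in each cell

expected = 0.1 * n_years
binomial_sd = np.sqrt(n_years * 0.1 * 0.9)
print(f"expected count per cell: {expected:.1f} (sd {binomial_sd:.2f})")
print(f"map range: {counts.min()} to {counts.max()}, map sd: {counts.std():.2f}")
```

Comparing the spread of a real extreme-value map with this kind of null spread is one way to judge how much of the patchiness carries geographical information.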

Patchy maps were (of course) presented for extreme values at the conference, but we can extract more information from such analyses than just concluding that extremes are very geographically heterogeneous. Such maps reminded me of a classical mistake whereby samples of different sizes are compared directly, as with the zonal means still found in recent IPCC assessment reports (Benestad, 2005).
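The sample-size problem can be illustrated with band averages: if every latitude band draws from the same distribution but contains a different number of grid points or stations, the scatter of the band means shrinks as σ/√n. The sample sizes below are hypothetical and the data are synthetic normal draws; differences between bands of this kind say nothing about the climate, only about n.

```python
import numpy as np

def spread_of_means(sample_sizes, n_trials=5000, sigma=1.0, seed=3):
    """Standard deviation of the sample mean for each sample size, estimated by simulation.
    Every 'band' draws from the same N(0, sigma^2) distribution; only n differs."""
    rng = np.random.default_rng(seed)
    result = {}
    for n in sample_sizes:
        band_means = rng.normal(0.0, sigma, size=(n_trials, n)).mean(axis=1)
        result[n] = band_means.std()
    return result

spreads = spread_of_means([5, 20, 80, 320])    # e.g. stations per latitude band (hypothetical)
for n, s in spreads.items():
    print(f"n={n:4d}: spread of band mean {s:.3f}, theory sigma/sqrt(n) {1.0 / np.sqrt(n):.3f}")
```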

There were a number of interesting questions raised, such as “What is information?” Information is not the same as data, and we know that observations and models often represent different things. Rain gauges sample an area of less than 1 m², phenomena producing precipitation often have scales of square kilometres, and most RCMs predict the area average for 100 km². This has implications for model evaluation.
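To make the scale mismatch concrete, here is a toy rain field for one 100 km² grid box split into 100 cells of 1 km². The parameters (how often a rain-bearing system passes, what fraction of cells then get rain, and an exponential intensity distribution) are made-up assumptions, not a model of any real climate. The gauge and the grid box share the same long-term mean, but the grid box is wet far more often and never sees the intense point values, which is why gauge observations and RCM output should not be compared at face value.

```python
import numpy as np

rng = np.random.default_rng(4)
n_days, n_cells = 10_000, 100                       # 100 cells of 1 km^2 = one 100 km^2 grid box
p_event, p_cell, mean_intensity = 0.3, 0.4, 10.0    # hypothetical rain-field parameters

event_day = rng.random(n_days) < p_event            # is a rain-bearing system present over the box?
wet_cell = (rng.random((n_days, n_cells)) < p_cell) & event_day[:, None]
rain = np.where(wet_cell, rng.exponential(mean_intensity, (n_days, n_cells)), 0.0)

gauge = rain[:, 0]            # a rain gauge samples a single point (one cell here)
box = rain.mean(axis=1)       # a model grid box represents the area average

for name, x in [("gauge", gauge), ("grid box", box)]:
    print(f"{name:8s}: mean {x.mean():.2f} mm/d, wet-day freq {np.mean(x > 0):.2f}, "
          f"max {x.max():.1f} mm/d")
```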

When it comes to model performance, a concept known as “bias correction” was debated. It is still a controversial topic and has been described as a way to get the “right answer for the wrong reason”. If it is not well understood, it may increase the risk of maladaptation through overconfidence.
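For concreteness, one widely used family of bias-correction methods is empirical quantile mapping: each model value is replaced by the observed value at the same quantile of the calibration-period distributions. The sketch below assumes synthetic Gaussian “observations” and a model that is too cold and has too little variance. The function `quantile_map` is a hypothetical helper, not any particular package’s API, and operational implementations treat extrapolation, seasonality and precipitation intermittency with much more care.

```python
import numpy as np

def quantile_map(model_hist, obs_hist, model_values, n_quantiles=99):
    """Empirical quantile mapping: replace each model value by the observed value
    at the same quantile of the calibration-period distributions."""
    probs = np.linspace(0.01, 0.99, n_quantiles)
    model_q = np.quantile(model_hist, probs)
    obs_q = np.quantile(obs_hist, probs)
    # np.interp is piecewise linear and clamps values outside the calibrated range
    return np.interp(model_values, model_q, obs_q)

rng = np.random.default_rng(5)
obs_hist = rng.normal(15.0, 3.0, 3000)       # synthetic observations
model_hist = rng.normal(13.0, 2.0, 3000)     # synthetic model: too cold, too little spread
model_future = model_hist + 1.0              # the raw model warms by exactly 1 degree

corrected_hist = quantile_map(model_hist, obs_hist, model_hist)
corrected_future = quantile_map(model_hist, obs_hist, model_future)
print(f"corrected historical mean {corrected_hist.mean():.2f} (obs {obs_hist.mean():.2f})")
print(f"raw change 1.00, corrected change {corrected_future.mean() - corrected_hist.mean():.2f}")
```

The corrected historical mean matches the observations by construction, but the mapping also stretches the raw model warming of 1 degree to roughly 1.5 degrees, because the observed spread is larger than the modelled one. This kind of silent modification of the change signal is one reason the method is debated.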

Related issues included ethics, as well as a term that seemed to invite a range of different interpretations: ”distillation”. My understanding of this concept is the process of extracting the essential climate information needed for a specific purpose. However, such terms are not optimal when they are non-descriptive.

Another such term is “climate services”; however, there have been some good efforts at explaining it, e.g. putting climate services in farmers' hands.

Much of the discussion during the conference was from the perspective of providing information to decision-makers, but it might be useful to ask “How do they make use of weather/climate information in decision-making? What information have they used before? What are the consequences of a given outcome?” In many cases, a useful framing may be in terms of risk management and co-production of knowledge.

The perspective of how the information is made use of cannot be ignored if we are going to answer the question of whether the RCMs bring added-value. However, it is not the task of CORDEX to act as a climate service or get too involved with the user community.

Added value may be associated with either a science question or how the information is used to aid decisions, and the WCRP has formulated a number of “grand challenges”. These “grand challenges” are fairly general, and we need “sharper” questions and hypotheses that can be subjected to scientific tests. Some experiments have been formulated within CORDEX, but at the moment these are only a first step and do not really address the question of added value.

On the other hand, added value is not limited to science questions, and CORDEX is not just about specific science questions; it should also be topic-driven (e.g. developing downscaling methodology) to support the evolution of the research community and its capacity.

Future activities under CORDEX may be organised in terms of “Flagship pilot studies” (FPS) for scientists who want an official “endorsement” and more coordination of their work. CORDEX may also benefit from more involvement with hydrology and statistics.

Gavin, interesting topic. I can think of processes where storm types and tracks can make dramatic differences across short distances. For instance, for a mountain range, the direction the winds are blowing during the event can have a huge influence on whether the east or west slopes get the most precipitation. And this can differ depending on the type of storm: orographic, or driven by convective instability. For the former, the upslope side usually gets the lion’s share, but for convective precipitation (summer thunderstorms), the mountain usually serves as a seed for convection, and the downwind side gets the rain after the storms mature downwind. For many watersheds, it would be valuable to be able to predict how the precipitation would change on each side of the range.

When the global models do not work, there’s little point even trying long term regional models.

However, when we get to the stage of focussing on what is important, short-range regional models will become important for short-range forecasts on the week-to-month scale.

@ 2. Comment by Mike Haseler (Scottish Sceptic) — 22 May 2016 @ 11:58 AM

“When the global models do not work, there’s little point even trying long term regional models.”

Which logical fallacy is this?

MH@2

“Global models do not work” Really? I’ve seen plenty of graphs of what can be considered calibration runs of models that simulate past observed climate. The agreement is amazing. With such good agreement one should therefore be confident of projections into the future made by these same models.

Unfortunately the models cannot know the degree of stupidity that humans will display in coming decades. We (collectively) could be amazingly stupid and continue with business as usual. We could be very stupid and make only minor changes. Or we could be just ordinarily stupid and make more but still insufficient changes. How are the people running the models supposed to know? That’s why scenarios were invented.

The global models do work; it’s just that we don’t know how stupid humankind is going to be.

Rasmus, I don’t think I would be the only person who gets confused by the words used in climate science. I often see people being taken to task for saying GCMs failed to predict xyz, and being told that the GCMs (especially in the IPCC) were not predictions in the first place. The glossaries in the IPCC reports do define these terms, but imo the definitions are still confusing for the lay person, because they chop and change words in their definitions and are not clear about how or when they apply.

For example, the glossary entries for “Climate forecast” (see “Climate prediction”), “Climate projection”, “Climate scenario”, “Predictability” and “Prediction quality/skill”, and the statement that “A projection is a potential future evolution of a quantity or set of quantities … Unlike predictions, projections are conditional on assumptions …”.

https://www.ipcc.ch/pdf/assessment-report/ar5/wg1/WG1AR5_AnnexIII_FINAL.pdf and

Climate models are applied as a research tool to study and simulate the climate, and for operational purposes, including monthly, seasonal, and interannual climate predictions.

https://www.ipcc.ch/pdf/assessment-report/ar5/wg2/WGIIAR5-AnnexII_FINAL.pdf

Rasmus, in your article you say: “or that it is futile to predict local climate conditions.”, “Statistics is often predictable and climate can be regarded as weather statistics.” and “most RCMs predict the area average for 100 km²”. You don’t use any of the other words listed.

Now this may seem pedantic or irrelevant to some, but I think it’s critical if climate scientists want lay people and politicians (and even deniers) to actually understand what you are trying to get across every time with clarity.

So, Rasmus, do (or will) RCMs provide climate predictions or not? Or does it not really make a difference any more? In the past I thought these words really mattered. Thx

http://www.etymonline.com/index.php?term=prediction

http://www.etymonline.com/index.php?term=predict

http://www.oxforddictionaries.com/definition/english/prediction

Warning: http://www.cordex.org wants you to install a new version of flash player. Since flash player wants you to install a new version every time you encounter it, I do not trust flash player. When are they going to get a stable version? Or is it a virus installer?

#4 Digby Scorgie is right. Local officials won’t consider the idea that their whole city should be moved 100 miles inland or abandoned. They want you to tell them how to fix the town where it is now, as it is now, and for no money. And they want definitive answers to political questions. They can’t handle the idea that probability is involved at all.

If you can’t deliver a perfectly accurate 100 year weather forecast, they think that you are no good.

Making models work on past observed climate is not hard. It is curve fitting. What is amazing about that?

@rasmus: ‘climate can be regarded as weather statistics’

Just a quibble, but: is there any other way to regard climate? Wikipedia at least seems unequivocal: ‘Climate is the statistics (usually, mean or variability) of weather'[1].

[1]: https://en.wikipedia.org/wiki/Climate

Another student question, regarding the following pair of statements:

@rasmus: ‘Downscaling may be done through empirical-statistical downscaling (ESD) or regional climate models (RCMs)’

but

@rasmus: ‘There are many strategies for deriving local (high-resolution/detailed) climate information in addition to RCM and ESD.’

What are the other strategies for *deriving* high-resolution climate information? One presumably only seeks to derive or model information when it does not already exist–in this case, when one does not have sufficiently-reliable observations of sufficiently-fine resolution over the desired spatiotemporal domain. Given that, IIUC, there are only 2 ways to downscale: either deterministically (i.e., RCM) or statistically (i.e., ESD) … or am I missing something? Pointers to doc esp appreciated.

Many are familiar with time-based spectral concepts like aliasing and band limiting. Yet these apply to spatial samples as well, with wavenumber playing the role of frequency. The same bandwidth-product uncertainty relations apply in space as in time.
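The point about spatial aliasing can be checked numerically: a feature shorter than two grid spacings cannot be represented on the grid and reappears at a spurious, longer wavelength. In the sketch below, the 10 km grid, the 600 km domain (60 points) and the 12 km and 50 km test wavelengths are arbitrary illustrative choices. The sampled 12 km wave shows up with an apparent wavelength of 60 km, since its wavenumber of 1/12 km⁻¹ folds back to |1/12 − 1/10| = 1/60 km⁻¹, while the 50 km wave (longer than the 20 km Nyquist wavelength) is recovered correctly.

```python
import numpy as np

def apparent_wavelength(true_wavelength_km, grid_spacing_km, n_points):
    """Sample a cosine of the given wavelength on a regular grid and return the
    wavelength of the dominant Fourier component seen in the samples."""
    x = np.arange(n_points) * grid_spacing_km
    samples = np.cos(2 * np.pi * x / true_wavelength_km)
    spectrum = np.abs(np.fft.rfft(samples))
    freqs = np.fft.rfftfreq(n_points, d=grid_spacing_km)   # cycles per km
    peak = freqs[1:][np.argmax(spectrum[1:])]                # skip the zero-wavenumber (mean) bin
    return 1.0 / peak

print(apparent_wavelength(12.0, 10.0, 60))   # 12 km feature on a 10 km grid: aliased
print(apparent_wavelength(50.0, 10.0, 60))   # 50 km feature: resolved
```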

The conversation about informing policy ought not be one way. Loss functions over space and time are very useful when constructing recommendations for leadership, not to mention inference.

DC@4

“Unfortunately the models cannot know the degree of stupidity that humans will display in coming decades.”

Can the models deal with infinity? :-)

As Einstein said: “There are two things that are infinite, the universe and human stupidity, and I’m not sure about the former.”

I am very skeptical that on a global population level, humans will bother to do anything other than lip service when it comes to addressing climate change and the changes to our lifestyle required to significantly reduce our emissions, until it is far too late. I would love to be proved wrong, but (my limited) observations so far suggest I’m not far out.

Mike Haseler (Scottish Sceptic),2: Read the next post, on the AMOC and the specified CM2.6 coupled climate model, please. It’s quite accessible. When you have finished, I’ll suggest a second step, and I’ll name your fallacy. Thanks for your attention.

Alf:

I’ll take Impossible Expectations for $6 trillion, Alf.

@3 Alf: “Which logical fallacy is this?”

I believe that would be the argument from false premises.

Denier Mike Haseler wrote: “the global models do not work”

Moderators, please Bore Hole this troll.

the three laws of climate models

1. All climate models are wrong.

2. Earth climate is chaotic and unpredictable.

3. Climate models can be useful.

that is my current position. I think this is more nuanced and accurate than “climate models are shite” or “climate models don’t work”.

Mike

I’m curious, what role do recent advances in deep learning have in helping infer local effects from larger-scale climate model results? While this probably should be considered a statistical approach, it does seem to be of a different kind than the common usage of that phrase. Some searching indicates this has at least been tried – is there any effort to make it more common or operational? Would a well-trained network be fast enough to generate useful sub-grid-scale info during a model run? The more common use seems to be pattern detection after the fact, which in itself could be quite beneficial.

On the reliability of climate models, the InsideClimateNews report on Exxon’s climate research efforts of the late 1970s and early 1980s makes for interesting reading:

http://insideclimatenews.org/news/15092015/Exxons-own-research-confirmed-fossil-fuels-role-in-global-warming

Those estimates have been refined since then, but the projections are within range of how things are turning out. Other successful projections include modeling the atmospheric response to the Pinatubo eruption. All in all, confidence is pretty good, by any rational measure.

On global vs. local, how about the global model prediction of a deepening and widening of the tropical atmospheric circulation, which leads to the Hadley cell expansion and the projection of the dry zones expanding polewards. This general prediction seems ominous, but what does it mean for California, India, Spain, etc.? Will southern Europe end up looking like North Africa? Central California like Baja California? Will El Nino years be the only years with anything like 20th century ‘normal’ rainfall levels across the southwestern United States, as we move into a permanent drought regime? Can these regional models answer these questions with much certainty, over the next 50 years, say?

If politicians and media and businesses can trust these projections, then it has implications for infrastructure planning (perhaps we can all live underground, like termites in the desert with those nifty air conditioning systems their tunnels provide?).

This is where bad policy choices and human fallibility come into play. As others have noted, Katrina the Hurricane didn’t have to give rise to Katrina the Human Disaster; scientists and engineers had given much advance warning about the need for new levees and better infrastructure. Given that warming over the next 50 years seems inevitable, some serious long-term planning is needed – but financial centers seem to have a hard time looking beyond next quarter’s results, and the politicians don’t seem to look much farther than the next election cycle. How do we move to more long-term thinking?

Similar issues apply to helping out farmers in the developing world – for example, while shiploads full of grain might seem like a good response to regional drought, in practice that may be the worst option as it destroys local markets for poor farmers who then can’t afford to buy seed and fertilizer for the next growing season. Solar- or wind-powered water pumps that allow such farmers to tap into aquifers in dry spells are a much better kind of aid.

At #8, Dan:

Making models work on past climate is not curve fitting–you should do more research on climate models.

In a GCM hindcast, the model is forced with the known changes in insolation, volcanism, human sulfate aerosols, and GHG emissions, and one checks whether the model follows the known trajectory in temperature, precipitation, etc. This has been done many times. With a model it is also possible to remove or add one of the influences and then see how important that influence has been to the total change. You should look at Chapter 9 of the latest IPCC report, downloadable from IPCC.ch
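The difference between forcing a model and fitting a curve can be illustrated with a toy version of such an experiment: a one-box energy balance model, C dT/dt = F(t) − λT, is driven by prescribed forcings and never sees the temperature record, and the contribution of one forcing is estimated by rerunning without it. Everything here is hypothetical: the linear greenhouse ramp, the two-year volcanic pulse, and the values of C and λ are illustrative, and a GCM is of course vastly more complex than this sketch.

```python
import numpy as np

def energy_balance(forcing, heat_capacity=8.0, feedback=1.2, dt=1.0):
    """One-box energy balance model, C dT/dt = F - lambda*T, stepped with forward Euler.
    forcing in W m-2, heat_capacity in W yr m-2 K-1, feedback (lambda) in W m-2 K-1, dt in years."""
    temps = np.zeros(len(forcing))
    for i in range(1, len(forcing)):
        temps[i] = temps[i - 1] + dt * (forcing[i - 1] - feedback * temps[i - 1]) / heat_capacity
    return temps

years = np.arange(1900, 2001)
ghg = 0.02 * (years - 1900)                                          # hypothetical linear ramp, W m-2
volcanic = np.where((years >= 1991) & (years <= 1992), -3.0, 0.0)    # hypothetical eruption pulse

all_forcings = energy_balance(ghg + volcanic)
no_volcano = energy_balance(ghg)
effect = all_forcings - no_volcano      # contribution attributed to the volcanic forcing
print(f"largest volcanic cooling: {effect.min():.2f} K in {years[np.argmin(effect)]}")
```

Because this toy model is linear, the difference between the two runs is exactly the response to the volcanic forcing alone; in a GCM, with nonlinear interactions and internal variability, such attribution needs ensembles of runs.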

“Making models work on past observed climate is not hard. It is curve fitting.”

No, it isn’t. ‘Curve fitting’ is a statistical procedure; GCMs numerically simulate the actual physics. An entirely different thing.

For Dan Da Silva:

https://www.realclimate.org/index.php/archives/2011/12/curve-fitting-and-natural-cycles-the-best-part/

Shorter: yes, there are people who try to model climate by curve-fitting.

Humlum did. So did Spencer:

https://bbickmore.wordpress.com/2011/03/01/roy-spencers-great-blunder-part-3/

No, it doesn’t work out well.

If you think that’s how it’s done, you’re reading the wrong people.

Dan wouldn’t know a computer model if it smacked him in the face. Curve-fitting my arse.

Very good new book on climate models: “Demystifying Climate Models” by Andrew Gettelman and Richard B. Rood. It is open access and can be downloaded as an ebook or PDF for free. The print book is around $50. It is well written for the layman. I highly recommend it, especially for those who believe “climate models don’t work.” There is no excuse for denier ignorance, since it can be obtained absolutely free.

#20, #21: OK, it is not curve fitting. If you adjust variables to fit history and the process helps future models, then it is useful. Otherwise it is a tiny bit like curve fitting. My question is: how do you know when you are making useful improvements?

techish-optimist-today asked “I’m curious, what role do recent advances in deep learning have in helping infer local effects from larger-scale climate model results? While this probably should be considered a statistical approach, it does seem to be of a different kind than the common usage of that phrase. Some searching indicates this has at least been tried – is there any effort to make it more common or operational? Would a well-trained network be fast enough to generate useful sub-grid-scale info during a model run? The more common use seems to be pattern detection after the fact, which in itself could be quite beneficial.”

I’ve experimented quite a bit with machine learning on climate data. For example, one series of experiments found that QBO is likely forced by seasonally-aliased monthly tidal cycles. This should be used as input to the larger models, as I don’t know if anyone has realized the tidal connection before. Lindzen hinted at it but he failed to find any connection (and is now retired, so that’s that).

Doing the same kind of machine learning with ENSO is a tougher nut to crack, but from what I have learned with QBO, one can also find related forcings with ENSO. For example, the biennial component in the ENSO forcing is very strong. Again, simpler models are needed to “prime the pump” for the larger GCMs, as it seems almost impossible to generate the deterministic output necessary to simulate QBO and ENSO behavior. These climate behaviors are better described as non-autonomous systems and so require the correct forcing. From the looks of the way the GCMs are set up, they appear to be formulated as autonomous systems, expected to spontaneously oscillate, which I think is not physically correct. Think in terms of ocean tides; these are not spontaneous oscillations but are always forced by lunisolar cycles. QBO and ENSO are closer to that than I think anyone realizes, or is willing to admit (check out NASA JPL memos for some contrarian views).

And as far as “curve fitting” is concerned, name a physics model that does not involve a curve of some type! It could be a 2D curve or a 3D surface or some other manifold, but everything in physics is described by curves. The act of curve fitting can be used to extract parameters, and doesn’t have to be statistical. In fact, something like ENSO is not statistical at all — it is a single behavior described by a single standing-wave that covers a large expanse of the equatorial Pacific. There is not a set of ENSO behaviors to draw from, as if it was a statistical phenomenon. So the curve to be fit in the case of ENSO is a complicated standing-wave oscillation — likely more complex than a tidal gauge time series, but potentially doable. Why can’t GCMs model this behavior in terms of a curve fit for long stretches of time? I think climatologists punt on this task, believing it hopeless and following Tsonis’ suggestion that it is likely chaotic (Tsonis is the guy who just joined the GWPF as committee member alongside Lindzen this month, ugh).

This may all sound provocative, but you never know what you will find until you try it. To get back to the original question, machine learning, deep learning, and data mining are proper for these kinds of analyses because you can let a computer waste its time looking down dead-ends and you don’t have to do that yourself. Only a few climate science groups are looking at this approach.

My analysis is at ContextEarth.com, with more threaded discussions at AzimuthProject.org under the ENSO and QBO topic headings. Good place for an extended discussion on deep learning topics since comments are not moderated and equation markup, graphs, charts, and CURVES TOO! are easy to post.

We recently had a longish “pause” in global temperatures where it seems the excess heat sequestered itself in the oceans, only to emerge quite spectacularly in the last couple of years. I imagine this sort of thing would be even more of a problem with regional models.

For example, since the mid-1970s winter rainfall in Perth, Western Australia, has plummeted. Is this a genuine regional feature of climate change, or has this rain temporarily gone elsewhere, and will it come back as quickly as it left?

I’m quite happy with efforts to predict the big scale climate – global warming, polar amplification, minimum temps rising faster than maximums, etc. But trying to figure out how some small particular part of a chaotic system responds to gradual heating seems a bit ambitious.

The answer from our research activities to this question can be found, for example, in these papers:

Pielke Sr., R.A., and R.L. Wilby, 2012: Regional climate downscaling – what’s the point? Eos Forum, 93, No. 5, 52-53, doi:10.1029/2012EO050008. http://pielkeclimatesci.files.wordpress.com/2012/02/r-361.pdf

Rockel, B., C.L. Castro, R.A. Pielke Sr., H. von Storch, and G. Leoncini, 2008: Dynamical downscaling: Assessment of model system dependent retained and added variability for two different regional climate models. J. Geophys. Res., 113, D21107, doi:10.1029/2007JD009461. http://pielkeclimatesci.wordpress.com/files/2009/11/r-325.pdf

Lo, J.C.-F., Z.-L. Yang, and R.A. Pielke Sr., 2008: Assessment of three dynamical climate downscaling methods using the Weather Research and Forecasting (WRF) Model. J. Geophys. Res., 113, D09112, doi:10.1029/2007JD009216. http://pielkeclimatesci.wordpress.com/files/2009/10/r-332.pdf

Pielke Sr., R.A., 2013: Comments on “The North American Regional Climate Change Assessment Program: Overview of Phase I Results.” Bull. Amer. Meteor. Soc., 94, 1075-1077, doi:10.1175/BAMS-D-12-00205.1. http://pielkeclimatesci.files.wordpress.com/2013/07/r-372.pdf

In the past, Gavin agreed with me on the very limited value of downscaling multidecadal climate predictions. I posted his comment in a reply to a tweet by Larry Kummer.