Abstract

The demand for automatic detection of the novel coronavirus disease (COVID-19) is increasing across the globe. The exponential rise in cases burdens healthcare facilities, and a vast amount of multimedia healthcare data is being explored to find a solution. This study presents a practical solution that uses deep convolutional neural networks (CNNs) to detect COVID-19 from chest X-rays and to distinguish these images from normal X-rays and from those of patients with viral pneumonia. Three pre-trained CNN models (EfficientNetB0, VGG16, and InceptionV3) are evaluated through transfer learning. These models were selected for their balance of accuracy and efficiency, with fewer parameters suitable for mobile applications. The dataset used in the study is publicly available and compiled from different sources. Performance is measured with accuracy, recall, specificity, precision, and F1 score. The results show that the proposed approach produces a high-quality model, with an overall accuracy of 92.93% and a COVID-19 sensitivity of 94.79%. The work indicates that computer vision models can support effective COVID-19 detection and screening.


1 Introduction

The COVID-19 pandemic has generated a massive amount of multimedia healthcare data, and analysing this data is critical for technology-driven solutions. Machine learning (ML) and deep learning (DL) techniques have shown notable performance in processing such data for disease diagnosis, and many ML- and DL-based applications now improve on conventional computer-aided diagnosis systems. Deep learning models are used mainly when a large medical dataset is available, because they automatically extract features from the images to build prediction and detection models. DL methods therefore greatly reduce the data-engineering and feature-extraction effort. In particular, deep learning techniques have shown significant potential for detecting lung abnormalities in chest X-rays [1, 2].

Effective detection and screening, along with prompt medical action, are urgently needed. The reverse transcription polymerase chain reaction (RT-PCR) test is a useful screening technique for COVID-19, but it is complicated and time-consuming, with a reported accuracy of about 63% [3]. Because of complex manual testing procedures and a shortage of testing kits, infected people continue to mix with healthy people worldwide, leading to an exponential rise in active cases [4]. Severe COVID-19 infection presents as bronchopneumonia, causing fever, cough, dyspnoea, and pneumonia [4,5,6,7].

Chest X-rays of COVID-19 patients look visually similar to those of patients with viral pneumonia [8,9,10,11,12], which can sometimes lead to misdiagnosis; there have been instances of radiologists misdiagnosing such chest X-rays.

This study is significant because the transfer learning models used here, namely EfficientNetB0, InceptionV3, and VGG16, have proven suitable for practical COVID-19 detection owing to their balance of accuracy and efficiency, with fewer parameters suited to mobile networked applications [56]. The study provides substantial evidence that computer vision technology can achieve better accuracy with less human intervention when screening for COVID-19.

The rest of the article is structured as follows. Section 2 reviews the literature. Section 3 describes the methodology, including the datasets and databases, model selection, and pre-processing. Section 4 presents the performance evaluation and discussion. Section 5 concludes the paper with findings and directions for further research.

2 Literature review

Deep learning has advanced over recent years in many fields, such as big multimedia data, business analytics for medical multimedia research, and media-related healthcare data analytics [13,14,15,16,17,18, 54]. Computer-aided diagnosis (CAD) of lung diseases has been part of medical research for nearly half a century; it began with simple rule-based prediction algorithms and has since developed into ML with deep neural networks [10, 19,20,21, 53]. The extreme workload on radiologists has recently made CAD essential for analysing lung disorders [22]. Convolutional networks can now extract image features that are invisible to the naked eye [23,24,25,26], and this deep learning technique is widely acknowledged and used in research [14, 27,28,29]. In medical image analysis, [30] applied a CNN to enhance low-light images, and CNNs have also been used to identify the nature of a disease from CT and chest X-ray images. CNNs have further proven reliable for feature extraction and learning through image recognition in endoscopic videos. For chest X-ray analysis, CNNs have attracted interest because X-rays are low in cost and provide abundant training data for computer vision models. For classification, Rajkomar et al. applied GoogLeNet with data augmentation and ImageNet pre-training to classify chest X-rays by view (frontal or lateral) with 100 percent accuracy [31]. This is important evidence for deep learning in clinical image classification.

Chouhan et al. [32] implemented transfer learning with pre-trained models for pneumonia detection. Classification models for lung mapping and abnormality detection were built with a customized VGG16 transfer learning model [33]. Wang et al. [34] trained CNN models on a large training set, and Ronneberger et al. [35] used data augmentation. Accurate detection of 14 different diseases through feature extraction with deep CNN models was reported in [36]. Lakhani and Sundaram [37] achieved an AUC of 0.9 for lung disease detection through transfer learning with AlexNet and GoogLeNet. A ResNet50 model [38] achieved 96.2% accuracy. The InceptionV3 model has been used successfully to separate chest X-rays (CXRs) affected by bacterial and viral pneumonia from normal ones, with an AUC of 0.940 [39]. In a different study, an attempt to screen for and identify disorders in chest X-rays reached an area under the curve (AUC) of 0.633 [40]; a gradient visualization technique was used to localize regions of interest (ROI) with heatmaps for lung disease detection. A 121-layer deep neural network achieved an AUC of 0.768 for pneumonia identification [41]. Philipsen et al. [42] evaluated computerized chest X-ray reading for tuberculosis (TB) detection and reported an AUC of 0.93. Bharati et al. [43] proposed a hybrid deep learning framework, "VDSNet", for time-efficient lung disease diagnosis. Yoo et al. [44] proposed a deep learning-based decision tree classifier for fast COVID-19 prediction, reporting accuracies of 98%, 80%, and 95% for its three decision trees.

Table 1 presents a comparative analysis of these studies.

3 Methodology

Figure 1 shows the process of CNN-based classification for medical image-based COVID-19 detection. The classification capability of a deep convolutional neural network depends on the amount and quality of the training data; models trained on a sufficiently large dataset are observed to outperform those trained on a smaller one. Transfer learning with pre-trained weights takes a model previously trained on a larger dataset and applies it, with the necessary modifications, to a relatively small one. This reduces training time, since the model is not trained from scratch. It also reduces the load on the hardware, so training can be done on general-purpose computers such as the one used in this work. Transfer learning was implemented with the TensorFlow library. After each model was loaded, its weights were fine-tuned to suit the present dataset.

The following sections describe the dataset, the model selection, and the process of building the model architecture.

3.1 Description of dataset

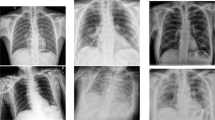

This study uses posterior-to-anterior (PA) chest X-ray images, the view radiologists most commonly use to detect pneumonia. Figure 2 shows sample X-ray images from the different classes.

The images were sourced from two collections, described below.

3.1.1 COVID-19 radiography database

Chowdhury et al. [45], in their research "Can AI help in screening Viral and COVID-19 pneumonia?", collected chest X-ray images of COVID-19-positive patients, normal subjects, and patients with viral pneumonia; the collection is publicly available on Kaggle.com.

3.1.2 Actualmed-COVID-chestxray-dataset

This collection of medical images was compiled by Actualmed, together with José Antonio Heredia Álvaro and Pau Agustí Ballester of Universitat Jaume I (UJI), for research use [46].

The models were trained on 3106 images, 16% (a fraction of 0.16) of which were used for validation. Each of the three models was then tested on 806 non-augmented images from the different categories to evaluate its performance. Table 2 details how the dataset was split.
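
The paper does not say how the 16% validation subset was drawn. One common approach is a per-class (stratified) random split, sketched below with Python's standard library only; the function name, the seed, and the per-class rounding are illustrative assumptions. For 3106 training images, a fraction of 0.16 corresponds to about 3106 × 0.16 ≈ 497 validation images and 2609 training images, though per-class rounding can shift these counts by one or two.

```python
import random
from collections import defaultdict

def stratified_split(labels, val_fraction=0.16, seed=42):
    """Split image indices into training and validation sets, class by class.

    labels: one class name per image (hypothetical input format).
    Returns (train_indices, val_indices), both sorted.
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, val = [], []
    for label in sorted(by_class):
        indices = by_class[label][:]
        rng.shuffle(indices)
        n_val = round(len(indices) * val_fraction)  # validation count for this class
        val.extend(indices[:n_val])
        train.extend(indices[n_val:])
    return sorted(train), sorted(val)

# Toy class counts for illustration only (not the counts in Table 2).
labels = ["COVID-19"] * 100 + ["Normal"] * 200 + ["Viral Pneumonia"] * 150
train_idx, val_idx = stratified_split(labels)
print(len(train_idx), len(val_idx))
```

Splitting per class keeps the class proportions of the validation set close to those of the training set, which matters when one class (here COVID-19) is smaller than the others.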

3.2 Model selection and pre-processing

These models were selected for their established significance in image classification. EfficientNet is based on the premise that better accuracy and efficiency can be achieved by balancing a network's depth, width, and input resolution; EfficientNetB0 is the baseline model of this family. EfficientNet models achieve better accuracy than earlier CNNs while using significantly fewer parameters, as shown in Fig. 3 [47].
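
The balancing idea can be made concrete with EfficientNet's compound scaling rule: depth, width, and resolution are multiplied by α^φ, β^φ, and γ^φ for a single coefficient φ, under the constraint α·β²·γ² ≈ 2, so that computation roughly doubles with each step of φ. The sketch below uses the coefficients Tan and Le report from a grid search on the B0 baseline (α = 1.2, β = 1.1, γ = 1.15). The released B1 to B7 variants use hand-rounded multipliers, so treat these numbers as illustrative rather than as the exact configurations.

```python
# Compound-scaling coefficients reported for the EfficientNet-B0 baseline.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15

def compound_scale(phi):
    """Return (depth, width, resolution) multipliers for coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi

def flops_multiplier(phi):
    """FLOPs of a conv net grow roughly with depth * width^2 * resolution^2."""
    d, w, r = compound_scale(phi)
    return d * w ** 2 * r ** 2

for phi in range(4):
    d, w, r = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution x{r:.2f}, FLOPs x{flops_multiplier(phi):.2f}")
```

Because α·β²·γ² = 1.2 × 1.21 × 1.3225 ≈ 1.92, each unit increase in φ costs roughly twice the computation, which is how the family trades accuracy against efficiency in a controlled way.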

VGG16 [48] (Fig. 4), developed in 2014, is a popular model pre-trained for image classification.

VGG16 model [48]
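
Counting VGG16's parameters shows why transfer learning usually keeps the convolutional base and replaces the classifier head. The sketch assumes the standard VGG16 layout (thirteen 3 × 3 convolutions and the original three fully connected ImageNet layers); the small 3-class head is a hypothetical example, since the exact head used in this study is not specified.

```python
# Standard VGG16 layout: integers are 3x3 conv output channels, "M" is 2x2 max-pooling.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]

def conv_base_params(cfg, in_channels=3, kernel=3):
    """Count weights and biases of the convolutional base."""
    total = 0
    for item in cfg:
        if item == "M":
            continue  # pooling layers have no parameters
        total += kernel * kernel * in_channels * item + item
        in_channels = item
    return total

def dense_params(sizes):
    """Count weights and biases of a stack of fully connected layers."""
    return sum(a * b + b for a, b in zip(sizes, sizes[1:]))

base = conv_base_params(VGG16_CFG)
# Original ImageNet head: 7x7x512 flattened features -> 4096 -> 4096 -> 1000 classes.
imagenet_head = dense_params([7 * 7 * 512, 4096, 4096, 1000])
# Hypothetical 3-class head: global average pooling (512 features) -> softmax.
small_head = dense_params([512, 3])

print(base, imagenet_head, base + imagenet_head, small_head)
# 14714688 123642856 138357544 1539
```

About 124 million of VGG16's roughly 138 million parameters sit in the ImageNet head, so discarding that head and training a small one on top of the frozen base sharply reduces what must be learned from a dataset of a few thousand X-rays.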

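The InceptionV3 network [49], developed in 2015, is shown in Fig. 5 [49]. Its main idea is to build the network from Inception modules that factorize large convolutions into smaller ones, so that fewer weights are needed. Its low computational cost makes InceptionV3 suitable for mobile applications and big data.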
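
The weight savings behind InceptionV3's factorized modules can be checked with simple arithmetic. The sketch compares a 5 × 5 convolution with two stacked 3 × 3 convolutions, and a 3 × 3 convolution with a 1 × 3 followed by a 3 × 1 convolution. It keeps the channel count fixed (an assumption made for simplicity), so the ratios do not depend on it; biases are ignored.

```python
def conv_weights(kh, kw, c_in, c_out):
    """Number of weights in one convolution (biases ignored)."""
    return kh * kw * c_in * c_out

def factorization_savings(c=64):
    """Fraction of weights saved by InceptionV3-style factorizations.

    Channel counts are kept equal to c throughout, so the ratios do not
    depend on c.
    """
    five_by_five = conv_weights(5, 5, c, c)
    two_three_by_three = 2 * conv_weights(3, 3, c, c)
    three_by_three = conv_weights(3, 3, c, c)
    asymmetric = conv_weights(1, 3, c, c) + conv_weights(3, 1, c, c)
    return {
        "5x5 -> two 3x3": 1 - two_three_by_three / five_by_five,  # 1 - 18/25
        "3x3 -> 1x3 + 3x1": 1 - asymmetric / three_by_three,       # 1 - 6/9
    }

for name, saving in factorization_savings().items():
    print(f"{name}: {saving:.0%} fewer weights")
```

The two factorizations save 28% and 33% of the weights, respectively, while keeping the same receptive field, which is the source of the network's modest computational cost.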

Image pre-processing resizes the X-ray images to a standard input size. As each model requires, the images are resized to 224 × 224 pixels and normalized according to the pre-trained model's standards.
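
"Normalized according to the pre-trained model standards" differs per model. The sketch below assumes the conventions of the `preprocess_input` functions in `tf.keras.applications`: VGG16 uses "caffe" mode (RGB to BGR, then subtract the ImageNet channel means, no scaling), InceptionV3 uses "tf" mode (scale to [-1, 1]), and EfficientNetB0's function is a pass-through because rescaling is built into the model. It is written for a single pixel in plain Python to stay framework-free; greyscale X-rays would typically be replicated into three channels first.

```python
# Per-channel ImageNet means (B, G, R) used by Keras "caffe"-mode preprocessing.
IMAGENET_BGR_MEAN = (103.939, 116.779, 123.68)

def normalize_pixel(rgb, model):
    """Normalize one RGB pixel with values in 0-255 for the given model.

    Assumed conventions (tf.keras.applications):
      vgg16           -> RGB to BGR, subtract ImageNet mean, no scaling
      inception_v3    -> scale to [-1, 1]
      efficientnet_b0 -> pass-through (the model rescales internally)
    """
    r, g, b = rgb
    if model == "vgg16":
        return tuple(c - m for c, m in zip((b, g, r), IMAGENET_BGR_MEAN))
    if model == "inception_v3":
        return tuple(c / 127.5 - 1.0 for c in (r, g, b))
    if model == "efficientnet_b0":
        return (float(r), float(g), float(b))
    raise ValueError(f"unknown model: {model}")

grey = (128, 128, 128)  # a mid-grey X-ray pixel replicated into three channels
for name in ("vgg16", "inception_v3", "efficientnet_b0"):
    print(name, normalize_pixel(grey, name))
```

Feeding a model inputs normalized differently from its pre-training data shifts the activations its frozen layers expect, so matching each model's own convention matters more in transfer learning than in training from scratch.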

Before training, the chest X-rays were augmented by rotation, scaling, and translation, with nearest-neighbour filling of the pixels left empty by these transforms, as shown in Fig. 6.
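
The paper gives neither the augmentation ranges nor the implementation; in TensorFlow this is often done with Keras's `ImageDataGenerator` and `fill_mode="nearest"`, but that is an assumption. The framework-free sketch below shows what such an augmentation does: every output pixel is mapped back through the inverse rotation, scaling, and translation, sampled from the nearest source pixel, and clamped to the closest edge pixel when it falls outside the image. The function and parameter names are hypothetical.

```python
import math

def augment(image, angle_deg=0.0, scale=1.0, shift=(0.0, 0.0)):
    """Rotate, scale and translate a 2-D greyscale image given as a list of rows.

    Every output pixel is mapped back to a source location (inverse mapping)
    and sampled with nearest-neighbour interpolation. Source coordinates that
    fall outside the image are clamped to the closest edge pixel, which is the
    "nearest" fill behaviour.
    """
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2        # rotate and scale about the centre
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    dy, dx = shift                            # shift = (rows, columns)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Undo the translation, then the rotation and the scaling.
            u, v = x - cx - dx, y - cy - dy
            src_x = (cos_t * u + sin_t * v) / scale + cx
            src_y = (-sin_t * u + cos_t * v) / scale + cy
            # Round to the nearest pixel, then clamp to the image border.
            sx = min(max(math.floor(src_x + 0.5), 0), w - 1)
            sy = min(max(math.floor(src_y + 0.5), 0), h - 1)
            row.append(image[sy][sx])
        out.append(row)
    return out

# Hypothetical example: shift a tiny 2x3 "image" one column to the right.
print(augment([[1, 2, 3], [4, 5, 6]], shift=(0, 1)))  # [[1, 1, 2], [4, 4, 5]]
```

In training, the angle, scale, and shift would be drawn at random for each image and epoch; nearest filling avoids the black borders that zero-padding introduces, which could otherwise become a spurious cue for the classifier.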

3.3 Process of model architecture

The following steps were incorporated to implement the classification model.

Following the architecture in Fig. 7, the model was fine-tuned by training some layers while keeping others frozen. In a CNN, the bottom layers capture generic features that do not depend on the classification problem, whereas the top layers capture problem-specific features. The layers in steps 3, 4, and 5 are therefore frozen, and the final layers are unfrozen after feature transfer. This unfrozen, fully connected layer is the network head and is responsible for classification. Weight decay was applied during backpropagation to reduce over-fitting. The models were trained for 25 epochs with a batch size of 18 and a base learning rate of 0.00001.
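
At the level of a single update, freezing and weight decay can be sketched without any framework: frozen parameters are skipped, and trainable ones move against the gradient plus a decay term proportional to the weight. In Keras, freezing is usually expressed by setting `layer.trainable = False` on the base layers. The parameter names and the weight-decay value below are hypothetical (the paper does not report a decay value); the learning rate, batch size, and epoch count come from the text, and the 2609 training images assume 16% of the 3106 images were held out.

```python
import math

def sgd_step(params, grads, trainable, lr=1e-5, weight_decay=1e-4):
    """One SGD update with L2 weight decay; frozen parameters are left unchanged.

    params, grads: dicts mapping a parameter name to a list of floats
    trainable: set of names that are unfrozen (for example, the new head)
    """
    updated = {}
    for name, w in params.items():
        if name not in trainable:
            updated[name] = list(w)  # frozen: keep the pre-trained weights
            continue
        g = grads[name]
        # Weight decay adds weight_decay * w to the gradient, shrinking weights toward 0.
        updated[name] = [wi - lr * (gi + weight_decay * wi) for wi, gi in zip(w, g)]
    return updated

def steps_per_epoch(n_train, batch_size):
    """Number of mini-batches per epoch (the last batch may be partial)."""
    return math.ceil(n_train / batch_size)

steps = steps_per_epoch(2609, 18)
print(steps, steps * 25)  # 145 updates per epoch, 3625 over 25 epochs
```

With a learning rate as small as 0.00001, each of these roughly 3600 updates changes the unfrozen weights only slightly, which suits fine-tuning: the head adapts to the X-ray classes without overwriting what the pre-trained layers already encode.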

4 Results and discussion

In this section, we present the multi-class classification results, followed by a brief discussion of each model's results.

A confusion matrix shows how well a model performs on new data. Equations (1) to (5) give the formulae of the performance metrics used to measure the performance of binary classification models.
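
In terms of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN), the standard definitions of the five metrics are as follows; the assignment of the numbers (1) to (5) follows the order in which the abstract lists the metrics and is an assumption.

```latex
\begin{align}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} \tag{1}\\
\text{Recall (Sensitivity)} &= \frac{TP}{TP + FN} \tag{2}\\
\text{Specificity} &= \frac{TN}{TN + FP} \tag{3}\\
\text{Precision} &= \frac{TP}{TP + FP} \tag{4}\\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \tag{5}
\end{align}
```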

The VGG16 results (Table 3) show that normal CXRs were detected with reasonable sensitivity (89%) owing to few false negatives, with a precision of 91.01%, a specificity of 93%, and an accuracy of 91.8%. The viral pneumonia class (Table 3) shows acceptable values. The COVID-19 class (Table 3) shows good specificity (90%) but low precision (68%), with an accuracy of 82.34% (Fig. 8a).

The InceptionV3 results (Table 4) show that normal CXRs were detected with good sensitivity (93%), better precision and specificity (95% and 94%), and an accuracy of 94.42%. The viral pneumonia class (Table 4) reaches an accuracy of 94%. The COVID-19 class (Table 4) shows better specificity (95%) and acceptable precision (77%), with an accuracy of 93.38% (Fig. 8b).

The EfficientNetB0 results (Table 5) show that normal CXRs were detected with very good sensitivity (94%), the highest precision and specificity (95% and 96.53%), and an accuracy of 95.53%. The viral pneumonia class (Table 5) reaches an accuracy of 95%. The COVID-19 class (Table 5) shows high specificity (96%) and reasonable precision (79%), with an accuracy of 94.79% (Fig. 8c).
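
The paper does not state how the per-class values in Tables 3 to 5 were derived from the three-class confusion matrices. A common approach, assumed here, is to score each class one-vs-rest with Eqs. (1) to (5). Because per-class accuracy then also counts the true negatives, it is typically higher than the overall multi-class accuracy. The confusion matrix in the example is invented for illustration and is not taken from the paper.

```python
def one_vs_rest_metrics(cm, labels):
    """Per-class metrics from a square confusion matrix, scored one-vs-rest.

    cm[i][j] = number of test images whose true class is labels[i]
    and whose predicted class is labels[j].
    Returns (per_class_dict, overall_accuracy).
    """
    total = sum(sum(row) for row in cm)
    results = {}
    for k, label in enumerate(labels):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                  # true class k, predicted otherwise
        fp = sum(row[k] for row in cm) - tp   # predicted k, true class otherwise
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        results[label] = {
            "accuracy": (tp + tn) / total,
            "recall": recall,
            "specificity": tn / (tn + fp) if tn + fp else 0.0,
            "precision": precision,
            "f1": (2 * precision * recall / (precision + recall)
                   if precision + recall else 0.0),
        }
    overall_accuracy = sum(cm[k][k] for k in range(len(labels))) / total
    return results, overall_accuracy

labels = ["COVID-19", "Normal", "Viral Pneumonia"]
cm = [[8, 1, 1],    # illustrative counts only
      [2, 15, 3],
      [0, 2, 18]]
per_class, overall = one_vs_rest_metrics(cm, labels)
print(per_class["COVID-19"], overall)
```

In this toy matrix the COVID-19 class has a one-vs-rest accuracy of 0.92 against an overall accuracy of 0.82, the same pattern as a per-class figure of 94.79% sitting above an overall 92.93%.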

Table 6 summarizes the overall performance parameters of the three classification models. EfficientNetB0 gives the best results.

COVID-19 images were misclassified as normal mainly when they showed little opacity in the left and right upper lobes and the suprahilar region on posterior-to-anterior X-rays, which made them look very similar to normal X-rays.

5 Conclusion and future scope

The COVID-19 pandemic clearly poses a threat to human existence, and efforts to curb the spread of the disease burden the healthcare sector. Tests to detect the disease are costly and may not reach a wider population. Deep learning methods have proven an essential aid for screening big data with greater accuracy. This study aimed to provide evidence that deep learning techniques can be applied successfully to help detect COVID-19 infection. The results confirm that deep CNN computer vision models are capable of practical implementation in the healthcare sector to screen for and detect COVID-19 from chest X-rays. Transfer learning proved beneficial in enhancing the learning capability of the models. The EfficientNetB0 model achieved the highest accuracy, 94.79%, in distinguishing COVID-19 chest X-rays from the other categories, and an overall accuracy of 92.93%. This paper provides evidence that the burden on medical facilities can be lowered through the effective use of AI technology. Implementing this technique also reduces the risk of spreading the disease and of further rises in cases, because doctors and patients need no physical contact at the screening stage. Misclassified images showed little opacity in the left and right upper lobes and the suprahilar region on posterior-to-anterior X-rays, making them very similar to normal X-rays.

These observations are based on a limited dataset, which can be expanded as more data become available for future research. The models could then be made country-specific to provide more detailed insights. The models were trained for 20 epochs, which could be increased on computer systems with greater processing capability. Other deep learning techniques and models may also be implemented to compare results for multimedia medical image screening. The models selected and implemented in this study can serve as a base for further research in this domain.

Data set link

Code availability

The experimental code is available on request.

References

Cengil, E., Çinar, A.: A deep learning based approach to lung cancer identification. International conference on artificial intelligence and data processing (IDAP), Malatya, Turkey, pp. 1–5. (2018). doi: https://doi.org/10.1109/IDAP.2018.8620723

Shorfuzzaman, M., Hossain, M.S.: MetaCOVID: a siamese neural network framework with contrastive loss for n-shot diagnosis of COVID-19 patients. Pattern Recogn. 113, 107700 (2021)

Wang, D., Hu, B., Hu, C., Zhu, F., Liu, X., Zhang, J., et al.: Clinical characteristics of 138 hospitalized patients with 2019 novel coronavirus–infected pneumonia in Wuhan, China. JAMA 323(11), 1061–1069 (2020)

Shorfuzzaman, M., Hossain, M.S., Alhamid, M.F.: Towards the sustainable development of smart cities through mass video surveillance: a response to the COVID-19 pandemic. Sustain. Cities Soc. 64, 102582 (2021)

Li, Q., Guan, X., Wu, P., Wang, X., Zhou, L., Tong, Y., et al.: Early transmission dynamics in Wuhan, China, of novel coronavirus–infected pneumonia. N. Engl. J. Med. (2020). https://doi.org/10.1056/NEJMOa2001316

Huang, C., Wang, Y., Li, X., Ren, L., Zhao, J., Hu, Y., et al.: Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet 395, 497–506 (2020)

Corman, V.M., Landt, O., Kaiser, M., Molenkamp, R., Meijer, A., Chu, D.K., et al.: Detection of 2019 novel coronavirus (2019-nCoV) by real-time RT-PCR. Eurosurveillance 25(3), 2000045 (2020)

Chung, M., Bernheim, A., Mei, X., Zhang, N., Huang, M., Zeng, X., et al.: CT imaging features of 2019 novel coronavirus (2019-nCoV). Radiology 25, 200230 (2020)

Muhammad, G., Hossain, M.S.: COVID-19 and non-COVID-19 classification using multi-layers fusion from lung ultrasound images. Inf. Fusion 72, 80–88 (2021)

Salehi, S., Abedi, A., Balakrishnan, S., Gholamrezanezhad, A.: Coronavirus disease 2019 (COVID-19): a systematic review of imaging findings in 919 patients. Am. J. Roentgenol. 215, 1–7 (2020)

Rahman, A., et al.: Adversarial examples—security threats to COVID-19 deep learning systems in medical IoT devices. IEEE Internet Things J. (2021). https://doi.org/10.1109/JIOT.2020.3013710

Rahman, M.A., Hossain, M.S.: An internet of medical things-enabled edge computing framework for tackling COVID-19. IEEE Internet Things J. (2021). https://doi.org/10.1109/JIOT.2021.3051080

Muhammad, G., Hossain, M.S., Kumar, N.: EEG-based pathology detection for home health monitoring. IEEE J. Sel. Areas Commun. 39(2), 603–610 (2021)

Verma, P., Sah, A., Srivastava, R.: Deep learning-based multi-modal approach using RGB and skeleton sequences for human activity recognition. Multimed. Syst. 26, 671–685 (2020). https://doi.org/10.1007/s00530-020-00677-2

Tan, M., Le, Q.: EfficientNet: rethinking model scaling for convolutional neural networks. In: Chaudhuri, K., Salakhutdinov, R. (eds.) Proceedings of the 36th international conference on machine learning, vol. 97 of Proceedings of machine learning research, PMLR, Long Beach, California, USA, pp. 6105–6114 (2019). URL http://proceedings.mlr.press/v97/tan19a.html

Alhussein, M., et al.: Cognitive IoT-cloud integration for smart healthcare: case study for epileptic seizure detection and monitoring. Mob. Netw. Appl. 23, 1624–1635 (2018)

Amin, S.U., Alsulaiman, M., Muhammad, G., Bencherif, M.A., Hossain, M.S.: Multilevel weighted feature fusion using convolutional neural networks for EEG motor imagery classification. IEEE Access 7, 18940–18950 (2019)

Li, W., Chai, Y., Khan, F., et al.: A comprehensive survey on machine learning-based big data analytics for IoT-enabled smart healthcare system. Mob. Netw. Appl. 1, 1–19 (2021)

Min, W., Bao, B., Xu, C., Hossain, M.S.: Cross-platform multi-modal topic modeling for personalized inter-platform recommendation. IEEE Trans. Multimed. 17(10), 1787–1801 (2015)

Hossain, M.S., Muhammad, G., Guizani, N.: Explainable AI and mass surveillance system-based healthcare framework to combat COVID-19 like pandemics. IEEE Netw. 34(4), 126–132 (2020)

Doi, K.: Computer-aided diagnosis in medical imaging: historical review, current status and future potential. Comput. Med. Imaging Graph. 31, 198–211 (2007)

van Ginneken, B., Schaefer-Prokop, C.M., Prokop, M.: Computer-aided diagnosis: how to move from the laboratory to the clinic. Radiology 261(3), 719–732 (2011)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25, 1097–1105 (2012)

Hossain, M.S., Amin, S.U., Muhammad, G., Al Sulaiman, M.: Applying deep learning for epilepsy seizure detection and brain mapping visualization. ACM Trans. Multimed. Comput. Commun. Appl. (ACM TOMM) 15, 17 (2019)

Sharma, D., Gaur, L., Okunbor, D.: Image compression and feature extraction using neural network. Allied academies international conference. academy of management information and decision sciences. Proceedings, 11:1:33 Jordan Whitney Enterprises, Inc. (2007)

Lim, M., Abdullah, A., Jhanjhi, N.Z.: Data fusion-link prediction for evolutionary network with deep reinforcement learning. Int. J. Adv. Comput. Sci. Appl. (IJACSA) (2020). https://doi.org/10.14569/IJACSA.2020.0110644

Lim, M., Abdullah, A., Jhanjhi, N., Hidden, S.M.: Link prediction in criminal networks using the deep reinforcement learning technique. Computers 8, 8 (2019). https://doi.org/10.3390/computers8010008

Hossain, M.S., Muhammad, G., Alamri, A.: Smart healthcare monitoring: a voice pathology detection paradigm for smart cities. Multimed. Syst. 25(5), 565–575 (2019)

Hossain, M.S.: Cloud-supported cyber-physical localization framework for patients monitoring. IEEE Syst. J. 11(1), 118–127 (2017)

Gómez, P., Semmler, M., Schützenberger, A., Bohr, C., Döllinger, M.: Low-light image enhancement of high-speed endoscopic videos using a convolutional neural network. Med. Biol. Eng. Comput. 57, 1451–1463 (2019)

Rajkomar, A., Lingam, S., Taylor, A.G., Blum, M., Mongan, J.: High-throughput classification of radiographs using deep convolutional neural networks. J. Digit. Imaging 30, 95–101 (2017)

Chouhan, V., Singh, S.K., Khamparia, A., Gupta, D., Tiwari, P., Moreira, C., et al.: A novel transfer learning based approach for pneumonia detection in chest X-ray images. Appl. Sci. 10, 559 (2020)

Gershgorn, D.: The data that transformed AI research—and possibly the world. (2017). https://qz.com/1034972/the-data-that-changed-thedirection-of-ai-research-and-possibly-the-world/

Wang, X., Peng, Y., Lu, L., Lu, Z., Bagheri, M., Summers, R.: Hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In: IEEE CVPR. (2017)

Ronneberger, O., Fischer, P., Brox, T.: U-Net: convolutional networks for biomedical image segmentation. In: International conference on medical image computing and computer-assisted intervention (2015)

Ho, T.K.K., Gwak, J.: Multiple feature integration for classification of thoracic disease in chest radiography. Appl. Sci. 9, 4130 (2019)

Lakhani, P., Sundaram, B.: Deep learning at chest radiography: automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology 284, 574–582 (2017)

Joaquin, A. S.: Using deep learning to detect pneumonia caused by NCOV-19 from X-Ray images. (2020). https://towardsdatascience.com/using-deep-learning-to-detect-ncov-19-from-x-ray-images-1a89701d1acd

Kermany, D.S., Goldbaum, M., Cai, W., Valentim, C.C.S., Liang, H., Baxter, S.L., McKeown, A., Yang, G., Wu, X., Yan, F., et al.: Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell 172, 1122–1131 (2018)

Wang, X., Peng, Y., Lu, L., Lu, Z., Bagheri, M., Summers, R. M.: ChestX-ray8: hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), Honolulu, HI, USA, 21–26; pp. 3462–3471. (2017)

Rajpurkar, P., Irvin, J., Zhu, K., Yang, B., Mehta, H., Duan, T., Ding, D., Bagul, A., Langlotz, C., Shpanskaya, K. et al.: CheXNet: radiologist-level pneumonia detection on chest X-rays with deep learning. (2018)

Philipsen, R.H.H.M., Sánchez, C.I., Melendez, J., Lew, W.J., van Ginneken, B.: Automated chest X-ray reading for tuberculosis in the Philippines to improve case detection: a cohort study. Int. J. Tuberc. Lung Dis. 23(7), 805–810 (2019). https://doi.org/10.5588/ijtld.18.0004

Bharati, S., Podder, P., Mondal, M.R.H.: Hybrid deep learning for detecting lung diseases from X-ray images. Inf. Med. Unlocked (2020). https://doi.org/10.1016/j.imu.2020.100391

Yoo, S.H., Geng, H., Chiu, T.L., Yu, S.K., Cho, D.C., Heo, J., Lee, H.: Deep learning-based decision-tree classifier for COVID-19 diagnosis from chest X-ray imaging. Front. Med. (2020). https://doi.org/10.3389/fmed.2020.00427

Chowdhury, M.E.H., et al.: Can AI help in screening viral and COVID-19 pneumonia? (2020). https://arxiv.org/abs/2003.13145. Accessed 23 May 2020

Data set link: https://github.com/agchung/Figure1-COVID-chestxray-dataset/tree/master/images. Accessed 1 June 2020

Ul Hassan, M.: VGG16: convolutional network for classification and detection. Neurohive (2018). Available at: https://neurohive.io/en/popular-networks/vgg16/. Accessed 10 Apr 2019

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., Wojna, Z.: Rethinking the inception architecture for computer vision. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 2818–2826 (2016)

Das, D., Santosh, K.C., Pal, U.: Truncated inception net: COVID-19 outbreak screening using chest X-rays. Phys. Eng. Sci. Med. 43(3), 915–925 (2020). https://doi.org/10.1007/s13246-020-00888-x

Cohen, J.P., et al.: Predicting COVID-19 pneumonia severity on chest X-ray with deep learning. arXiv:2005.11856v1 [eess.IV] (2020)

Oh, Y., et al.: Deep learning COVID-19 features on CXR using limited training data sets. arXiv:2004.05758v2 [eess.IV] (2020)

Rajaraman, S., et al.: Deep learning for abnormality detection in chest X-Ray images. Appl. Sci. 8, 1715 (2018). https://doi.org/10.3390/app8101715

Shorfuzzaman, M., Masud, M.: On the detection of covid-19 from chest x-ray images using cnn-based transfer learning. Computers, Materials & Continua. 64(3), 1359–1381 (2020)

Hossain, M.S., Muhammad, G.: Emotion-aware connected healthcare big data towards 5G. IEEE Internet Things J. 5(4), 2399–2406 (2018)

Wang, L., Lin, Z.Q., Wong, A.: COVID-net: a tailored deep convolutional neural network design for detection of COVID-19 cases from chest radiography images. Sci. Rep. 10(1), 1–12 (2020)

Abdulsalam, Y., Hossain, M.S.: COVID-19 networking demand: an auction-based mechanism for automated selection of edge computing services. IEEE Trans. Netw. Sci. Eng. (2020). https://doi.org/10.1109/TNSE.2020.3026637

Acknowledgements

Not applicable.

Funding

This work was supported by the Deanship of Scientific Research at King Saud University, Riyadh, Saudi Arabia, through the Vice Deanship of Scientific Research Chairs: Research Chair of Pervasive and Mobile Computing.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


Cite this article

Gaur, L., Bhatia, U., Jhanjhi, N.Z. et al. Medical image-based detection of COVID-19 using Deep Convolution Neural Networks. Multimedia Systems 29, 1729–1738 (2023). https://doi.org/10.1007/s00530-021-00794-6


DOI: https://doi.org/10.1007/s00530-021-00794-6