Are you the sort of person who annoys, frustrates, and offends lots of people on Twitter—but manages to avoid technically violating any of its policies on abuse or hate speech? Then Twitter’s newest feature is for you. Or, rather, it’s for everyone else but you.

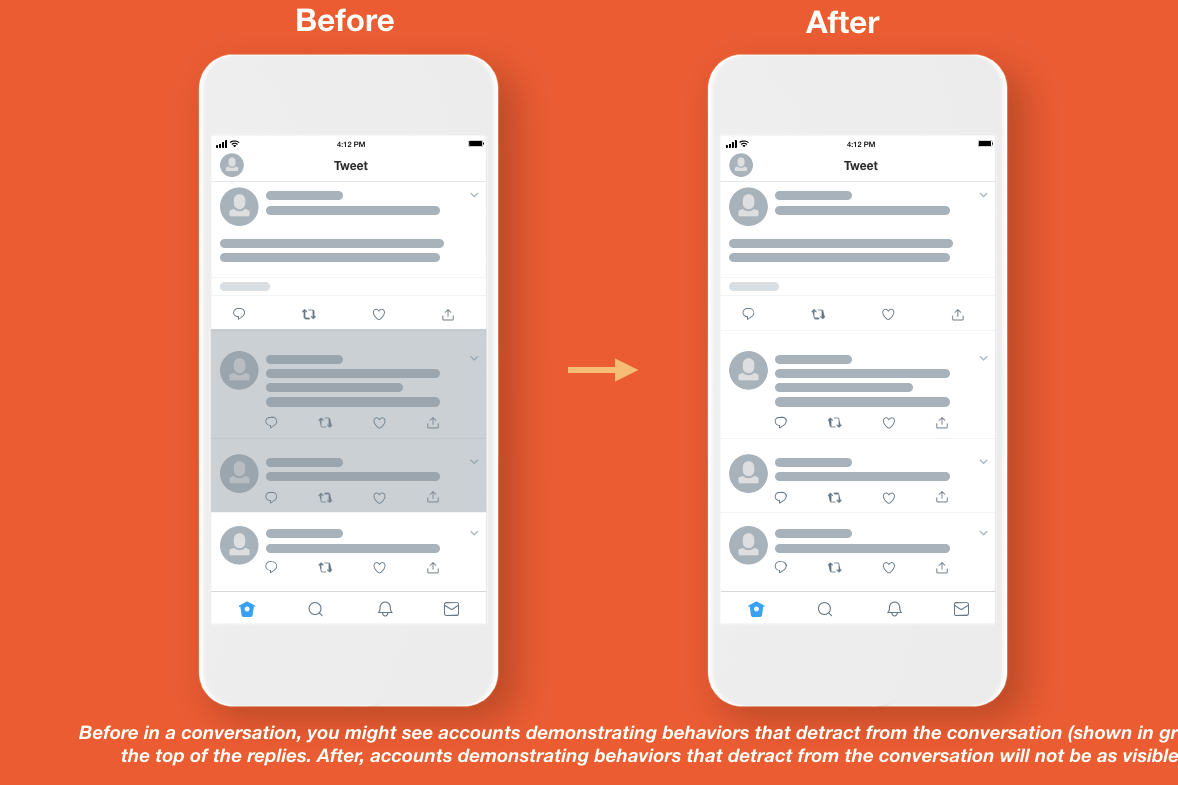

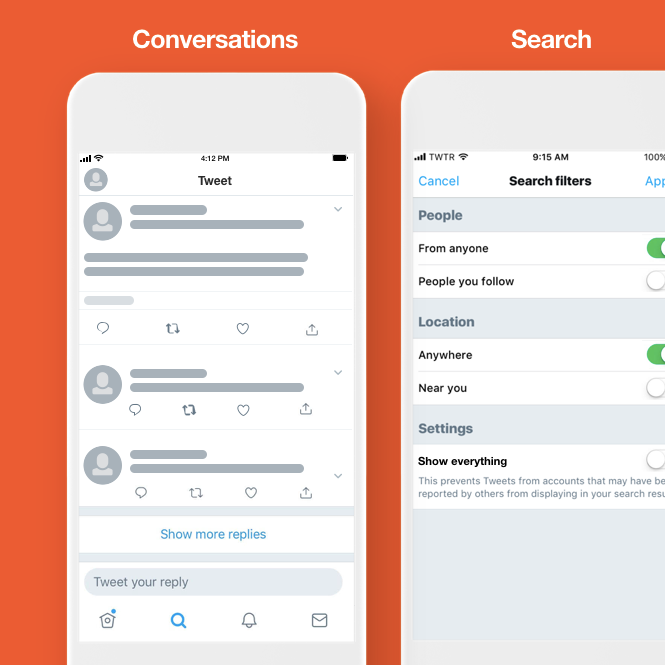

Twitter is announcing on Tuesday that it will begin hiding tweets from certain accounts in conversations and search results. To see them, you’ll have to scroll to the bottom of the conversation and click “Show more replies,” or go into your search settings and choose “See everything.” Think of them as Twitter’s equivalent of the Yelp reviews that are “not currently recommended” or the Reddit comments that have a “comment score below threshold.”

But there’s one difference: When Twitter’s software decides that a certain user is “detract[ing] from the conversation,” all of that user’s tweets will be hidden from search results and public conversations until the user’s reputation improves. And users won’t know that they’re being muted in this way; Twitter says it’s still working on ways to notify people and help them get back into its good graces. In the meantime, their tweets will still be visible to their followers as usual and can still be retweeted by others. They just won’t show up in conversational threads or search results by default.

You’ve heard of Twitter jail? Let’s call this Twitter purgatory. (Note: This is not Twitter’s preferred nomenclature, as the company’s representatives made clear to me when I suggested the term in a phone call Monday. “That kind of makes me cringe,” a spokesperson said.)

The change will affect a very small fraction of users, explained Twitter’s vice president of trust and safety, Del Harvey—much less than 1 percent. Still, the company believes it could make a significant difference in the average user’s experience. From the company’s blog post announcing the change:

> [L]ess than 1% of accounts make up the majority of accounts reported for abuse, but a lot of what’s reported does not violate our rules. While still a small overall number, these accounts have a disproportionately large—and negative—impact on people’s experience on Twitter. The challenge for us has been: how can we proactively address these disruptive behaviors that do not violate our policies but negatively impact the health of the conversation?

In early testing of the new feature, Twitter said, it saw a 4 percent drop in abuse reports in its search tool and an 8 percent drop in abuse reports in conversation threads.

It’s the first major update that Twitter has announced since CEO Jack Dorsey unleashed an epic tweetstorm on March 1 acknowledging that the service had become divisive and prone to abuse and harassment and pledging to fix it. Specifically, Dorsey said the company would work on ways to measure and improve “the health of conversation” on Twitter, and he solicited proposals from outside experts to help with that.

The problem, Dorsey suggested, was that Twitter had focused simply on enforcing its terms of service, rather than on building a framework that would promote productive conversations and dialogue. In other words, the company had been fighting its war on trolls with blunt instruments: removing tweets, suspending accounts, and banning the worst repeat offenders altogether. Now it’s looking for some subtler levers.

Twitter has received more than 230 proposals for its “conversational health” improvements, Harvey told me in a phone call Monday. It’s still in the process of reviewing those.

Meanwhile, it’s moving forward with changes like this one, which allow it to automatically demote some users’ tweets in certain contexts without the hard, manual work of suspending or banning them.

How will Twitter determine that a user is “detracting from the conversation”? Its software will look at a large number of signals, Harvey said, such as how often an account is the subject of user complaints and how often it’s blocked and muted versus receiving more positive interactions such as favorites and retweets. The company will not be looking at the actual content of tweets for this feature—just the types of interactions that a given account tends to generate. For instance, Harvey said, “If you send the same message to four people, and two of them blocked you, and one reported you, we could assume, without ever seeing what the content of the message was, that was generally a negative interaction.”
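To make Harvey’s example concrete, here is a minimal sketch of how a content-blind check like that might work. It is not Twitter’s actual software, which the company has not published. The class name, the field names, the 50 percent threshold, and the rule that negative reactions must outnumber positive ones are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class InteractionCounts:
    """Hypothetical tally of how recipients reacted to one account's messages."""
    recipients: int
    blocks: int = 0
    mutes: int = 0
    reports: int = 0
    likes: int = 0
    retweets: int = 0


def negative_share(c: InteractionCounts) -> float:
    """Negative reactions (blocks, mutes, reports) per recipient, capped at 1.0."""
    if c.recipients == 0:
        return 0.0
    negative = c.blocks + c.mutes + c.reports
    return min(negative / c.recipients, 1.0)


def looks_negative(c: InteractionCounts, threshold: float = 0.5) -> bool:
    """Flag an interaction pattern as negative without reading any tweet text.

    The threshold is an illustrative assumption, not a published Twitter value.
    """
    negative = c.blocks + c.mutes + c.reports
    positive = c.likes + c.retweets
    return negative_share(c) >= threshold and negative > positive


# Harvey's example: one message sent to four people, two blocks, one report.
example = InteractionCounts(recipients=4, blocks=2, reports=1)
print(negative_share(example))   # 0.75
print(looks_negative(example))   # True
```

Nothing in the sketch inspects what was said, only how people responded to it, which is the distinction Harvey was drawing. A real system would presumably weigh many more signals than these.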

Asked whether the change will mostly affect fake accounts and bots or real people who are behaving in aggressive and divisive ways, Harvey said it could be either. The software’s goal is to hide tweets from “accounts that are having the maximum negative impact on the conversation,” she said.

The company will work continually to improve the software, Harvey added, and to help affected users understand why their tweets are being hidden and how to change that. Twitter has not yet perfected it, she acknowledged: “This is a new step for us and a new direction in terms of expanding behavioral signals to do a better job of how we organize and present content. We want to be as transparent as possible about the fact we’re working on this and continue collecting feedback from people around what their experience is.”

The system certainly sounds imperfect. The fact that Twitter won’t notify accounts affected by this seems particularly problematic. Harvey said it’s because the company is still in the early phases of improving the software and doesn’t want to needlessly alarm people who might be affected only for a brief time. Still, disclosure seems like it would be a better way to go.

It’s also dicey any time you get into the business of suppressing people on a social network based on the output of an algorithm. That said, you could argue that this is a relatively minor form of suppression compared to the way an algorithm like Facebook’s ranks users’ entire feeds. At least on Twitter, your tweets will still be visible to all of your followers in their main feeds.

More broadly, it does make sense for Twitter to think about how it can use software to address its abuse and harassment and trolling issues. Manually reviewing tweets for clear-cut policy violations simply isn’t sufficient given the scale of the problem. If Twitter can get this newest initiative right, we’ll all be better off. And if not, well, at least it probably won’t make things much worse than they already are.