Bigger, Better Google Ngrams: Brace Yourself for the Power of Grammar

Back in December 2010, Google unveiled an online tool for analyzing the history of language and culture as reflected in the gargantuan corpus of historical texts that have been scanned and digitized as part of the Google Books project. They called the interface the Ngram Viewer, and it was launched in conjunction with a blockbuster paper in the journal Science that baptized this Big Data approach to historical analysis with the label "culturomics."

The appeal of the Ngram Viewer was immediately obvious to scholars in the digital humanities, linguistics, and lexicography, but it wasn't just specialists who got pleasure out of generating graphs showing how key words and phrases have waxed and waned over the past few centuries. Here at The Atlantic, Alexis Madrigal collected a raft of great examples submitted by readers, some of whom pitted "vampire" against "zombie," "liberty" against "freedom," and "apocalypse" against "utopia." A Tumblr feed brought together dozens more telling graphs. If nothing else, playing with Ngrams became a time suck of epic proportions.

As of today, the Ngram Viewer just got a whole lot better. For starters, the text corpus, already mind-bogglingly big, has become much bigger: The new edition extracts data from more than eight million out of the 20 million books that Google has scanned. That represents about six percent of all books ever published, according to Google's estimate. The English portion alone contains about half a trillion words, and seven other languages are represented: Spanish, French, German, Russian, Italian, Chinese, and Hebrew.

The Google team, led by engineering manager Jon Orwant, has also fixed a great deal of the faulty metadata that marred the original release. For instance, searching for modern-day brand names -- like Microsoft or, well, Google -- previously revealed weird, spurious bumps of usage around the turn of the 20th century, but those bumps have now been smoothed over thanks to more reliable dating of books.

While these improvements in quantity and quality are welcome, the most exciting change for the linguistically inclined is that all the words in the Ngram Corpus have now been tagged according to their parts of speech, and these tags can also be searched for in the interface. This kind of grammatical annotation greatly enhances the utility of the corpus for language researchers. Doing part-of-speech tagging on hundreds of billions of words in eight different languages is an impressive achievement in the field of natural language processing, and it's hard to imagine such a Herculean task being undertaken anywhere other than Google. Slav Petrov and Yuri Lin of Google's NLP group worked with a universal tagset of twelve parts of speech that could work across different languages, and then applied those tags to parse the entire corpus. (The nitty-gritty of the annotation project is described in this paper.)

A final enhancement of the Ngram Viewer is a set of mathematical operators allowing you to add, subtract, multiply, and divide the counts of Ngrams. (An "Ngram," by the way, typically hyphenated as n-gram, is a sequence of n consecutive words appearing in a text. For Google's Ngram Corpus, n can range from 1 to 5, so the maximum string that can be analyzed is five words long. The "5-grams" in A Tale of Two Cities would include "It was the best of," "was the best of times," and so forth. Capping n at five keeps the datasets from spinning out of control, and it's also handy for guaranteeing that the data extracted from the scanned books doesn't run afoul of copyright considerations, a continuing legal headache for Google.)
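For the curious, the idea of an n-gram is simple enough to sketch in a few lines of Python. This is only an illustration of the definition above, not Google's pipeline: the `ngrams` helper is a hypothetical name, and the word-splitting rule (letters and apostrophes only, punctuation dropped) is an assumption that is surely cruder than whatever tokenizer Google actually uses.

```python
import re


def ngrams(text, n):
    """Return every run of n consecutive words in text, in reading order.

    Assumption: a "word" is a run of letters or apostrophes, so punctuation
    is simply discarded. Real corpus tokenizers are more careful.
    """
    words = re.findall(r"[A-Za-z']+", text)
    # Slide a window of width n across the word list.
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]


opening = "It was the best of times, it was the worst of times"
five_grams = ngrams(opening, 5)

# The first two entries match the Dickens examples quoted above.
print(five_grams[:2])
```

A text shorter than n words yields an empty list, which is the sensible answer: it contains no sequence that long.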

Orwant, in introducing the new version on the Google blog, reckoned that these new advanced features will be of primary interest to lexicographers. "But then again," Orwant writes, "that's what we thought about Ngram Viewer 1.0," which he says has been used more than 45 million times since it was launched nearly two years ago. I was given early access to the new version, and after playing with it for a few days I can see how the part-of-speech tags and mathematical operators could appeal to dabblers as well as hard-core researchers (who can download the raw data to pursue even more sophisticated analyses beyond the pretty graphs).

Let's look at some examples. With the earlier version, you could track the rise of a word like "telephone" and its clipped form "phone." But what if you're only interested in how "telephone" and "phone" developed as verbs? The graph indicates that "telephone" held strong as a verb for much of the 20th century but is now on its way out.

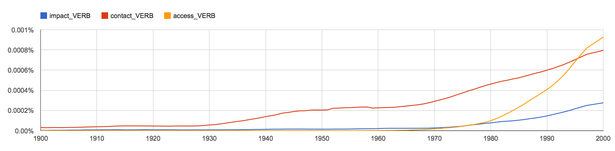

Other nouns-turned-verbs have faced resistance from traditionalists. "Contact" was long disfavored as a verb, much as some people dislike the verbing of "access" and "impact" today. The graph shows that all three verbs were nonexistent in the early decades of the 20th century (despite the anachronistic use of "contact" on Downton Abbey). After the mid-century rise of "contact," the verbs "access" and "impact" have followed suit.

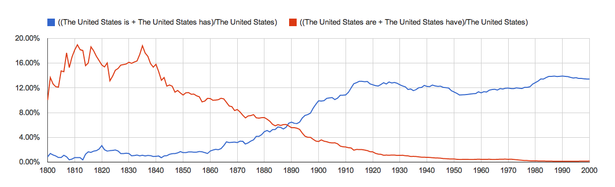

The mathematical operators are useful for aggregating different kinds of expressions and determining ratios of usage. One oft-posed question is this: When did "the United States" begin to be treated as a singular entity, agreeing with verbs like "is" and "has"? Using Google's operators, we can combine "is"/"has" usage and contrast it to "are"/"have" usage. And in both cases we can calculate the proportions of these sequences compared to overall use of "the United States." (I checked for capitalized "The United States" to avoid false matches like "The presidents of the United States are...") The graph reveals a steady rise of singular usage after the Civil War, but the plural usage didn't start losing out in the head-to-head matchup until around 1890.

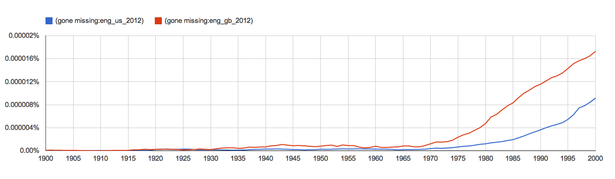
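The arithmetic behind that "United States" comparison is just adding and dividing, and can be sketched as follows. The counts here are made-up numbers for a single hypothetical year, chosen only to show the calculation; they are not drawn from the Ngram Corpus, and the `share` helper is an illustrative name rather than anything Google provides.

```python
def share(matching_counts, total_count):
    """Add up the matching counts and express them as a fraction of the total."""
    if total_count == 0:
        return 0.0  # avoid dividing by zero for years with no data
    return sum(matching_counts) / total_count


# Hypothetical counts for one year: how often "The United States" is
# followed by each verb, and how often the phrase appears overall.
followed_by = {"is": 30, "has": 10, "are": 45, "have": 15}
total_uses = 1000

singular = share([followed_by["is"], followed_by["has"]], total_uses)
plural = share([followed_by["are"], followed_by["have"]], total_uses)

print(f"singular: {singular:.1%}, plural: {plural:.1%}")
```

Repeating the calculation year by year would give the two lines being compared: one for singular agreement, one for plural.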

The Ngram Viewer also allows you to compare major slices of the corpus, like British English and American English. Here, you can see how an expression like "gone missing" has taken off in British English, with American English usage lagging a decade or so behind.

What if you wanted to look for "go missing," "goes missing," "going missing," "gone missing," and "went missing," all at once? You could use the mathematical operators to combine them, but that points to a shortcoming of the Ngram Viewer compared to some other publicly available corpus tools. With the corpora compiled by Mark Davies at Brigham Young University, such as the Corpus of Contemporary American English and the Corpus of Historical American English, it's possible to search for all the different forms of "go" at once. "Go," in other words, can be treated as a lemma, like a headword in a dictionary.
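Without lemma search, pooling the forms of "go missing" means adding the separate counts together yourself. A rough sketch of that bookkeeping, assuming you already have per-year counts for each form in hand, might look like this. The `combine_forms` helper is hypothetical and the figures are invented purely for illustration.

```python
from collections import Counter


def combine_forms(series_by_form):
    """Sum per-year counts across every inflected form of one expression."""
    combined = Counter()
    for yearly_counts in series_by_form.values():
        combined.update(yearly_counts)  # adds counts year by year
    return dict(combined)


# Invented per-year counts for three of the five forms.
go_missing = {
    "go missing": {1990: 4, 2000: 9},
    "gone missing": {1990: 6, 2000: 20},
    "went missing": {1990: 5, 2000: 14},
}

print(combine_forms(go_missing))
```

A lemma-aware corpus tool does this grouping for you, which is precisely the convenience the BYU corpora offer.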

The BYU corpus tools offer greater flexibility than the Ngram Viewer in other ways. For instance, they can be used to zero in on word combinations that appear frequently in literature, or to find out which nouns are most often modified by the adjective "personal" (a question that came up in last year's Supreme Court case about whether corporations are entitled to "personal privacy"). Google's tagset for parts of speech is also relatively coarse, compared to the elaborate tagsets that linguists often use for parsing English texts. But this coarseness is intentional, since it allows Google to apply the same grammatical categories across all the languages in the Ngram Corpus, not just English.

That broad-brush approach may pay off for Google's NLP team in the long run as it moves from parsing printed texts to parsing the Web in all of its glorious messiness. The Ngram Viewer is a supremely useful tool for both casual and serious historical research, but it's also a showcase for some cutting-edge work in converting mountains of "noisy" text into orderly streams of language data.