Cannot use GPU support in Windows 10 preview build 21327 #6617

Comments

Hello.

Clean installations refer to Windows itself in this case.

Didn't see this earlier today. I have cuda_11.2.r11.2 installed in WSL2 Ubuntu and, on the Windows 10 side, have NVIDIA driver 465.42 installed. I can compile deviceQuery, but it fails with "CUDA driver version is insufficient for CUDA runtime version." Would this issue produce that message? I suppose the answer is "all of the above" but wanted to ask anyway.

If you upgraded to build 21327 or higher you'll see this issue. If that's not the case then you might have a different issue. I'd recommend you check to see if

Thanks very much for posting this, and for clarifying. I upgraded to 21327 last night and noticed this issue today. I removed all distros and reinstalled WSL completely but see the same error. Glad to know it should be patched soon. Got an ETA?

Thanks. /dev/dxg doesn't exist.

We have created the fix internally but don't yet have an ETA for when it will hit Insiders. @patfla, it seems like you are hitting this issue. I'll post updates to this thread when we have them!

For anyone else who was struggling with the latest build to even open Settings or Explorer in order to revert to the old build: press Win+R and run Check Updates, which will list all updates. I deleted all the updates listed (this may vary for you). Once I had deleted these updates, I was able to open Settings after a restart as follows: Win+I, Update & Security > Recovery > Go back to the previous version of Windows 10. Now I'm on build 21322 and GPU support is back.

Are you sure? I just ran a fresh install of Windows 10, and there is no /dev/dxg in my Linux subsystem. I did not find a way to install this version directly, though; the last version with an installer seems to be 21286. EDIT: I think I found a way by using this website: https://www.uupdump.net/selectlang.php?id=400d4226-19cc-41a1-b4b9-7bb97adad088

Hello!

This is now fixed in Windows 10 preview build 21332! Please upgrade to that build or higher and the issue will be resolved. Thanks all for your patience.
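For anyone who wants to script a check across several machines, here is a minimal sketch of the build window described in this thread (broken for upgrades starting with 21327, fixed in 21332). The helper names `parse_build` and `in_affected_window` are hypothetical, and the check only covers the upgrade regression tracked in this issue; a missing /dev/dxg on other builds may have a different cause.

```python
import re

FIRST_AFFECTED_BUILD = 21327  # build named in this issue's title
FIRST_FIXED_BUILD = 21332     # build announced as fixed in this thread


def parse_build(build_string: str) -> int:
    """Extract the leading build number from strings such as
    '21382.co_release.210511-1416' or '21387.1'."""
    match = re.match(r"\s*(\d+)", build_string)
    if match is None:
        raise ValueError(f"no build number found in {build_string!r}")
    return int(match.group(1))


def in_affected_window(build_string: str) -> bool:
    """True if the build falls between the first affected build (inclusive)
    and the first fixed build (exclusive)."""
    build = parse_build(build_string)
    return FIRST_AFFECTED_BUILD <= build < FIRST_FIXED_BUILD
```

The build string itself has to come from the Windows side (for example from `winver`); this sketch does not try to read it.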

/fixed 21332

This bug or feature request originally submitted has been addressed in whole or in part. Related or ongoing bug or feature gaps should be opened as a new issue submission if one does not already exist. Thank you!

Hello. I’m trying to deploy a Docker container with GPU support on Windows Subsystem for Linux. These are the commands that I have issued (taken from here: https://dilililabs.com/zh/blog/2021/01/26/deploying-docker-with-gpu-support-on-windows-subsystem-for-linux/):

sudo apt-key adv --fetch-keys http://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/7fa2af80.pub
sudo sh -c 'echo "deb http://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64 /" > /etc/apt/sources.list.d/cuda.list'
sudo apt-get update
sudo apt-get install cuda-toolkit-11-0
curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -
curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list
curl -s -L https://nvidia.github.io/libnvidia-container/experimental/$distribution/libnvidia-container-experimental.list | sudo tee /etc/apt/sources.list.d/libnvidia-container-experimental.list
sudo apt-get update
sudo apt-get install nvidia-docker2 cuda-toolkit-11-0 cuda-drivers
sudo service docker start

I’m not able to run this Docker container:

docker run --rm --gpus all nvidia/cuda:11.0-cudnn8-devel-ubuntu18.04
Unable to find image 'nvidia/cuda:11.0-cudnn8-devel-ubuntu18.04' locally

In addition:

root@DESKTOP-N9UN2H3:/mnt/c/Program Files/cmder# nvidia-smi
NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.
Failed to properly shut down NVML: Driver Not Loaded

I’m using Windows 10 build 21376.co_release.210503-1432. On the host I have installed NVIDIA driver version 470.14, and inside WSL2 I have Ubuntu 20.04.
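One thing worth checking in the commands above: they use `$distribution`, but nothing quoted in the comment sets it, so if it was empty in that shell the two repository URLs would be malformed. As far as I recall, NVIDIA's guide builds the value from the `ID` and `VERSION_ID` fields of /etc/os-release. A minimal sketch of that derivation follows; the function name `distribution_from_os_release` is hypothetical, and the parsing is deliberately simple rather than a full os-release parser.

```python
def distribution_from_os_release(text: str) -> str:
    """Build a string like 'ubuntu20.04' from the contents of /etc/os-release.

    Assumption: the value wanted for $distribution is ID followed by
    VERSION_ID, with surrounding quotes removed.
    """
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not KEY=VALUE.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"').strip("'")
    return fields["ID"] + fields["VERSION_ID"]


# Typical use inside the WSL distro:
#     with open("/etc/os-release") as handle:
#         print(distribution_from_os_release(handle.read()))
```

If the printed value is what you expect (for Ubuntu 20.04 that would be `ubuntu20.04`), export it as `distribution` before running the two `curl` commands.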

I have exactly the same issue as above, on Windows build 21382.co_release.210511-1416.

I am having this issue as well on build 21387.1 |

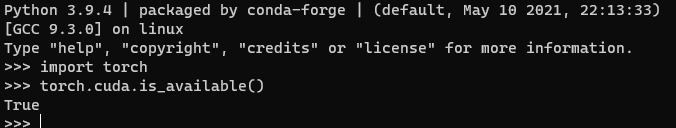

Hey y'all, I had a similar issue running build 21390. Turns out there was an update missing for WSL. When you check for updates, make sure "receive updates from other Microsoft products" is enabled. Also, hit the check for updates button a few times until it takes a while, so you know it really went to check whether something was new or missing. After installing the update and restarting WSL, /dev/dxg was there, and running deviceQuery from the CUDA samples was successful. I can also use the GPU in PyTorch, which is awesome, thanks.
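For the PyTorch part, a quick way to confirm the GPU is usable from inside the distro is `torch.cuda.is_available()`. A minimal sketch, assuming PyTorch may or may not be installed; the wrapper name `pytorch_sees_gpu` is hypothetical.

```python
def pytorch_sees_gpu():
    """Return True/False from torch.cuda.is_available(),
    or None when PyTorch is not installed in this environment."""
    try:
        import torch
    except ImportError:
        return None
    return torch.cuda.is_available()


if __name__ == "__main__":
    print("PyTorch sees a CUDA GPU:", pytorch_sees_gpu())
```

A result of `False` only says that PyTorch could not initialise CUDA; it does not tell you whether the cause is the missing /dev/dxg device, the driver, or the PyTorch build.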

I'll try updating again and see what happens. |

Let me know how it goes, zakkaria.

Which tutorial did you use for setting up CUDA? I can't find one that's worked so far.

@spriggsyspriggs it really wasn't just one, unfortunately. Mainly, I followed:

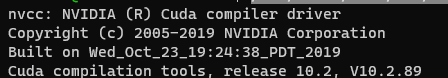

The rest I was kind of picking up here and there. For example, after I made sure that I had strictly followed NVIDIA's guide, I needed a way to check the driver version in WSL2.

For the toolkit I installed 11.3, 11.1 and 10.2: the NVIDIA doc asked for 11.1, the previous nvidia-smi command showed that my driver version was 11.3, and, finally, I installed 10.2 because I read here that 11.1 alone wouldn't cut it, as some libraries were missing.

To restart WSL, first open PowerShell, then run

To run samples, the NVIDIA doc talks about the BlackScholes application. I had better results running the deviceQuery sample, as it already checks the runtime and driver API versions for you and presents great details when the application runs successfully. By the way, I'm running CUDA Runtime 10.2. This is fine as long as the runtime is not higher than the driver; otherwise you might get the "CUDA driver version is insufficient for CUDA runtime version" error.

Even with all of this, deviceQuery was failing. So, having ruled out everything else, I started to look into issues specific to Windows builds, and trust me, there are plenty, but none specific to my version. That is how I arrived at this issue with /dev/dxg, and by doing what I described previously, the issue was solved. Here is the output for some of the commands I've been mentioning throughout this answer:
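The runtime-versus-driver rule mentioned in this comment can be written down as a small comparison. This is a rough sketch of that rule of thumb only, not NVIDIA's full compatibility policy; the function names are hypothetical, and the version strings are assumed to look like "10.2" or "11.3".

```python
def parse_version(version: str) -> tuple:
    """Turn '11.3' (or '11.3.0') into (11, 3); only major and minor are kept."""
    parts = version.strip().split(".")
    return int(parts[0]), int(parts[1])


def runtime_fits_driver(runtime_version: str, driver_cuda_version: str) -> bool:
    """True when the CUDA runtime is not newer than the CUDA version
    the driver reports (the value nvidia-smi shows as 'CUDA Version')."""
    return parse_version(runtime_version) <= parse_version(driver_cuda_version)
```

With the numbers from this comment, `runtime_fits_driver("10.2", "11.3")` is true, which matches deviceQuery eventually passing once /dev/dxg was present.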

I tried everything and I cannot make it work. OS build: 22000.120

@utiq please open a new issue for this if you're experiencing this problem.

@craigloewen-msft I solved it by recompiling the WSL kernel from https://github.com/microsoft/WSL2-Linux-Kernel using the branch linux-msft-wsl-5.10.y, in case anyone has the same problem.

Notes from the dev team

Hey everyone, we're filing this issue on ourselves to track the fact that GPU compute support is unavailable in preview build 21327. This will affect any users who upgrade to this build but will not affect clean installations. We've identified a fix for this bug and have checked it in, but we need to wait for it to be validated internally before releasing it as part of the Windows Insiders program. We estimate that it will be available within the next couple of Insider builds. Thanks for your patience here!

Environment

Steps to reproduce

Trying to use any Linux application that leverages the GPU on Windows will not work. Additionally /dev/dxg is not visible.

Expected behavior

I should be able to use Linux applications that leverage the GPU.

Actual behavior

The /dev/dxg device is not available in my Linux distros.
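To check for the symptom described here from inside a distro, it is enough to test whether the device node exists. A minimal sketch; the function name `gpu_device_visible` is hypothetical, and the path is the /dev/dxg node named in this issue.

```python
import os


def gpu_device_visible(path: str = "/dev/dxg") -> bool:
    """Return True if the GPU paravirtualization device node is present."""
    return os.path.exists(path)


if __name__ == "__main__":
    if gpu_device_visible():
        print("/dev/dxg is present")
    else:
        print("/dev/dxg is missing; GPU compute will not work in this distro")
```

The presence of the node is necessary but not sufficient: the thread also has reports where the driver and CUDA runtime versions had to line up before deviceQuery passed.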
