Computed tomography–based COVID–19 triage through a deep neural network using mask–weighted global average pooling

- 1Department of Radiology, the Fifth Medical Center of Chinese PLA General Hospital, Beijing, China

- 2Algorithm Center, Keya Medical Technology Co., Ltd, Shenzhen, China

Background: There is an urgent need to find an effective and accurate method for triaging coronavirus disease 2019 (COVID-19) patients from millions or billions of people. Therefore, this study aimed to develop a novel deep-learning approach for COVID-19 triage based on chest computed tomography (CT) images, including normal, pneumonia, and COVID-19 cases.

Methods: A total of 2,809 chest CT scans (1,105 COVID-19, 854 normal, and 850 non-COVID-19 pneumonia cases) were acquired for this study and divided into a training set (n = 2,329) and a test set (n = 480). A U-net-based convolutional neural network was used for lung segmentation, and a mask-weighted global average pooling (GAP) method was proposed for the deep neural network to improve the classification of COVID-19 against normal or common pneumonia cases.

Results: Lung segmentation reached a Dice value of 96.5% on 30 independent CT scans. The mask-weighted GAP method achieved COVID-19 triage with a sensitivity of 96.5% and a specificity of 87.8% on the test dataset, which were 0.9% and 2% higher, respectively, than those of the normal GAP. In addition, fusion images combining the CT images with the areas highlighted by the deep learning model using the Grad-CAM method were drawn; they indicated the lesion regions detected by the model and could be confirmed by radiologists.

Conclusions: This study proposed a mask-weighted GAP-based deep learning method and obtained promising results for COVID-19 triage based on chest CT images. Furthermore, it can be considered a convenient tool to assist doctors in diagnosing COVID-19.

Introduction

At the beginning of 2020, coronavirus disease 2019 (COVID-19) spread rapidly worldwide. The symptoms of COVID-19 infection are similar to those of common pneumonia, such as pneumonia caused by bacteria and influenza viruses, and mainly include fever, malaise, dry cough, and sore throat (Guan et al., 2020; Jiang et al., 2020). Early detection and diagnosis of patients with COVID-19 infection can greatly hinder the spread of the disease and alleviate the patient’s symptoms. Therefore, there is an urgent need to find an effective and accurate method for distinguishing COVID-19 patients from those with common pneumonia.

Currently, diagnosing COVID-19 relies largely on reverse transcription-polymerase chain reaction (RT-PCR) testing of samples from the throat (Ai et al., 2020). However, RT-PCR for COVID-19 diagnosis has some limitations: the test is not universally available, turnaround times can be lengthy, and the reported sensitivities vary. Patients with respiratory symptoms who do not have a confirmed diagnosis of COVID-19 may undergo computed tomography (CT) for different indications, including the diagnosis of suspected pneumonia. CT imaging is another critical tool in the initial screening of COVID-19 pneumonia, serves as an alternative or adjunct to RT-PCR diagnosis, and plays a vital role in early detection, observation, and evaluation of the disease (Bao et al., 2020; Ye et al., 2020; Garg et al., 2022). However, a chest CT scan usually consists of approximately 100 slices, and it is very time-consuming for radiologists to check each slice for signs of COVID-19. With the rapid spread of the COVID-19 virus and the large increase in CT data, the development of a computer-aided detection system with artificial intelligence to assist radiologists in the diagnosis of COVID-19 patients has become an urgent and necessary task.

Therefore, this study aims to propose a mask-weighted global average pooling (GAP)-based deep learning method for COVID-19 triage based on chest CT images. COVID-19 triage is a slightly different problem from common classification problems (Jaipurkar et al., 2018; Wodzinski et al., 2019; Prakash et al., 2022; Ker et al., 2019). Conventional CNNs perform convolution operations in the lower layers of the network and feed the last convolution layer’s feature maps into fully connected layers, followed by a softmax logistic regression layer, for classification. This structure bridges the convolutional structure with traditional neural network classifiers: the convolutional layers act as feature extractors, and the resulting features are classified in the traditional way. However, fully connected layers have many parameters, and GAP layers were proposed to replace them (Li et al., 2022; Bao et al., 2023). General GAP calculates the mean value of each entire feature map. Whereas a fully connected layer takes every pixel of the feature maps as input for the classifier, GAP reduces each feature map to a single value as input for the classifier. Therefore, the number of parameters and the model complexity are significantly reduced.

In medical images, the pixel information is closely associated with concrete clinical structures. Therefore, GAP with different weight factors for different tissues can help reduce the interference of background noise. In a medical image classification task, the entire image is sometimes not required, and a specified organ region of the image is sufficient. In COVID-19 triage in particular, all COVID-19-related suspicious findings are in the lung region, so this clinical information is particularly useful for the triage problem.

In this paper, we developed an AI algorithm using a mask-weighted GAP method for COVID-19 triage. The main contributions of this paper are summarized as follows:

1. We developed a computer-aided diagnostic algorithm with a 3D convolutional neural network to achieve the diagnosis of COVID-19 patients from common pneumonia based on chest CT images.

2. A mask-weighted GAP method, which uses the segmented lung region mask to reduce the interference of the non-lung region in COVID-19 classification, was proposed to improve the accuracy of COVID-19 triage.

3. The amount of data used in this study is large, containing a total of 2,809 cases and covering several public datasets and a private dataset.

Related work

During the past two years, many classifications and segmentation deep learning algorithms have been developed to assist radiologists in COVID-19 identification (Harmon et al., 2020; Islam et al., 2020; Fan et al., 2022; Shaik and Cherukuri, 2022) and severity qualification (Li et al., 2020; Qin et al., 2020).

Islam et al. (2020) proposed a deep learning-based system combining a convolutional neural network (CNN) and a long short-term memory (LSTM) network to detect COVID-19 automatically from radiographs. In the proposed system, the CNN was used for feature extraction, and the LSTM was used to classify COVID-19 based on these features. This can help doctors diagnose and treat COVID-19 patients more easily. Harmon et al. (2020) developed and evaluated an AI algorithm for the detection of COVID-19 on chest CT using data from a globally diverse, multi-institution dataset. Fan et al. (2022) built a parallel bi-branch model (Trans-CNN Net) based on a Transformer module and a CNN module, making full use of the local feature extraction capability of the CNN and the global feature extraction advantage of the Transformer. Bosowski et al. (2021) introduced deep ensembles that benefit from a wide range of architectural advances, alongside a new fusing approach, to deliver accurate predictions of COVID-19 cases on a number of chest X-ray image datasets.

Li et al. (2020) developed a fully automated artificial intelligence system to quantitatively assess the disease severity and progression of COVID-19 using thick-section chest CT images. Qin et al. (2020) developed a predictive model and scoring system to enhance the diagnostic efficiency for COVID-19, in which CT features and scores were evaluated at the lung segment level according to the lesion position, attenuation, and form. In most of these studies, the disease severity and progression of COVID-19 have been assessed.

The methods above applied AI techniques to the detection and evaluation of COVID-19 pneumonia based on medical imaging data of different modalities and achieved good performance. However, these methods have some shortcomings: some studies used a small amount of data from a single source, which makes it difficult to verify the generalization ability of the model, and others used non-CT data, such as X-ray images, which have low sensitivity in clinical applications. Inspired by the above-mentioned research, this study proposed a mask-weighted global average pooling-based deep learning method for COVID-19 triage based on chest CT images.

Material and methods

Patients

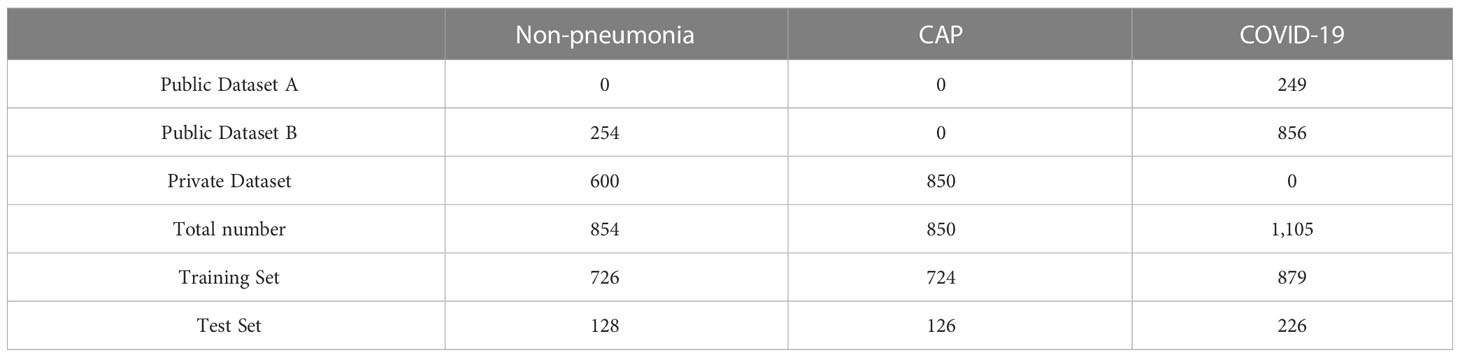

This study included two types of datasets: public and private. The public data came from two sources: the COVID-19 lung CT lesion segmentation challenge (An et al., 2020; Roth et al., 2022) (https://covid-segmentation.grand-challenge.org/Data/) and MosMeddata (Morozov et al., 2020) (https://www.kaggle.com/datasets/andrewmvd/mosmed-covid19-ct-scans). The first public dataset contained images of 249 COVID-19 patients, while the second contained images of 856 COVID-19 patients and 254 non-pneumonia patients (n = 1,110). The private dataset was acquired from our hospital and contained images of 850 pneumonia patients and 600 normal patients.

These data were mixed, and a total of 2,809 scans were used in this study, including 854 normal, 850 common pneumonia, and 1,105 COVID-19 cases. For each category, 15%-20% of the scans were randomly selected as the test set and the remaining as the training set. To verify the performance of the COVID-19 triage, we made the test set have roughly the same number of CT scans between COVID-19 and non-COVID-19 patients. Information on these datasets is presented in Table 1.
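The per-category random selection described above can be sketched as a stratified split. This is a minimal illustration, not the authors' code: the function name `stratified_split`, the random seed, and the per-class test fractions (picked within the stated 15%-20% range) are assumptions.

```python
import random


def stratified_split(labels, test_fraction, seed=0):
    """Split indices into train/test, sampling a fixed fraction per class."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    train, test = [], []
    for label, indices in by_class.items():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction[label])
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


# Class sizes as reported in the text; the fractions are hypothetical.
labels = ["covid"] * 1105 + ["normal"] * 854 + ["pneumonia"] * 850
fractions = {"covid": 0.20, "normal": 0.15, "pneumonia": 0.15}
train_idx, test_idx = stratified_split(labels, fractions)
```

Sampling within each class keeps the class proportions of the test set under control, which is what allows the test set to be balanced between COVID-19 and non-COVID-19 scans.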

Workflow

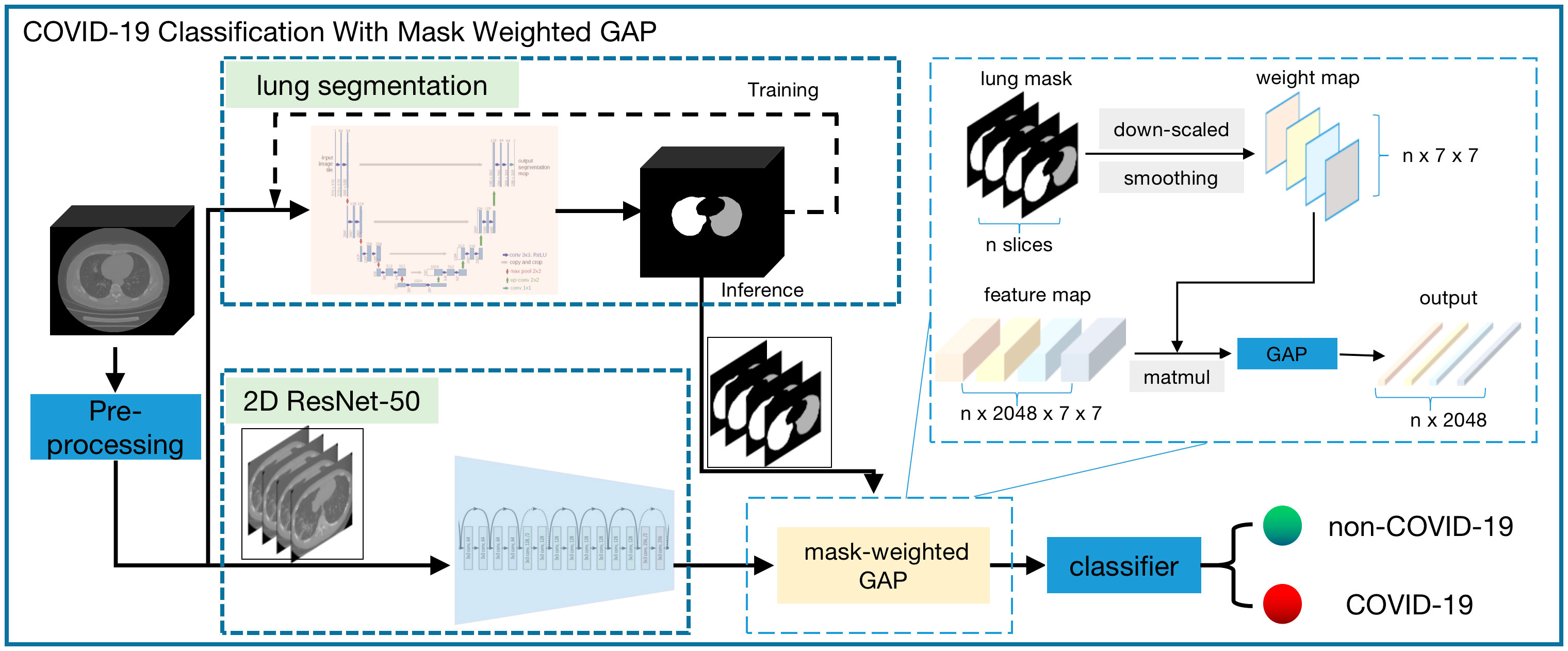

The workflow chart of the COVID-19 triage algorithm is illustrated in Figure 1. First, pre–processing was performed on all the data. Second, segmentation of the lung region and cutting out of the lung region mask and CT image were performed. Third, according to the lung region mask and CT image, a deep neural network was used to perform the COVID-19 classification.

Figure 1 Workflow chart of the whole COVID-19 triage algorithm. It comprises three modules: pre-processing, lung segmentation, and classification. A 3D U-Net model was used for lung segmentation, and the input of this module is the isotropic volume produced by the pre-processing module. The isotropic image cropped to the lung mask region is the input of the classification module. Before the classifier, a mask-weighted GAP is applied to the feature maps extracted by the ResNet-50 model.

CT image pre-processing

Because the data had different spacings and sizes, the first pre-processing step was image resampling to obtain an isotropic volume, in which the voxel spacing was the same in the x, y, and z directions. Subsequently, to reduce the variation between different datasets, we calculated each scan’s mean and standard deviation and applied Z-score normalization to the images.
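The two pre-processing steps can be illustrated with a small sketch. It only computes the grid size that isotropic resampling would produce and applies per-scan Z-score normalization to a flat list of intensities; the interpolation itself is omitted. The function names and the 1.0 mm target spacing are assumptions, since the text does not state the target spacing.

```python
import statistics


def isotropic_shape(shape, spacing, target_spacing=1.0):
    """Grid size after resampling to the same spacing along every axis."""
    return tuple(
        max(1, round(n * s / target_spacing)) for n, s in zip(shape, spacing)
    )


def zscore(values):
    """Normalize one scan's intensities to zero mean and unit variance."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:  # constant image: nothing to scale
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


# Hypothetical scan: 512 x 512 x 100 voxels at 0.7 x 0.7 x 2.5 mm spacing.
new_shape = isotropic_shape((512, 512, 100), (0.7, 0.7, 2.5))
normalized = zscore([-1000.0, -800.0, 40.0, 60.0])
```

Normalizing each scan with its own statistics removes scanner-dependent offsets and scale differences before the volumes from different sources are mixed.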

Lung segmentation

Lung segmentation was based on the CNN method using the U-Net model (Kalpana et al., 2022; Papetti et al., 2022). There are two paths in the model network: the left path, which encodes the image features, and the right path, which decodes the features and localizes the target tissue. The left, contracting path follows the typical architecture of a convolutional network. It consists of the repeated application of two 3 × 3 × 3 convolutions, each followed by a rectified linear unit (ReLU), and a 2 × 2 × 2 max pooling operation with stride 2 for downsampling. Every step in the right, expansive path consists of an up-sampling of the feature map, followed by a 2 × 2 × 2 convolution that halves the number of feature channels, concatenation with the correspondingly cropped feature map from the contracting path, and two 3 × 3 × 3 convolutions, each followed by a ReLU. Herein, the input of our three-dimensional (3D) U-net model was the output of the pre-processing step, which was the resampled isotropic volume with a size of 128 × 128 × 128. The output of the 3D U-net model was the segmentation mask of the lung region.

The training dataset for lung segmentation was an open dataset named LUNA16 (Armato et al., 2011), which can be accessed on the LUNA16 website. LUNA16 has 888 volumes of lung data, with slice thicknesses of no more than 2.5 mm. Considering the application scenario and the cost of manual labeling, a lung segmentation of moderate accuracy is sufficient for COVID-19 classification. Therefore, we randomly selected 300 volumes of data for training and 30 for validation. The same pre-processing method was applied to these data, which also reduced both the graphics processing unit (GPU) memory requirement and the training time.

COVID-19 classification using mask-weighted GAP

The COVID-19 classification method was based on the residual network (ResNet) (Kibriya and Amin, 2022; Malik et al., 2022; Suganya and Kalpana, 2023), which achieved state-of-the-art performance in 2015, winning first place in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) classification, localization, and detection tasks and in the Common Objects in Context (COCO) detection and segmentation tasks.

A conventional ResNet is designed for single-image classification. However, our input was a series of images, and these 3D CT images can be used in two ways. One method uses 3D convolution and modifies the original ResNet into a 3D ResNet; the other uses two-dimensional (2D) convolution on the series of images and combines the resulting 2D output features. Herein, we adopted the second method, considering the sparse information on suspected COVID-19 and the large memory usage of 3D convolution. In our classification method, the input CT image and mask were first resized to 224 × 224, and the ResNet model output feature maps with a size of 2048 × 7 × 7 for each image. Then, a mask-weighted GAP was applied to the feature maps before the classifier layer. Inspired by the idea of attention, we increased the weight factors of lung regions closely related to the COVID-19 classification during the GAP operation, to improve the sensitivity to lesions in the lung region and reduce the interference of background noise. The mask-weighted GAP calculation is shown in Equation (1).
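Written out from the definitions given in the next paragraph, a form of the mask-weighted GAP consistent with that description is sketched below. The channel index $c$ and the spatial indices $(i, j)$ over the 7 × 7 feature map are notation assumed here for illustration.

$$
I_{\mathrm{featureweightedGAP}}(c) = \sum_{i=1}^{7} \sum_{j=1}^{7} I_{\mathrm{smoothedmask}}(i, j)\, I_{\mathrm{feature}}(c, i, j),
\qquad
\sum_{i=1}^{7} \sum_{j=1}^{7} I_{\mathrm{smoothedmask}}(i, j) = 1
$$

With uniform weights $I_{\mathrm{smoothedmask}}(i, j) = 1/49$, the sum reduces to the conventional GAP, that is, the plain mean of each feature map.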

$I_{\mathrm{feature}}$ is the output feature map from the last convolution layer of ResNet50. $I_{\mathrm{smoothedmask}}$ is the lung region mask, downscaled to 7 × 7, smoothed with a Gaussian filter, and finally normalized so that its pixel values sum to 1. The downscaling operation makes the lung mask the same size as the final feature map, and the smoothing operation makes the weighting factor vary smoothly with spatial position. The conventional GAP takes the unweighted average of $I_{\mathrm{feature}}$, whereas the mask-weighted GAP combines the weights from $I_{\mathrm{smoothedmask}}$ with $I_{\mathrm{feature}}$ to obtain the final value $I_{\mathrm{featureweightedGAP}}$. If the weighted mask values were all the same, the result would equal the conventional GAP; in practice, the weighting values in the lung region are larger than those in the non-lung region, which guarantees that the feature focuses on the lung region containing the suspected COVID-19.
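The pooling step described above can be sketched in plain Python. This is an illustrative approximation rather than the authors' implementation: block averaging is assumed for the downscaling, a 3 × 3 binomial blur stands in for the Gaussian smoothing (whose parameters are not given), and all function names are hypothetical.

```python
def downscale_mask(mask, out_size=7):
    """Block-average a square 0/1 mask down to out_size x out_size."""
    block = len(mask) // out_size
    return [
        [
            sum(mask[bi * block + di][bj * block + dj]
                for di in range(block) for dj in range(block)) / (block * block)
            for bj in range(out_size)
        ]
        for bi in range(out_size)
    ]


def smooth(grid):
    """3 x 3 binomial blur, a small stand-in for Gaussian smoothing."""
    kernel = (1, 2, 1)
    n = len(grid)
    out = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii = min(max(i + di, 0), n - 1)  # replicate edge pixels
                    jj = min(max(j + dj, 0), n - 1)
                    acc += kernel[di + 1] * kernel[dj + 1] * grid[ii][jj]
            out[i][j] = acc / 16.0
    return out


def normalize(grid):
    """Scale the weights so that they sum to 1."""
    total = sum(sum(row) for row in grid)
    n = len(grid)
    if total == 0:  # empty mask: fall back to uniform weights (plain GAP)
        return [[1.0 / (n * n)] * n for _ in range(n)]
    return [[v / total for v in row] for row in grid]


def mask_weighted_gap(features, weights):
    """features: C x H x W nested lists; weights: H x W summing to 1."""
    return [
        sum(w * f for w_row, f_row in zip(weights, channel)
            for w, f in zip(w_row, f_row))
        for channel in features
    ]


# Toy 224 x 224 mask with a central square standing in for the lungs.
mask = [[1 if 56 <= r < 168 and 56 <= c < 168 else 0 for c in range(224)]
        for r in range(224)]
weights = normalize(smooth(downscale_mask(mask)))

# Two toy 7 x 7 channels instead of the 2048 channels used in the paper.
features = [
    [[3.0] * 7 for _ in range(7)],
    [[float(i * 7 + j) for j in range(7)] for i in range(7)],
]
pooled = mask_weighted_gap(features, weights)
uniform = normalize([[1.0] * 7 for _ in range(7)])
plain_gap = mask_weighted_gap(features, uniform)  # equals the plain mean
```

Because the weights sum to 1, a constant feature map pools to the same constant, and uniform weights reproduce the conventional GAP. Only the relative emphasis between lung and non-lung positions changes.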

In the COVID-19 classification method, the model’s input was CT image and lung region masks according to the results of the lung segmentation model. The model’s output was the probability of each case being predicted as COVID-19.

Statistical analysis

We compared the classification performance using several metrics such as accuracy, sensitivity, specificity, F1 score, and area under the curve (AUC) (Lu et al., 2023).

The accuracy of a test is its ability to differentiate between positive and negative cases correctly. The sensitivity of a test is its ability to identify positive cases correctly. The specificity of a test is its ability to identify negative cases correctly. The F1 score is the harmonic mean of precision and recall.

True positive (TP) is the number of cases correctly predicted as positive, true negative (TN) is the number of cases correctly predicted as negative, false positive (FP) is the number of cases incorrectly predicted as positive, and false negative (FN) is the number of cases incorrectly predicted as negative.
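In terms of these four counts, the metrics take their standard forms, restated here for reference (precision is included because the F1 score depends on it, and recall is the same quantity as sensitivity):

$$
\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
\mathrm{Sensitivity} = \frac{TP}{TP + FN}, \qquad
\mathrm{Specificity} = \frac{TN}{TN + FP}
$$

$$
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad
F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Sensitivity}}{\mathrm{Precision} + \mathrm{Sensitivity}} = \frac{2\,TP}{2\,TP + FP + FN}
$$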

The AUC is an index used to measure the performance of a classifier. The AUC provides a method to measure the accuracy of a diagnostic test. The larger the area, the more accurate the diagnostic test. The AUC of the receiver operating characteristic (ROC) curve can be measured using the following equation (6), where t = (1 - specificity) and ROC (t) is sensitivity.
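With $t$ and $\mathrm{ROC}(t)$ as defined above, the area under the ROC curve is the standard integral shown below. The second expression is the usual trapezoidal approximation over $n + 1$ sampled operating points $t_0 = 0 < t_1 < \dots < t_n = 1$; this discretization is an assumption, as the text does not say how the area was computed.

$$
\mathrm{AUC} = \int_{0}^{1} \mathrm{ROC}(t)\, dt \approx \sum_{k=1}^{n} \frac{\left(t_k - t_{k-1}\right)\left(\mathrm{ROC}(t_k) + \mathrm{ROC}(t_{k-1})\right)}{2}
$$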

For the segmentation task, the validation metric is usually the Dice coefficient (DC). The DC measures the spatial overlap between two segmentation regions and is calculated as twice the area of overlap divided by the total number of pixels in the two regions. The larger the DC value, the better the segmentation result. Therefore, DC was adopted to measure the lung segmentation performance in this study.
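The definition above translates directly into a few lines of code. The sketch below works on flattened binary masks; the function name and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
def dice_coefficient(pred, truth):
    """Dice coefficient of two equally sized flat sequences of 0/1 labels."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    overlap = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if total == 0:  # both masks empty: treat as perfect agreement
        return 1.0
    return 2.0 * overlap / total


# Toy masks: 3 foreground pixels each, 2 of them shared.
predicted = [1, 1, 1, 0, 0, 0]
reference = [0, 1, 1, 1, 0, 0]
score = dice_coefficient(predicted, reference)  # 2 * 2 / (3 + 3)
```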

Results

All experiments in this study were performed on Ubuntu 20.04. The model was trained using the deep learning framework PyTorch 1.8.1 and CUDA 11.4. All experiments were run on one NVIDIA DGX Station with four NVIDIA Tesla V100 DGXS 32GB GPUs and one Intel(R) Xeon(R) E5-2698 CPU. The model was trained using the Adam optimizer with an initial learning rate of 1e-5, and the learning rate was adjusted using warm-up and CosineAnnealingLR.
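The shape of such a schedule can be sketched without any framework. The function below combines a linear warm-up with the closed-form cosine annealing curve; the warm-up length of 5 epochs, its linear shape, and the minimum learning rate of 0 are assumptions, as the text gives only the initial learning rate of 1e-5.

```python
import math


def learning_rate(epoch, total_epochs, base_lr=1e-5, warmup_epochs=5, min_lr=0.0):
    """Linear warm-up to base_lr, then cosine decay towards min_lr."""
    if epoch < warmup_epochs:
        return base_lr * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


# Per-epoch learning rates for a hypothetical 60-epoch run.
schedule = [learning_rate(epoch, 60) for epoch in range(60)]
```

The warm-up avoids large updates while the optimizer statistics are still unreliable, and the cosine decay then lowers the step size smoothly for the remaining epochs.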

Two models were trained: a lung region segmentation model and a COVID-19 classification model. The lung segmentation and COVID-19 classification models were trained for 100 and 60 epochs, and the training times were approximately 6 and 10 hours, respectively. The following subsections describe the qualitative and quantitative results of the two models.

Lung region segmentation results

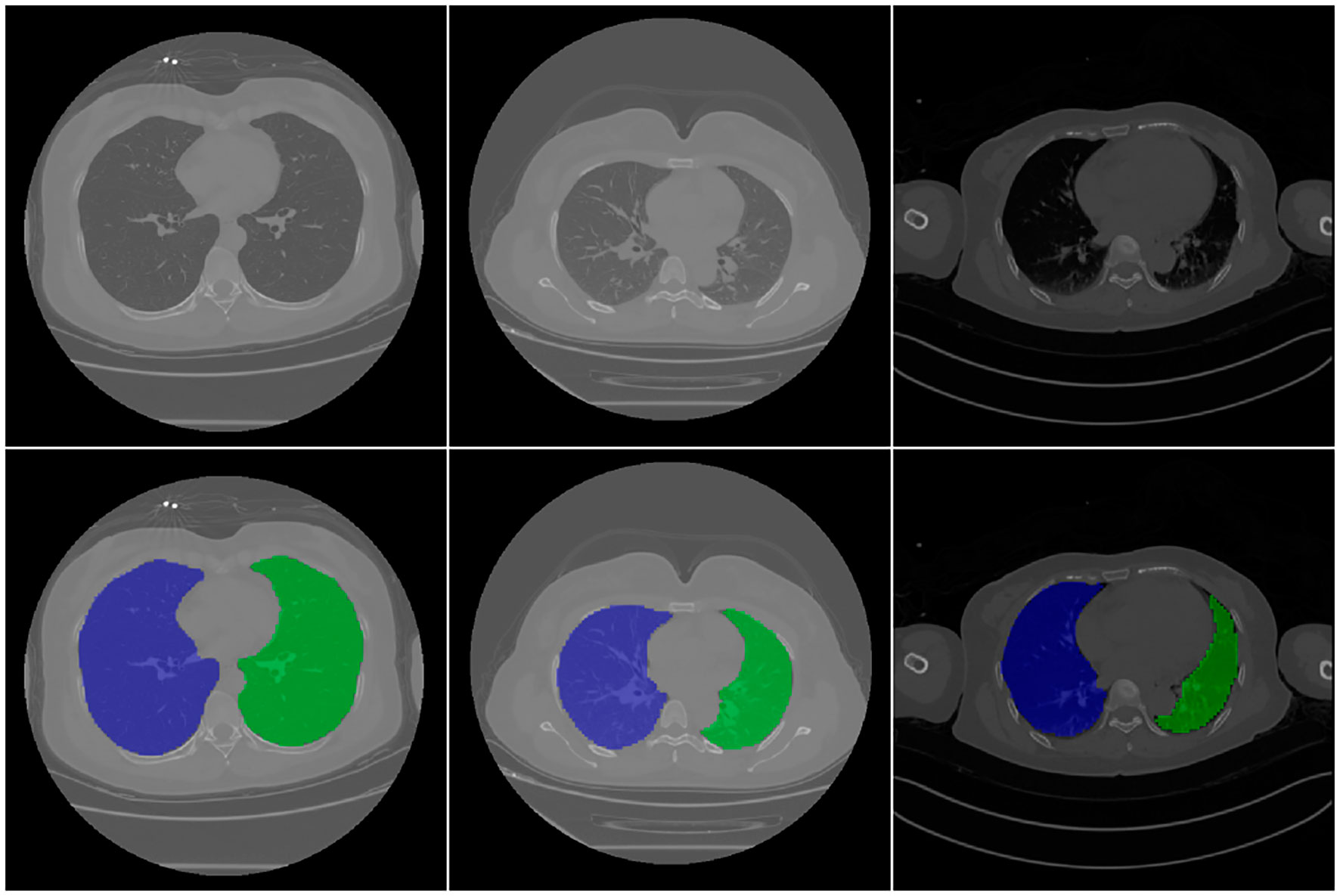

The lung region mask was generated using a 3D U-net model. The input was the original CT data, and the output was the mask of the left and right lungs. Figure 2 shows different image slices and their corresponding masks. Because lung segmentation serves only as an input to COVID-19 classification, very high accuracy is unnecessary. Our segmentation result was validated using the DC, which was approximately 96.5% on 30 independent CT scans.

Figure 2 CT images and related masks. The first row shows the original CT images, and the second row shows the corresponding segmentation results. In the figure, the background is shown in black, the right lung is shown in blue, and the left lung is shown in green.

COVID-19 classification results

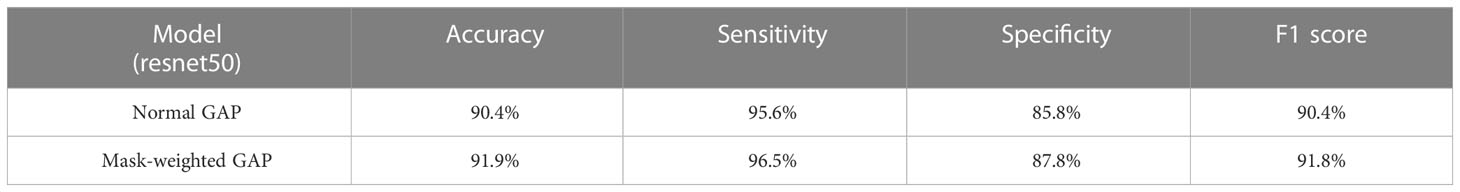

The performance of the proposed mask-weighted GAP method was evaluated on the test set, which included 226 COVID-19 CT scans and 254 non-COVID-19 scans. The evaluation metrics were calculated according to the formulas described in the previous section. Our proposed method achieved a sensitivity of 96.5% and a specificity of 87.8%.
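As a consistency check, the confusion-matrix counts implied by these figures can be back-calculated from the test set sizes and the rounded rates. The counts below are inferred, not reported in the paper, and the function names are hypothetical.

```python
def counts_from_rates(n_pos, n_neg, sensitivity, specificity):
    """Back-calculate (TP, FN, TN, FP) from group sizes and rounded rates."""
    tp = round(n_pos * sensitivity)
    tn = round(n_neg * specificity)
    return tp, n_pos - tp, tn, n_neg - tn


def summary_metrics(tp, fn, tn, fp):
    """Accuracy and F1 score from the four confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, f1


tp, fn, tn, fp = counts_from_rates(226, 254, 0.965, 0.878)
accuracy, f1 = summary_metrics(tp, fn, tn, fp)
# Inferred counts: TP = 218, FN = 8, TN = 223, FP = 31.
# accuracy = 441 / 480, approximately 0.919.
```

The inferred accuracy agrees with the 91.9% accuracy stated in the Discussion, which suggests that the reported sensitivity, specificity, and accuracy are mutually consistent.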

For comparison, we also trained a COVID-19 classification model using general GAP and evaluated its performance on the same test set. The quantitative metrics of the general GAP and mask-weighted GAP are compared in Table 2. Our proposed method achieved better performance: both the accuracy and F1 score improved by more than 1%. Based on these results, we found that mask-weighted GAP is useful for medical image classification of suspicious findings in specific clinical organs.

Table 2 Classification results of the proposed mask-weighted GAP compared with normal GAP, both based on ResNet50.

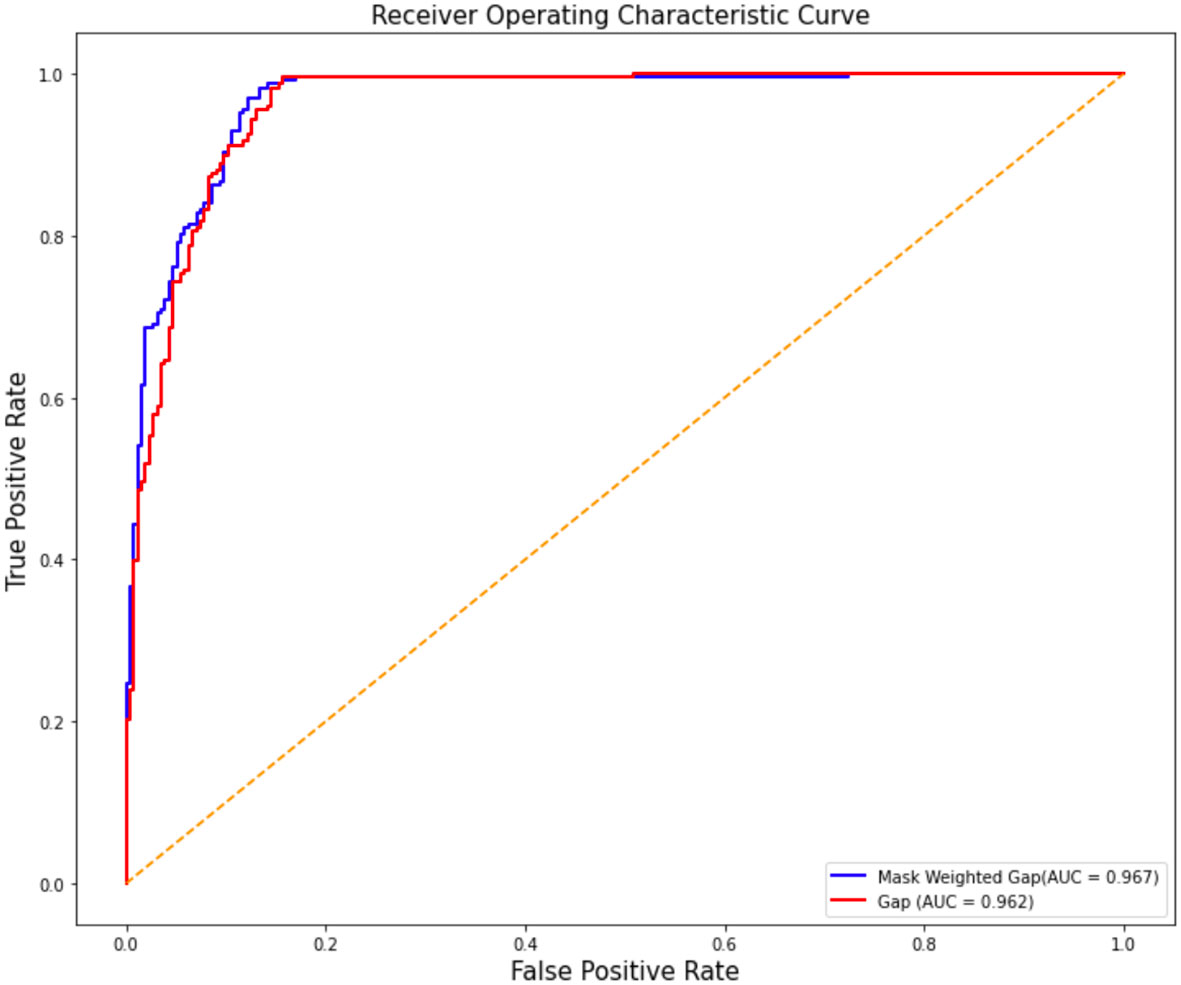

The ROC curve comparison between the experimental results of ResNet50 with GAP and with mask-weighted GAP is shown in Figure 3. The mask-weighted GAP obtained an AUC value of 0.967 for COVID-19 classification, whereas the general GAP method obtained an AUC value of 0.962. Therefore, the mask-weighted GAP is more useful for medical image classification of suspicious findings in specific clinical organs because it directs attention to the regions containing these findings.

Figure 3 ROC curve comparison of the two experimental results of ResNet50 with GAP and with mask-weighted GAP. The vertical axis is the true positive rate, and the horizontal axis is the false positive rate. The blue curve is the result of mask-weighted GAP, and the red curve is the result of GAP.

Visualization check of COVID-19 triage

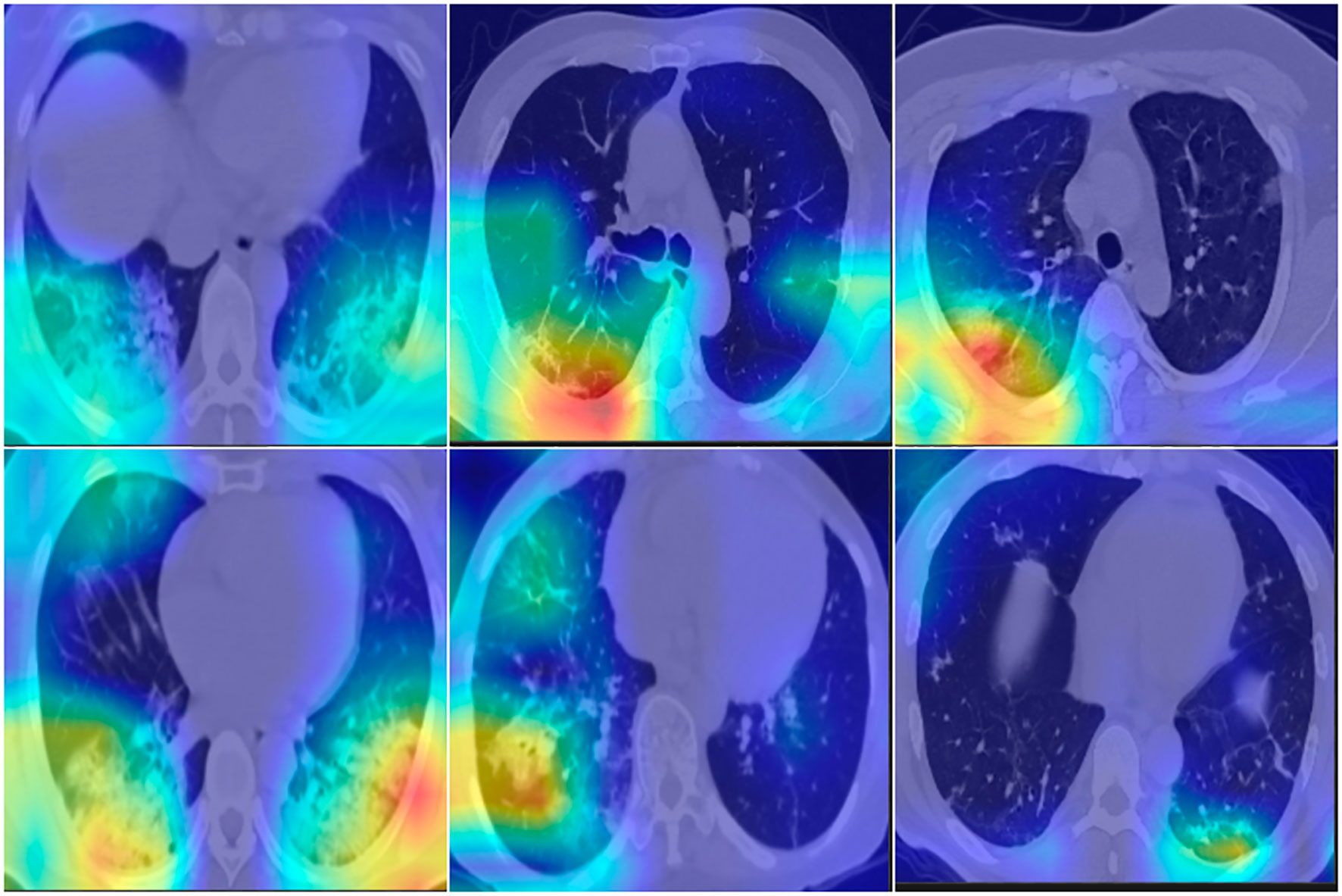

To confirm the COVID-19 triage result, Grad-CAM (Zhao et al., 2023) was adopted to fuse the key region for the classification decision on the original image. A good visualization method uses heavy colors to highlight the suspected region and light colors to indicate the normal region. The colored region is the most important in deciding whether the current image is a COVID-19 image; red indicates a high probability, while green and blue are the next priority. The fusion image will not include a significantly colored region if the CT image is normal. Figure 4 shows the suspected COVID-19 region highlighted in red; however, the normal region on the CT image is without a significant color. The fusion color map is useful in confirming the importance of CNN feature regions to distinguish COVID-19 suspects.

Figure 4 Fusion images with Grad-CAM, which indicate the important regions for classification. The first row shows normal cases, and the second row shows COVID-19 cases. Heavy colors indicate regions at high risk of suspected lesions, and light colors indicate normal regions.

Discussion

In this study, we developed and evaluated a lung mask-weighted GAP-based deep learning method for COVID-19 triage based on chest CT scans. A total of 2,809 scans, including 854 normal, 850 common pneumonia, and 1,105 COVID-19 cases, were used for classification, and 330 data volumes with lung masks were used for lung segmentation. The U-net-based model achieved a DC of approximately 96.5% in the lung segmentation task, and the mask-weighted GAP method achieved an accuracy of 91.9%, sensitivity of 96.5%, specificity of 87.8%, and AUC of 96.7% in the COVID-19 classification task. In addition, a Grad-CAM method was adopted to confirm the COVID-19 triage results.

The lung mask-weighted GAP achieved better performance than the normal GAP classification method, with improvements of approximately 1%-2% in accuracy, sensitivity, specificity, and F1 score and an increase in AUC from 0.962 to 0.967. This may be because the lung mask-weighted GAP method focuses the model on the lung region containing the suspected COVID-19, reduces the influence of the background region, and highlights the classification features of tissue regions. In addition, the lung mask-weighted GAP could improve the sensitivity to suspicious features in the lung region, such as ground-glass opacity or consolidation, and improve the specificity by reducing the effects of artifacts. Furthermore, according to the ROC curve, our COVID-19 classification model is a high-sensitivity model, which is important in screening COVID-19 patients. In our experiments, the AI algorithm took only 10 s to complete the classification of one case, which can reduce the pressure on radiologists and improve the efficiency of diagnosis.

This study also had several limitations. First, we only performed COVID-19 diagnosis based on chest CT scans using the deep learning method. Although we developed an algorithm to detect the infection lesion, this study did not report a quantitative analysis of the infection lesion. Second, motion artifacts caused by respiration and cardiac motion may reduce the accuracy of the deep learning method, and this study excluded several cases with severe motion artifacts. In the future, the training data should include motion artifact cases for both COVID-19 and normal scans. Finally, our study data included COVID-19, pneumonia, and normal cases; therefore, a deep learning method for three-class diagnosis of these cases should be developed in future work.

In this study, we have verified the effect of mask weighting on GAP. In the future, we will apply mask weighting to other methods, such as attention models (Rehman et al., 2023) and transformer models (Yang et al., 2023), in the hope of further improving the performance.

Conclusions

A lung mask-weighted GAP-based deep learning method was developed to distinguish COVID-19 from non-COVID-19 cases based on chest CT scans. The evaluation results confirmed that this deep learning-based method was feasible.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/supplementary material.

Ethics statement

This study has been approved by the appropriate ethics committee and all persons gave their informed consent prior to their inclusion in the study.

Author contributions

H-TZ and F-GS conceived and designed the study. H-TZ, JZ, and SG wrote the paper and analysed data. J-HD, YL, and XB collected data and analysed imaging. J-LM and ML analysed imaging. Z-YS and GL provided technical support. J-MC was responsible for revising the manuscript. All authors contributed to the article and approved the submitted version.

Acknowledgments

We sincerely thank all patients in the study.

Conflict of interest

Authors Z-YS and GL were employed by Keya Medical Technology Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ai, T., Yang, Z., Hou, H., Zhan, C., Chen, C., Lv, W., et al. (2020). Correlation of chest CT and RT-PCR testing in coronavirus disease 2019 (COVID-19) in China: A report of 1014 cases. Radiology 296 (2), E32–E40. doi: 10.1148/radiol.2020200642

An, P., Xu, S., Harmon, S. A., Turkbey, E. B., Sanford, T. H., Amalou, A., et al. (2020). CT images in covid-19 [Data set]. Cancer Imaging Archive.

Armato, S. G., McLennan, G., Bidaut, L., McNitt-Gray, M. F., Meyer, C. R., Reeves, A. P., et al. (2011). The lung image database consortium (LIDC) and image database resource initiative (IDRI): A completed reference database of lung nodules on CT scans. Med. Phys. 38 (2), 915–931. doi: 10.1118/1.3528204

Bao, C., Liu, X., Zhang, H., Li, Y., Liu, J. (2020). Coronavirus disease 2019 (COVID-19) CT findings: A systematic review and meta-analysis. J. Am. Coll. Radiol. 17 (6), 701–709. doi: 10.1016/j.jacr.2020.03.006

Bao, H., Zhu, Y., Li, Q. (2023). Hybrid-scale contextual fusion network for medical image segmentation. Comput. Biol. Med. 152, 106439. doi: 10.1016/j.compbiomed.2022.106439

Bosowski, P., Bosowska, J., Nalepa, J. (2021). “Evolving deep ensembles for detecting covid-19 in chest X-rays,” in 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, Vol. 2021. 3772–3776. doi: 10.1109/ICIP42928.2021.9506119

Fan, X., Feng, X., Dong, Y., Hou, H. (2022). COVID-19 CT image recognition algorithm based on transformer and CNN. Displays 72, 102150. doi: 10.1016/j.displa.2022.102150

Garg, M., Prabhakar, N., Muthu, V., Farookh, S., Kaur, H., Suri, V., et al. (2022). CT findings of COVID-19–associated pulmonary mucormycosis: A case series and literature review. Radiology 302 (1), 214–217. doi: 10.1148/radiol.2021211583

Guan, W.-J., Ni, Z.-Y., Hu, Yu, Liang, W.-H., Ou, C.-Q., He, J.-X., et al. (2020). Clinical characteristics of coronavirus disease 2019 in China. New Engl. J. Med. 382 (18), 1708–1720. doi: 10.1056/NEJMoa2002032

Harmon, S. A., Sanford, T. H., Xu, S., Turkbey, E. B., Roth, H., Xu, Z., et al. (2020). Artificial intelligence for the detection of COVID-19 pneumonia on chest CT using multinational datasets. Nat. Commun. 11 (1), 4080. doi: 10.1038/s41467-020-17971-2

Islam, Md. Z., Islam, Md. M., Asraf, A. (2020). A combined deep CNN-LSTM network for the detection of novel coronavirus (COVID-19) using X-ray images. Inform Med. Unlocked 20, 100412. doi: 10.1016/j.imu.2020.100412

Jaipurkar, S. S., Jie, W., Zeng, Z., Gee, T. S., Veeravalli, B., Chua, M. (2018). Automated classification using end-to-End deep learning. Annu. Int. Conf IEEE Eng. Med. Biol. Soc. 2018, 706–709. doi: 10.1109/EMBC.2018.8512356

Jiang, F., Deng, L., Zhang, L., Cai, Y., Cheung, C. W., Xia, Z. (2020). Review of the clinical characteristics of coronavirus disease 2019 (COVID-19). J. Gen. Internal Med. 35 (5), 1545–1549. doi: 10.1007/s11606-020-05762-w

Kalpana, G., Durga, A.K., Karuna, G. (2022). CNN Features and optimized generative adversarial network for COVID-19 detection from chest X-ray images. Crit. Rev. BioMed. Eng. 50 (3), 1–17. doi: 10.1615/CritRevBiomedEng.2022042286

Ker, J., Singh, S. P., Bai, Y., Rao, J., Lim, T., Wang, L. (2019). Image thresholding improves 3-dimensional convolutional neural network diagnosis of different acute brain hemorrhages on computed tomography scans. Sensors (Basel) 19 (9), 2167. doi: 10.3390/s19092167

Kibriya, H., Amin, R. (2022). A residual network-based framework for COVID-19 detection from CXR images. Neural Comput. Appl. 15, 1–12. doi: 10.1007/s00521-022-08127-y

Li, Y., Lei, M., Cheng, Y., Wang, R., Xu, M. (2022). Convolutional neural network with Huffman pooling for handling data with insufficient categories: A novel method for anomaly detection and fault diagnosis. Sci. Prog. 105 (4), 368504221135457. doi: 10.1177/00368504221135457

Li, Z., Zhong, Z., Li, Y., Zhang, T., Gao, L., Jin, D., et al. (2020). From community-acquired pneumonia to COVID-19: A deep learning–based method for quantitative analysis of COVID-19 on thick-section CT scans. Eur. Radiol. 30 (12), 6828–6837. doi: 10.1007/s00330-020-07042-x

Lu, Z., Miao, J., Dong, J., Zhu, S., Wu, P., Wang, X., et al. (2023). Automatic multilabel classification of multiple fundus diseases based on convolutional neural network with squeeze-and-Excitation attention. Transl. Vis. Sci. Technol. 12 (1), 22. doi: 10.1167/tvst.12.1.22

Malik, H., Anees, T., Din, M., Naeem, A. (2022). CDC_Net: Multi-classification convolutional neural network model for detection of COVID-19, pneumothorax, pneumonia, lung cancer, and tuberculosis using chest X-rays. Multimed Tools Appl. 20, 1–26. doi: 10.1007/s11042-022-13843-7

Morozov, S. P., Andreychenko, A. E., Pavlov, N. A., Vladzymyrskyy, A. V., Chernina, V. Y. (2020). Data from: Mosmeddata: Chest CT scans with COVID-19 related findings dataset. Digital diagnostics 1, 49–59.

Prakash, J. A., Ravi, V., Sowmya, V., Soman, K. P. (2022). Stacked ensemble learning based on deep convolutional neural networks for pediatric pneumonia diagnosis using chest X-ray images. Neural Comput. Appl. 7, 1–21. doi: 10.1007/s00521-022-08099-z

Papetti, D. M., Abeelen, K. V., Davies, R., Menè, R., Heilbron, F., Perelli, F. P., et al. (2022). An accurate and time-efficient deep learning-based system for automated segmentation and reporting of cardiac magnetic resonance-detected ischemic scar. Comput. Methods Programs BioMed. 229, 107321. doi: 10.1016/j.cmpb.2022.107321

Qin, Le, Yang, Y., Cao, Q., Cheng, Z., Wang, X., Sun, Q., et al. (2020). A predictive model and scoring system combining clinical and CT characteristics for the diagnosis of COVID-19. Eur. Radiol. 30 (12), 6797–6807. doi: 10.1007/s00330-020-07022-1

Rehman, Z. Ur, Ijaz, N., Ye, W., Ijaz, Z. (2023). Design optimization and statistical modeling of recycled waste-based additive for a variety of construction scenarios on heaving ground. Environ. Sci. pollut. Res. Int. doi: 10.1007/s11356-022-24853-1

Roth, H., Xu, Z., Diez, C. T., Jacob, R. S., Zember, J., Molto, J., et al. (2022). Rapid artificial intelligence solutions in a pandemic - the COVID-19-20 lung CT lesion segmentation challenge. Med. Image Anal. 82, 102605. doi: 10.1016/j.media.2022.102605

Shaik, N. S., Cherukuri, T. K. (2022). Transfer learning based novel ensemble classifier for COVID-19 detection from chest CT-scans. Comput. Biol. Med. 141, 105127. doi: 10.1016/j.compbiomed.2021.105127

Suganya, D., Kalpana, R. (2023). Prognosticating various acute covid lung disorders from COVID-19 patient using chest CT images. Eng. Appl. Artif. Intell. 119, 105820. doi: 10.1016/j.engappai.2023.105820

Wodzinski, M., Skalski, A., Hemmerling, D., Orozco-Arroyave, J. R., Noth, E. (2019). Deep learning approach to parkinson’s disease detection using voice recordings and convolutional neural network dedicated to image classification. Annu. Int. Conf IEEE Eng. Med. Biol. Soc. 2019, 717–720. doi: 10.1109/EMBC.2019.8856972

Yang, X., Tian, J., Wan, Y., Chen, M., Chen, L., Chen, J. (2023). Semi-supervised medical image segmentation via cross-guidance and feature-level consistency dual regularization schemes. Med. Phys. doi: 10.1002/mp.16217

Ye, Z., Zhang, Y., Wang, Yi, Huang, Z., Song, B. (2020). Chest CT manifestations of new coronavirus disease 2019 (COVID-19): A pictorial review. Eur. Radiol. 30 (8), 4381–4389. doi: 10.1007/s00330-020-06801-0

Keywords: coronavirus disease 2019 (COVID-19), computed tomography (CT), deep learning, global average pooling (GAP), artificial intelligence

Citation: Zhang H-T, Sun Z-Y, Zhou J, Gao S, Dong J-H, Liu Y, Bai X, Ma J-L, Li M, Li G, Cai J-M and Sheng F-G (2023) Computed tomography–based COVID–19 triage through a deep neural network using mask–weighted global average pooling. Front. Cell. Infect. Microbiol. 13:1116285. doi: 10.3389/fcimb.2023.1116285

Received: 05 December 2022; Accepted: 13 February 2023;

Published: 03 March 2023.

Edited by:

Sreekanth Gopinathan Pillai, Indian Institute of Chemical Technology (CSIR), India

Reviewed by:

Jakub Nalepa, Silesian University of Technology, Poland

Jun Xia, Shenzhen Second People’s Hospital, China

Copyright © 2023 Zhang, Sun, Zhou, Gao, Dong, Liu, Bai, Ma, Li, Li, Cai and Sheng. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jian-Ming Cai, beili12345@sina.cn; Fu-Geng Sheng, fugeng_sheng@163.com

†These authors have contributed equally to this work
