Abstract

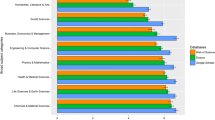

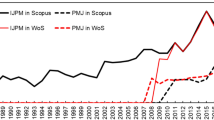

The top-1% most-highly-cited articles are watched closely as the vanguard of the sciences. Web of Science data show that China overtook the USA in relative participation in the top-1% (PP-top1%) in 2019, after outcompeting the EU on this indicator in 2015. This finding contrasts with repeated reports by Western agencies that the quality of China’s scientific output lags behind that of other advanced nations, even as China has caught up in numbers of articles. The difference between the results presented here and previous results stems mainly from field normalization, which classifies source journals by discipline. Average citation rates of these subsets are commonly used as a baseline so that one can compare across disciplines. However, the expected number of top-1% papers in a sample of N papers is N / 100, ceteris paribus. When average citation rates are used as expected values instead, errors are introduced by (1) using the mean of highly skewed distributions and (2) a specious precision in the delineation of the subsets. Classifications can be used for the decomposition, but not for the normalization. When the data are thus decomposed, the USA ranks ahead of China in biomedical fields such as virology. Although the number of papers is smaller, China outperforms the USA in the field of Business and Finance (in the Social Sciences Citation Index; p < .05). Using percentile ranks, subsets other than indexing-based classifications can also be tested for the statistical significance of differences among them.
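The reasoning behind PP-top1% can be illustrated with a minimal Python sketch. It assumes a standard two-proportion z-test with a pooled standard error, which may differ in detail from the procedure used in the article. The function names and the paper counts are hypothetical and serve only as an illustration.

```python
import math


def pp_top1(n_top1: int, n_papers: int) -> float:
    """Percentage of a unit's papers that belong to the global top-1%."""
    return 100.0 * n_top1 / n_papers


def expected_top1(n_papers: int) -> float:
    """Expected number of top-1% papers in a set of N papers: N / 100."""
    return n_papers / 100.0


def z_two_proportions(top_a: int, n_a: int, top_b: int, n_b: int) -> float:
    """z statistic for the difference between two independent proportions.

    Assumption: pooled standard error under the null hypothesis that both
    units have the same share of top-1% papers.
    """
    p_a = top_a / n_a
    p_b = top_b / n_b
    pooled = (top_a + top_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n_a + 1.0 / n_b))
    return (p_a - p_b) / se


# Hypothetical counts, not taken from the article.
unit_a = (1200, 100_000)  # (top-1% papers, all papers)
unit_b = (1000, 100_000)

print(pp_top1(*unit_a), pp_top1(*unit_b))
print(expected_top1(unit_a[1]))
z = z_two_proportions(*unit_a, *unit_b)
print(abs(z) > 1.96)  # True would indicate significance at the 5% level
```

Because the expectation N / 100 follows directly from the definition of the top-1%, no citation average enters this comparison, so the skewness of the citation distribution does not affect it.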

Notes

- The National Science Board of the United States establishes the policies of the National Science Foundation and serves as advisor to Congress and the President. NSB’s biennial report—Science and Engineering Indicators (SEI)—provides comprehensive information on the nation’s S&E.

- The “Flagship Collection” of WoS includes the Science Citation Index-Expanded (SCIE), the Social Sciences Citation Index (SSCI), the Arts & Humanities Citation Index (A&HCI), and the Emerging Sources Citation Index.

- Interestingly, since 2017, the differences between the EU with or without the UK are not statistically significant (z ≤ 1.96; p > .05).

- The Institute for Scientific Information (ISI) in Philadelphia (PA) was the owner and producer of the Science Citation Indexes at the time.

- One can formalize I3 as follows:

  $$I3 = \sum\nolimits_{i = 1}^{C} f(X_{i}) \times X_{i} \quad (1)$$

  where $X_i$ indicates the percentile ranks and $f(X_i)$ denotes the frequencies of the ranks, with $i = [1, C]$ as the percentile rank classes.
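Equation (1) can be sketched in a few lines of Python. This is a minimal illustration, assuming that each paper has already been assigned to a percentile rank class. The function name `i3` and the sample ranks are hypothetical, and the rule for deriving percentile ranks from citation counts is not specified here.

```python
from collections import Counter
from typing import Iterable


def i3(percentile_ranks: Iterable[int]) -> int:
    """Integrated Impact Indicator following Eq. (1).

    Sums f(X_i) * X_i over the percentile rank classes, where f(X_i) is
    the number of papers observed in rank class X_i.
    """
    frequencies = Counter(percentile_ranks)  # f(X_i) for each class X_i
    return sum(count * rank for rank, count in frequencies.items())


# Hypothetical percentile rank classes of four papers.
ranks = [99, 99, 50, 10]
print(i3(ranks))  # 2 * 99 + 1 * 50 + 1 * 10 = 258
```

Because the ranks are summed rather than averaged, I3 grows with both the number of papers and their percentile positions, and no mean of a skewed distribution is involved.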

Acknowledgements

We thank Koen Jonkers, Xabier Goenaga, Ronald Rousseau and two anonymous referees for advice, critiques, and suggestions. Loet Leydesdorff is grateful to ISI/Clarivate for JCR data.

Cite this article

Wagner, C.S., Zhang, L. & Leydesdorff, L. A discussion of measuring the top-1% most-highly cited publications: quality and impact of Chinese papers. Scientometrics 127, 1825–1839 (2022). https://doi.org/10.1007/s11192-022-04291-z

DOI: https://doi.org/10.1007/s11192-022-04291-z