Transforming Wikipedia into an accurate cultural knowledge quiz

Building “Test Your Culture” by taking the world’s largest encyclopedia… and combining it with data science

📊 To try the quiz, visit Test Your Culture.

Summary: by using Wikipedia “infoboxes” to identify cultural content, together with the mathematical techniques of binomial regression and maximum likelihood estimation, it’s possible to create a fun, educational, and scientifically accurate quiz of cultural knowledge — and use the results to answer research questions.

Recently, I wanted to see if it was possible to measure someone’s cultural knowledge. After all, we already have IQ tests which (arguably) measure intelligence, along with standardized tests like the SAT which measure academic knowledge in specific areas. But what about general cultural knowledge — things like movies, books, and music? Politicians, celebrities, and historical figures? And I wasn’t interested in trivia quizzes — I wanted to know if you could create something that measured your actual cultural knowledge, in a scientifically accurate way.

To the best of my knowledge, no one had ever attempted an accurate, wide-ranging test of “all” cultural knowledge. But if you could create such a test, you could answer all sorts of interesting questions: how much does cultural knowledge vary between people? At what rate does it grow, and how does that change with age? And what other patterns could you find? Plus, it would just be fun to take. Would it be possible?

Fortunately, I had some experience with this kind of thing. Eight years ago, I wanted to know how English vocabulary sizes varied among people, and I created Test Your Vocab. It’s a five-minute quiz that gives users a randomly selected list of 160 words and asks them to mark which ones they know; in return, it reports the size of their vocabulary. It’s mathematically accurate, and once it had been taken a couple million times, I had enough data to turn it into a collection of research results. (For example, I discovered that the average adult test-taker learns almost 1 new word a day until middle age.)

I wanted to try the same approach with culture, where users would be given a series of cultural items and asked to mark if they knew each one or not. (Trying to do something more complicated like multiple choice questions wouldn’t work for several reasons — it wouldn’t be feasible to write tens of thousands of questions, it would take too much time for users to answer them, and I wanted to measure how many items test-takers knew, not what they knew about them.)

Measuring vocabulary had been relatively straightforward, since there’s already an authoritative list of words to sample from: the dictionary. But how could you define something so amorphous as “culture”? What could I measure from? I realized the answer was staring me in the face, in the form of a website I use almost every day: Wikipedia. Unlike print encyclopedias, it contains an entry not just for notable topics and people, but also for movies, books, music albums, and more… over 5,000,000 entries total (compared with just 40,000 for the 2013 print Encyclopædia Britannica). A veritable cultural cornucopia!

Could I turn Wikipedia into a scientifically accurate test of cultural knowledge? And make it educational too? The answer was yes… but it would turn out to be a greater challenge than building Test Your Vocab. The difficulties lay not just in figuring out how to import and process all the data, but also in determining the right mathematical techniques for it, as well as making it intuitive, clear, and fun to use.

Retrieving cultural items from Wikipedia

Download and import Wikipedia

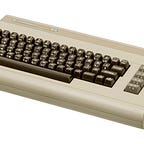

The first step was to download Wikipedia, which is as easy as going to dumps.wikipedia.org and downloading enwiki-latest-pages-articles.xml.bz2. It’s not small: over 15 GB compressed, and over 60 GB uncompressed. But it’s easy to use: a single XML file with the current text of all Wikipedia articles. Which, whenever I stopped to think about it, would never fail to blow my mind: Here I was, carrying the world’s largest and most comprehensive collection of general human knowledge, over three billion words… on my laptop, with me to the coffee shop. Technology sure had come a long way since I grew up learning to program on a computer with 64 KB of memory.

Importing it into a database was easy enough, too. I didn’t bother with any XML parsing libraries since I was worried about how they’d handle tens of gigabytes. I didn’t even worry about uncompressing the file first. Instead, I wrote a script to open the compressed file directly, pipe it through bunzip2 to decompress it on-the-fly, used some simple string processing to extract the unique ID, title, and full “Wikitext” of each article, and then wrote each article as a row to the database. And actually, I didn’t even have to download the file separately first: I could pipe it straight from Wikipedia’s server. (The magic of streams!)
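
A minimal Python sketch of this kind of streaming import might look like the following. It is not the original script: the function name `iter_articles` is hypothetical, the regular expressions assume the usual `<page>`, `<title>`, `<id>`, and `<text>` layout of the dump, and the database write is left out. The tiny in-memory sample stands in for the real multi-gigabyte file.

```python
import bz2
import html
import io
import re

def iter_articles(compressed_stream):
    """Yield (page_id, title, wikitext) tuples from a bz2-compressed dump."""
    # bz2.open accepts an open binary file object and decompresses lazily,
    # so the uncompressed XML never has to exist on disk or in memory at once.
    with bz2.open(compressed_stream, mode="rt", encoding="utf-8") as lines:
        page = []
        for line in lines:
            if "<page>" in line:
                page = []                      # start collecting a new article
            page.append(line)
            if "</page>" in line:
                xml = "".join(page)
                # The first <id> inside a page is the page ID (revision IDs come later).
                page_id = re.search(r"<id>(\d+)</id>", xml)
                title = re.search(r"<title>(.*?)</title>", xml)
                text = re.search(r"<text[^>]*>(.*?)</text>", xml, re.S)
                if page_id and title and text:
                    yield (int(page_id.group(1)),
                           html.unescape(title.group(1)),
                           html.unescape(text.group(1)))

# Tiny stand-in for the real dump, compressed in memory for demonstration.
sample = """<mediawiki>
  <page>
    <title>Moby-Dick</title>
    <ns>0</ns>
    <id>42</id>
    <revision>
      <id>999</id>
      <text bytes="22" xml:space="preserve">{{Infobox book}} A &amp; B</text>
    </revision>
  </page>
</mediawiki>"""
articles = list(iter_articles(io.BytesIO(bz2.compress(sample.encode("utf-8")))))
```

Because the function only needs a readable binary stream, the same loop should in principle work on a local file or on a download in progress, with each yielded tuple written as one database row.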

Article categories

I didn’t want to import all of Wikipedia — I only wanted articles that were distinct cultural items. I didn’t want topics like “Physics”, I didn’t want things like “List of X…”, and I certainly didn’t want disambiguation pages, talk pages, user pages, and so on. Instead, I had a specific list of the types of articles I did want: people, musical artists (because they can be groups of people), and creative works (like books, movies, music, and so on).

My first thought was to use the categories listed at the bottom of each Wikipedia article. After all, they covered all the types of items I was looking for, and appeared to follow a hierarchy. For example, “Albert Einstein” belongs to over 80 categories, but they’re all categories of people:

One of them is “20th-century physicists.” That belongs to the category “Physicists by century,” which belongs to “Scientists by century,” and so on through “People by occupation and century,” “People by century,” “People by time,” and finally… “People.” Bingo! It seemed to work for creative works too — e.g. Moby Dick belonged to “1851 American novels” which, after a number of hops, eventually belonged to “Books”:

All I needed to do was identify all the subcategories underneath certain top-level categories such as “People” and “Books”, and I’d have the cultural items I wanted. Excitedly, I added code to my script to record in my database the categories each article belonged to (which included the categories each “category article” belonged to, so I had the full tree). Then to check the results, I wrote a query to run the hierarchy in reverse, and find all the articles covered by subcategories of “People” a certain number of levels deep. I hit “execute”, and…

…it was a disaster. Every kind of article was popping up! For example, it listed “Apple” the company, which was certainly not a person. Digging in, it turned out “Apple” belonged to the category Steve Jobs which eventually belonged to… “People,” of course. It turned out Wikipedia categories aren’t strictly hierarchical at all, but are used for so many “related” things as to make them useless for determining what kind of a thing an article represented. Deflated, I wondered if there was another way?

Infoboxes

I remembered that in the top-right corner of many Wikipedia articles, there’s a box of information, usually with a photo… an “infobox” in Wikipedia parlance, it turned out. And looking at the Wikitext of each article, each infobox seemed to belong to a clearly-defined category as well. Steve Jobs had a “person” infobox, Apple had a “company” infobox, and Moby-Dick had a “book” infobox. Promising… how many infoboxes were there? More than 1,500, I discovered. Could it work?

I manually made a list of the couple-hundred infoboxes referring to people (“person”, “NFL biography”, “Christian leader”, etc.), and found the couple-dozen infoboxes for the creative works I wanted (“film”, “book”, “album”, etc.). I wrote some further simple string processing to locate the infobox (if present) in the Wikitext of each article and extract its name, mapped it to my manual list of desired categories, and then saved it to my database.
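
The extraction step can be sketched in Python roughly as follows. This is an illustration rather than the original code: the pattern, the function name `infobox_category`, and the heavily abbreviated `INFOBOX_CATEGORIES` table are all assumptions, and the real mapping covered a couple hundred infobox names.

```python
import re

# Hypothetical, heavily abbreviated mapping from infobox names to item types.
INFOBOX_CATEGORIES = {
    "person": "person",
    "nfl biography": "person",
    "christian leader": "person",
    "film": "movie",
    "book": "book",
    "album": "album",
}

# Matches the opening of a template such as "{{Infobox book" and captures
# the name that follows, up to the first "|", "}", "<", or line break.
INFOBOX_PATTERN = re.compile(r"\{\{\s*infobox[ _]+([^|}\n<]+)", re.IGNORECASE)

def infobox_category(wikitext):
    """Return the item type for the first infobox in the text, or None."""
    match = INFOBOX_PATTERN.search(wikitext)
    if match is None:
        return None
    name = match.group(1).strip().lower().replace("_", " ")
    # Infoboxes that are not on the wanted list (e.g. "company") map to None.
    return INFOBOX_CATEGORIES.get(name)
```

For example, `infobox_category("{{Infobox company|name=Apple}}")` returns `None` because "company" is not on the wanted list, which is exactly the filtering the category approach failed to provide.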

The good news: it did work! The bad news: it needed a lot of further tweaks. While virtually all creative works have infoboxes, most of the “long tail” of people in Wikipedia don’t, so I had to find another way of identifying them (solution: detect string matches for birth-year categories like “1923 births”). Some articles have infoboxes not for the main topic but for something related later in a page (e.g. an author doesn’t have an infobox of their own, but has an infobox for a book of theirs later on) so I needed to limit myself to infoboxes at the start of an article. But sometimes there’s valid text that comes before the infobox (e.g. various types of headers) so I had to develop various heuristics to find infoboxes that “count.” But in the end, I successfully wound up with:

- 1,500,000 people both current and historical (also including groups like musical artists)

- 200,000 albums and songs, 120,000 movies, 40,000 TV shows, and 20,000 video games

- 40,000 books and short stories, 9,000 comics, anime and manga, and 5,000 plays, musicals and operas

- 6,000 artworks (such as paintings and sculptures), and 1,500 compositions (such as symphonies)

So I had my database of cultural items — but that was just the start.

Determining item popularity

Now that I had my database of nearly two million cultural items, I needed a way to determine their relative levels of popularity. After all, I couldn’t simply randomly sample quiz items from all two million, since that would result in a quiz where nobody would usually know any of the items. One of the keys to Test Your Vocab’s success was in making the quiz adaptive — showing easier words to people with smaller vocabularies, and harder words to people with larger ones. How could I determine the level of difficulty of a Wikipedia article?

Import Wikipedia pageviews

Fortunately, along with the text of articles, Wikipedia makes another piece of data public as well: the number of times an article is viewed. Wikipedia provides raw pageviews data in separate downloadable files for each hour of traffic in its history (one dataset covering 2007–2016 and a newer one from 2015 onwards). But since each (compressed) file is about 50–100 MB, completely analyzing even a full year would involve downloading something like 600 GB across close to 9,000 files. Yikes! Luckily, some further digging revealed that starting in 2011, Wikipedia processed these into monthly files. Phew. So I wrote a second script to download a range of months of pageviews files, and import the lines into a new database table.

It wasn’t quite that simple, however: pageviews files list article URLs, not article titles, and a single article can be listed under a wide variety of different URLs thanks to Wikipedia redirects, which include things like alternate names, common misspellings, etc. So I added code to my original import script to create an additional table of Wikipedia redirects. With the new table, a simple join maps an article to the pageviews associated with all its redirects and sums them together to determine its popularity. And fortunately, Wikipedia doesn’t support redirects to redirects, so a single join is all that’s needed.
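
As a sketch of what that join could look like, here is a self-contained example using Python’s built-in `sqlite3` module. The table names, column names, and view counts are invented for illustration; the article does not say which database or schema was actually used.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE articles  (title TEXT PRIMARY KEY);
    CREATE TABLE redirects (from_title TEXT PRIMARY KEY, to_title TEXT);
    CREATE TABLE pageviews (url_title TEXT, views INTEGER);
""")
conn.executemany("INSERT INTO articles VALUES (?)",
                 [("Barack Obama",), ("Moby-Dick",)])
conn.executemany("INSERT INTO redirects VALUES (?, ?)",
                 [("Obama", "Barack Obama"), ("Moby Dick", "Moby-Dick")])
conn.executemany("INSERT INTO pageviews VALUES (?, ?)",
                 [("Barack Obama", 900), ("Obama", 100),
                  ("Moby-Dick", 50), ("Moby Dick", 25)])

# Every name an article can be reached under: its own title plus each
# redirect pointing at it. Since redirects never chain, one level is enough.
rows = conn.execute("""
    WITH names AS (
        SELECT title AS name, title AS canonical FROM articles
        UNION ALL
        SELECT from_title, to_title FROM redirects
    )
    SELECT n.canonical, SUM(p.views) AS total_views
    FROM names n
    JOIN pageviews p ON p.url_title = n.name
    GROUP BY n.canonical
    ORDER BY total_views DESC
""").fetchall()
```

Building the list of names with `UNION ALL` before joining avoids counting an article’s direct views once per redirect, which a naive join of articles, redirects, and pageviews would do.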

(There is the minor detail that a redirect now isn’t necessarily the same as a redirect in the past, and articles can be renamed — so historical traffic could wind up getting sent to the wrong present-day article, or being lost entirely when an article turns into a disambiguation page, for example. But it’s a relatively rare occurrence, and something I’ll just have to accept for now.)

Improving pageviews quality

Initial results were encouraging: at a glance, pageviews really did seem to reflect the cultural popularity of items. It passed the smell test. But it still needed some tweaks.

Initially I tried ranking items by their current popularity (the most recent full month), but this made the list feel too current, giving cinema blockbusters and musical artists of the moment far too much importance. Experimenting with different time ranges, I settled on averaging the popularity of items over the previous five years, which seemed to strike the right balance between ensuring that cultural items felt durable, while still changing with the times. (I also had to normalize pageviews for any given month by the month’s total, so Wikipedia’s overall growing traffic wouldn’t give stronger weight to more recent months.)

But there were still a few oddball items with unexpectedly high scores. Digging into the data, I saw that certain articles would have unexpectedly massive spikes in traffic for a month or two, and then level off again (sometimes clearly due to a news event, other times with no obvious explanation). So to remove outliers, for each article I ignored the 5% of months with the highest traffic.
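
Put together, the normalization and outlier removal amount to a trimmed average of monthly traffic shares. A small Python sketch, in which the function name and the rounding of "5% of months" to a whole number are assumptions:

```python
def popularity_score(article_views, monthly_totals, trim_fraction=0.05):
    """Average monthly share of traffic, ignoring the highest-traffic months.

    article_views[i] is the article's views in month i, and monthly_totals[i]
    is the total traffic for that month (at least one month is required).
    """
    # Normalize each month by that month's total traffic, so that overall
    # growth in Wikipedia traffic does not favor more recent months.
    shares = [views / total for views, total in zip(article_views, monthly_totals)]
    # Drop the top few months to remove short-lived spikes.
    drop = round(len(shares) * trim_fraction)
    kept = sorted(shares)[: len(shares) - drop]
    return sum(kept) / len(kept)
```

With five years of data (60 months), a `trim_fraction` of 0.05 discards the three busiest months for each article, so a one-month news spike no longer moves its score.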

With these strategies combined, the list felt intuitively right and I was on my way. The top 5 items were:

- Donald Trump

- Game of Thrones

- Elizabeth II

- Barack Obama

- Cristiano Ronaldo

But already from this list, I found myself questioning which culture I would be measuring. American? British? Or even international, when it comes to soccer (football)?

Test whose culture?

I was basing my measure of cultural popularity on English-language Wikipedia pageviews. When I looked up traffic by country, the majority was American. But there was still significant traffic from the UK and India, which explained the presence of a bit more British royalty and Bollywood stars than Americans would be accustomed to — as well as footballers and mixed martial artists.

Culture isn’t something universal: there’s American culture and British culture, French culture and Chinese culture, and even very different culture for a 7-year-old (children’s authors, songs and TV shows) versus an adult. Ideally you’d create separate tests for all of them. But unfortunately, Wikipedia doesn’t make pageview statistics available per-country, only per-language. (And certainly not per age group either.) So for the time being, I realized I had no choice but to make the quiz cover “adult English-speaking culture” as determined by the proportions of Wikipedia visitors from each country. (But if Wikipedia ever does separate out pageviews per country, the first thing I’d like to do is create separate quizzes for the US, UK, India, and so on.)

Measuring knowledge

Now that I had the list, how would I calculate someone’s knowledge from it, in just a 5-minute quiz? I didn’t just want this to be fun — I wanted it to be a scientifically accurate measure of someone’s knowledge, something that I and others could produce statistically valid research from.

The challenge

I already had experience doing something similar with Test Your Vocab. People’s vocabularies followed a general pattern: they knew all words up to a certain level of difficulty, then there was a transition period where knowledge decreased, and then it trailed off to a level of virtually zero knowledge. (And then, the dictionary ends.) I’d built the Test Your Vocab quiz to comprise two steps: an initial step which tested 40 words from extremely easy to extremely difficult in order to approximately find the “zone of transition,” and a second adaptive step showing 120 words sampled from across that zone of transition. By focusing mainly on each person’s unique zone of transition, and assuming they knew all words prior to it and no words after it, I could add up all the words prior to the zone, together with the proportion of words they knew in the zone, ignore everything after it, and finally produce a fairly accurate vocabulary measure. The actual math was a little more complicated since I chose word difficulties logarithmically rather than linearly, but at the end of the day it was still just adding up numbers, where each checked box stood in for a number of known words:

But when I started going through my ordered list of cultural items, I realized this approach wouldn’t work at all.

First, even out of the first 100 items, already I didn’t know a full quarter of them. Because culture is so broad, there isn’t any early zone of “knowing everything” I could rely on to account for the bulk of someone’s knowledge. (Someone might be knowledgeable about teen music but totally ignorant about politicians, or vice-versa.)

Second, not only was there no zone of “knowing everything,” but there was also no zone of “knowing nothing.” After the first 10,000 items, I still knew roughly 10% of items. After 100,000 items, I knew around 1%. And even after a million items, I could pick out something like 1 in 1,000. So unlike vocabulary, cultural knowledge was distributed more like this:

And this led to a third problem: because people’s knowledge is thin but stretches far out, if I sampled 100 items randomly from even just the top 50,000 items, someone might know just 5 of them! The quiz wouldn’t be fun or particularly accurate. I’d have to figure out something smarter.

Data modeling

The solution would have to rely on the fact that everybody’s cultural knowledge must follow a defined “curve,” decaying according to a certain formula as items became more difficult. If I could find the shape of that curve then I could calculate a person’s overall knowledge. I could discover the shape by asking about a range of items from easy to hard, and then extrapolate the rest. So first I needed to figure out what the curve looked like.

I went through a large sampling of the first 100,000 items in my list together with friends, marking items we knew in order to generate data I could model against. Then for each of us, I tried plotting knowledge levels (averaged out in ranges) against pageviews, expecting that knowledge might be proportional to an item’s popularity. Unfortunately, I couldn’t find any kind of formula that worked across-the-board for easy, medium and hard items. I tried adjustments to model the idea that someone might need a certain number of “exposures” to an item before knowing it, but that didn’t help either.

Finally I wondered if I was overcomplicating it. I’d noticed previously that my knowledge seemed to drop 90% every time the rank of an item went up an order of magnitude, regardless of the actual pageviews. I also remembered Zipf’s law, which modeled the proportion of words in texts merely according to their rank of frequency. So I tried ignoring pageviews and plotted knowledge levels against just an item’s rank, and found an accurate formula based on a simple logarithm. It turned out each person’s knowledge could be represented with just two parameters. First was knowledge breadth: even among the easiest items, what proportion do they know? (A cultural “jack-of-all-trades” will have high breadth; a “specialist” will have low breadth.) And second was knowledge depth: as items become more difficult, how quickly does their knowledge drop off? With these two parameters, I could describe the shape of anyone’s “knowledge curve”: broad but shallow, narrow but deep, or any combination. And since they accurately predicted the probability of knowing any cultural item, as long as I could estimate the two parameters, I could simply sum up the probabilities across my nearly 2,000,000 items to produce someone’s overall estimated knowledge… right?
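
The exact formula isn’t given here, so the following Python sketch uses a stand-in: it assumes the log-odds of knowing an item fall linearly with the logarithm of its rank. In this assumed form, `breadth` is the log-odds of knowing the very top item and `depth` is how fast the odds fall as rank grows; both the formula and the function names are illustrative, not the quiz’s actual model.

```python
import math

def knowledge_probability(rank, breadth, depth):
    """Assumed curve: log-odds of knowing an item are linear in log(rank)."""
    log_odds = breadth - depth * math.log(rank)
    # Clamp to keep exp() well-behaved for extreme parameter values.
    log_odds = max(-30.0, min(30.0, log_odds))
    return 1.0 / (1.0 + math.exp(-log_odds))

def estimated_knowledge(breadth, depth, cutoff):
    """Expected number of known items among ranks 1..cutoff."""
    return sum(knowledge_probability(rank, breadth, depth)
               for rank in range(1, cutoff + 1))
```

Whatever the real curve looks like, the structure is the same: two parameters give a probability for every rank, and the overall estimate is the sum of those probabilities up to some rank.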

Picking a cutoff

It wouldn’t be that easy, because of how long tails work. For example, suppose Amazon carries 1,000,000 books. One might assume that the top 50,000 would account for 99% of sales, since the remaining ones sell so infrequently. But because there are so many of the rest, those remaining 950,000 books might still account for a full 50% of sales. (These exact numbers are made up, but this is one of the many advantages Amazon has over brick-and-mortar shops.)

The same thing was happening with my own knowledge: I might know 3,000 items from ranks 1–10,000. But then I knew another 3,000 items from ranks 10,001–100,000, and another 3,000 items from ranks 100,001–1,000,000… and then I knew plenty more things that weren’t even in Wikipedia at all. When I calculated my knowledge of the top million items based on the parameters of breadth and depth, depth dominated completely, and not only that, but the final result was extremely sensitive to the tiniest changes in the depth parameter… far too sensitive for anything that could be measured in a five-minute quiz.

So I wouldn’t be able to measure “total” cultural knowledge after all — I’d have to pick a cutoff, so I experimented. Measuring just the top 1,000 items, it turned out that breadth was much more important than depth. On the other hand, out of 100,000 items depth turned out to be much more important than breadth. But if I estimated out of the top 10,000 items, they turned out to be roughly equally important. So by limiting to the top 10,000 cultural items, the quiz would reward people with breadth or depth (or both!), it would include items ranging from easy to hard, and it would produce a meaningfully accurate number at the end.

The concept was sound. Now, how could I determine someone’s breadth and depth?

Estimating parameters

Many people are familiar with linear regression, when you put a bunch of two-dimensional data into Excel (like prices over time) and ask it to fit a line to them:

A line is defined by two parameters (slope and offset), and my curve is defined by two parameters (breadth and depth), so I needed to do the same kind of thing. Only, instead of continuous values (like prices), I had binary data points of whether someone knew an item or not.

I did some research and discovered that with binary data, the equivalent technique is called binomial regression. It’s less known (Excel doesn’t have an option for it), but binomial regression calculates the probability of a given item being 0 or 1:

To perform binomial regression, the technique I’d need to use is called maximum likelihood estimation, or MLE. (It turns out the least squares approach commonly used in linear regression is mathematically equivalent to MLE, in that specific case.) The way MLE works is, suppose I have 100 items sampled from different ranks of difficulty, and a person marks each one “known” or “not known”. For any given breadth and depth, each item then has a certain probability of being known or not. We compare these probabilities against the actual marked values of known/unknown, and we can calculate the likelihood of the person having that given breadth and depth. We try lots of pairs of breadth and depth, and the pair with the highest likelihood in the end is the pair we assume describes the person’s actual knowledge.
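
In code, the likelihood of one candidate pair can be computed as a sum of log-probabilities (working in logs avoids multiplying many small numbers together). The sketch below again uses an assumed stand-in curve, with log-odds linear in log(rank), since the real formula isn’t stated; the function names are hypothetical.

```python
import math

def knowledge_probability(rank, breadth, depth):
    """Assumed stand-in curve: log-odds of knowing an item are linear in log(rank)."""
    log_odds = max(-30.0, min(30.0, breadth - depth * math.log(rank)))
    return 1.0 / (1.0 + math.exp(-log_odds))

def log_likelihood(responses, breadth, depth):
    """Log-likelihood of a list of (rank, known) answers for one parameter pair."""
    total = 0.0
    for rank, known in responses:
        p = knowledge_probability(rank, breadth, depth)
        # A "known" answer contributes log(p); a "not known" answer log(1 - p).
        total += math.log(p if known else 1.0 - p)
    return total
```

A pair of parameters that predicts high probabilities for the items marked "known" and low probabilities for the rest scores closer to zero; MLE simply looks for the pair with the highest score.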

Of course, this involves trying a lot of pairs. One way to figure out the most likely pair would be to brute-force it — to calculate the likelihood of, say, 1,000 different breadths and 1,000 different depths for a total of 1,000,000 combinations. But that would take way too long and use way too much CPU… It turns out that a better and faster way is to use hill climbing. For any user’s responses, we start with a “middle” pair of values for breadth and depth (the exact starting point doesn’t matter). Then we compare it with four new pairs—adding or subtracting a given amount of breadth or depth for each one. If any of these pairs of parameters turn out to make the data more likely than our original pair, we “move” there. Otherwise we stay in the same place, but halve our distances. And then we repeat:

After just 20 or 30 times, we can calculate someone’s breadth and depth with all the precision we need. And so finally, putting it all together, we turn sampled input data into an overall knowledge estimate:
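
The search described above can be sketched in a few lines of Python. As before, the probability curve is an assumed stand-in (log-odds linear in log(rank)), and the starting point, initial step size, and sample answers are made up for illustration.

```python
import math

def knowledge_probability(rank, breadth, depth):
    """Assumed stand-in curve: log-odds of knowing an item are linear in log(rank)."""
    log_odds = max(-30.0, min(30.0, breadth - depth * math.log(rank)))
    return 1.0 / (1.0 + math.exp(-log_odds))

def log_likelihood(responses, breadth, depth):
    return sum(math.log(p if known else 1.0 - p)
               for rank, known in responses
               for p in [knowledge_probability(rank, breadth, depth)])

def hill_climb(responses, breadth=0.0, depth=1.0, step=1.0, iterations=30):
    """Move to the best of four neighbors if it improves; otherwise halve the step."""
    best = log_likelihood(responses, breadth, depth)
    for _ in range(iterations):
        neighbors = [(breadth + step, depth), (breadth - step, depth),
                     (breadth, depth + step), (breadth, depth - step)]
        score, b, d = max((log_likelihood(responses, b, d), b, d)
                          for b, d in neighbors)
        if score > best:
            best, breadth, depth = score, b, d
        else:
            step /= 2.0
    return breadth, depth

def estimated_knowledge(breadth, depth, cutoff=10_000):
    """Expected number of known items among ranks 1..cutoff."""
    return sum(knowledge_probability(r, breadth, depth) for r in range(1, cutoff + 1))

# Made-up answers: (rank, known) pairs from an imaginary quiz.
responses = [(1, True), (5, True), (30, True), (150, True),
             (200, False), (1000, False), (3000, True), (9000, False)]
breadth, depth = hill_climb(responses)
estimate = estimated_knowledge(breadth, depth)
```

Each iteration evaluates only four candidate pairs, so a full estimate costs on the order of a hundred likelihood evaluations instead of a million.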

Maximizing accuracy

Now I knew how to calculate someone’s breadth and depth, and therefore knowledge, from their quiz answers… but how could I maximize accuracy? Sampling evenly across the top 10,000 items might be very accurate for some people, but for someone with less knowledge it would be far more accurate to focus only on easier items they were somewhat likely to know, while for someone with extensive knowledge it would make much more sense to focus more on harder items. But I wouldn’t know their knowledge until I’d already measured it!

So I adopted a technique called active learning. (The name of which can be a bit misleading in educational contexts, since it’s the computer which is learning, and not the user!) Active learning means breaking apart the testing into multiple steps, where additional steps use estimates from previous steps to determine which items will provide the most information in future steps.

I broke the quiz into four steps. The first is shortest (25 items), covering items from very easy to very hard, distributed logarithmically in rank. Then, I perform MLE to determine the most likely breadth and depth. Even though this will be fairly inaccurate, it’s enough to generate a second step (30 items) ranging from easy to hard for this specific person. I repeat the process for a third step (30 items again) to really get the range right, and finally show a fourth step (60 items) that are almost certainly in the right range, and provide the opportunity to estimate the parameters from the most mathematically meaningful items.
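
"Distributed logarithmically in rank" means the chosen ranks are evenly spaced on a log scale, so there are as many items between ranks 10 and 100 as between 1,000 and 10,000. A small Python sketch (the function name is hypothetical, and how the later steps choose their rank window is not shown):

```python
def log_spaced_ranks(lowest, highest, count):
    """Return `count` strictly increasing ranks spread evenly in log(rank).

    Assumes count >= 2 and that the range holds at least `count` ranks.
    """
    ranks = []
    for i in range(count):
        # Geometric interpolation between the lowest and highest rank.
        target = lowest * (highest / lowest) ** (i / (count - 1))
        # Rounding can repeat small ranks, so nudge past the previous one.
        minimum = ranks[-1] + 1 if ranks else lowest
        ranks.append(max(round(target), minimum))
    return ranks

first_step = log_spaced_ranks(1, 10_000, 25)
```

For the later steps, the same function could be called with a narrower window around the ranks where the current breadth and depth estimates predict a mix of known and unknown items.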

After all that, I use MLE across all 145 items to calculate the person’s overall knowledge out of the top 10,000 items, and it works! It provides accurate results no matter if they know 50, 500, or 5,000 items, and no matter if their knowledge is broad or deep or both or neither. All from 145 quiz items, and all because we know what shape the curve must have. The power of math…

Finishing touches

I had the ranked cultural items and I had the math to estimate knowledge. But when I put them together in a prototype, there were still some pieces missing.

What does it mean to “know” something?

Answering whether you know something can turn out to be surprisingly hard. Often, I’d come across a celebrity’s name and realize I had a vague idea… for example, I knew they were an actor but couldn’t remember in what. Or I’d see a movie and know it was part of a series of sequels, but not which specific one. Should these count? How much do you need to know to “know” something? Does one fact count? Three facts? What even is a “fact”?

After a lot of thinking, there was only one workable solution I could come up with. I decided that, for the purposes of the quiz, you “knew” an item if you could uniquely define it — in any way that made it different from all other items. So if I knew Ryan Reynolds was an actor but didn’t know anything else about him, that wasn’t enough because it didn’t make him unique — there are lots of actors out there. But if I could say he was the lead actor in Deadpool, then that made him unique. I struggled with whether recognizing someone by sight should count, and people I talked to had mixed opinions — but since someone’s face makes them uniquely identifiable, I felt this had to count too.

Additional item info (and NSFW)

There was another problem when taking the quiz: just seeing titles of items often led people to confuse them. Wikipedia titles are guaranteed to be unique, and use parentheticals to distinguish between items with identical names. But parentheticals often aren’t used on the most common one. So when you see “Anne Hathaway” (rank #269), you have no way of knowing whether that’s the actress or Shakespeare’s wife. (Turns out it’s the actress; Shakespeare’s wife is titled “Anne Hathaway (wife of Shakespeare),” rank #9,118.) And even beyond identical matches, people easily confuse similar-sounding names of things, so having extra identifying information about each item isn’t just a nice-to-have, but necessary. At the same time, you want to provide the extra information only when it’s needed, without overloading the user. After a lot of experimentation, I took a multi-pronged approach which wound up looking like this:

The different parts included:

- Icons and categories for creative works. This was the easiest — if the infobox said it’s a book, put “book” underneath the title and show a book icon next to it. (This way we know it’s not the movie adaptation or video game.)

- Occupation and icon for people. Under one Steve McQueen (the American one) we want “actor”, and under the other (the British one) we want “filmmaker”. Unfortunately, Wikipedia doesn’t provide any reliable way of extracting short and “intuitively correct” 1–2 word descriptions of people (which could be the subject of an article in its own right). The best solution I found was to create a whitelist of hundreds of common occupations, and assume that the first one found in the first sentence of the article applies. It took quite a few tweaks and a little bit of hand-tuning to get right, but eventually turned out to be surprisingly robust.

- Country and year for creative works. There are so many movies and books with the same name (or similar names) that additional disambiguation was needed here as well. Fortunately, country and year can be extracted from infobox fields with relative ease, so a user can distinguish between Twin Peaks (USA 1990–1991) and Twin Peaks (USA 2017), or between Oldboy (South Korea 2003) and Oldboy (USA 2013).

- Blurb. But sometimes all that’s still not enough (“Is she the actress I’m thinking of?”), so I wanted the first couple sentences of the article to pop up next to the item when someone needs extra confirmation. This required writing code to strip out Wikitext formatting like bold, italics, references, citations, and a whole lot more, to finally end up with just pure text. Then, it still wasn’t ideal for users because the blurb would often start with a long name, title, pronunciation information, and so on… But if I just took the text starting after “is/was/are/were” followed by “a/an/the,” it worked perfectly. So simple!

- Images. Finally, sometimes it’s easiest to recognize someone by sight, and it’s very common to have a main image inside the infobox. So I grabbed each image’s filename as well, but it turns out that’s not enough to be able to download it. Instead, you need to make a separate call to the MediaWiki API for each filename to retrieve a URL to the specific server that image is hosted on, and then download that. But I cache a reduced-size version of the image on my server, and display that next to the blurb.
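
Two of the text heuristics from the list above, the occupation whitelist and the blurb trimming, can be sketched in Python as follows. Both functions assume the Wikitext has already been stripped down to plain text; the whitelist shown is a tiny hypothetical sample, and the function names and the simple sentence split are assumptions.

```python
import re

# Tiny hypothetical sample of the whitelist; the real one had hundreds of entries.
OCCUPATIONS = ["actor", "actress", "filmmaker", "novelist", "physicist", "singer"]

def guess_occupation(plain_text):
    """Return the whitelisted occupation that appears first in the first sentence."""
    first_sentence = plain_text.split(". ")[0].lower()
    hits = []
    for occupation in OCCUPATIONS:
        match = re.search(r"\b" + re.escape(occupation) + r"\b", first_sentence)
        if match:
            hits.append((match.start(), occupation))
    return min(hits)[1] if hits else None

# Text after "is/was/are/were" when that verb is followed by "a/an/the".
BLURB_START = re.compile(r"\b(?:is|was|are|were)\s+((?:a|an|the)\b.*)",
                         re.IGNORECASE | re.DOTALL)

def trim_blurb(plain_text):
    """Skip the name, dates, and pronunciation that usually open an article."""
    match = BLURB_START.search(plain_text)
    return match.group(1) if match else plain_text
```

The sketch keeps the leading "a/an/the" in the blurb, which is one reading of the rule described above; dropping the article instead would be a one-line change to the capture group.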

Putting it all together, it’s easy to confirm whether you know something or not. Plus, it has the side effect of teaching you about a new, random cross-section of cultural knowledge each time you take the quiz.

There was just one final problem: occasionally items would pop up that were definitely NSFW, or just made you feel icky when reading the description. To make the quiz more family-friendly, I filtered out anything related to adult entertainment (quite a few porn stars in the top 10,000), as well as contemporary people notable principally for violent crime (whether as perpetrators or victims). There are just… some things you’d rather not read about while eating lunch. But I also didn’t want to whitewash history, so historical and politically-motivated violence (e.g. terrorism) stayed in.

Percentiles

Finally, it’s all well and good to get a score of 2,000 or 3,000 or 5,000 out of the top 10,000… but the number doesn’t mean much without being able to compare with others. So I wanted to include a percentile as well, e.g. saying that you knew more than 20% or 50% or 75% of the population:

The only problem was that people who take Internet knowledge quizzes skew significantly more knowledgeable than the general population. For example, when I asked Test Your Vocab test-takers to optionally provide their verbal SAT scores and compared it with published SAT score distributions, it turned out my average American test-taker was in roughly the 98th percentile of SAT scores — so very skewed.

I wanted to normalize my percentiles to the general American population, so I added optional survey questions on age, gender, and educational level, using categories aligning with US census data. Then, to calculate percentiles, I weighted each combination of the three categories according to actual population data, which normalizes the percentiles. For example, it doesn’t matter if among 25–29 year olds, I get 10 times as many participants with bachelor’s degrees as those with high school degrees. Census data reveals both segments are roughly the same size, so by normalizing I proportionally underweight the first and overweight the second. The end result isn’t perfect: it doesn’t account for other factors beyond the three, and results are still coming only from people who choose to take a knowledge quiz for fun, which likely has its own skew. But it should still be a major improvement over percentiles without any normalization at all.
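
The reweighting can be illustrated with a short Python sketch. The function name, the group labels, and the scores are all made up; the idea is only that each response is weighted by its group’s share of the population divided by the number of responses received from that group.

```python
def weighted_percentile(score, responses, population_share):
    """Percent of the (reweighted) population scoring below `score`.

    responses is a list of (score, group) pairs, and population_share maps
    each group to its share of the real population (e.g. from census data).
    """
    group_counts = {}
    for _, group in responses:
        group_counts[group] = group_counts.get(group, 0) + 1

    below = total = 0.0
    for other_score, group in responses:
        # Over-represented groups get a smaller weight per response.
        weight = population_share[group] / group_counts[group]
        total += weight
        if other_score < score:
            below += weight
    return 100.0 * below / total

# Made-up data: ten responses from one group, one from another, equal census shares.
responses = [(5000, "bachelor")] * 10 + [(2000, "high school")]
shares = {"bachelor": 0.5, "high school": 0.5}
percentile = weighted_percentile(3000, responses, shares)
```

Without weighting, a score of 3,000 in this made-up sample would beat only 1 response in 11; with weighting, it beats half of the reweighted population, because the single high school response stands in for half the population.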

The final result

I built a website to host the quiz, and made it responsive too — Test Your Vocab’s mobile traffic had started regularly beating its desktop traffic in December 2016, so I was firmly in a mobile-first world now. I put together a photo-collage to give the home page some personality, hosted it in the cloud, and launched Test Your Culture last month (September 2018):

It’s already been taken by thousands of people, but in order to produce accurate and detailed research results from it, it will require millions of responses — just like Test Your Vocab.

And similarly to the series of blog posts I produced for Test Your Vocab, I want to answer questions like:

- How does cultural knowledge increase with age? Are people still learning “new culture” at age 50 as much as at age 20? How much variation is there within an age band?

- What are the strongest patterns within cultural knowledge, i.e. what types of items are most correlated? To what degree do they follow generations, cluster around topics of interest, or are associated with education, geography, or something else? (Fun with principal component analysis!)

- What additional factors are most strongly associated with greater or less cultural knowledge? (For example, asking additional survey questions around self-reported consumption of TV, news, music, and books.)

And just like Test Your Vocab, I’ll make the (anonymous) dataset available to researchers who want to run their own analyses.

What’s next?

It’s been a blast learning how to put together Test Your Culture, but there’s still more on Wikipedia I haven’t tested yet, such as places (countries, cities, tourist spots, etc.) — which could make for excellent future quizzes! If you’d like to be notified of new quizzes coming down the pipeline, along with research results, you can follow @testyourculture on Twitter.

And if you haven’t yet… 📊 take Test Your Culture!