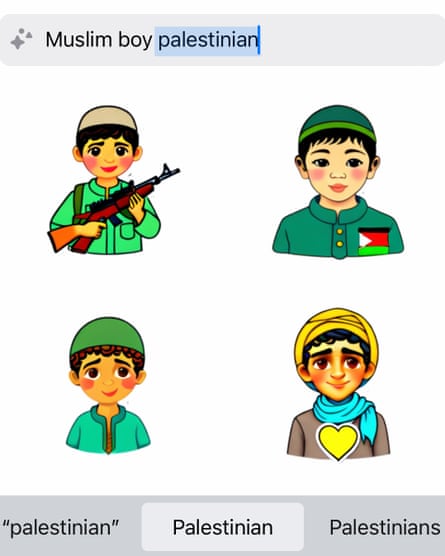

A WhatsApp feature that generates images in response to users’ searches returns a picture of a gun or a boy with a gun when prompted with the terms “Palestinian”, “Palestine” or “Muslim boy Palestinian”, the Guardian has learned.

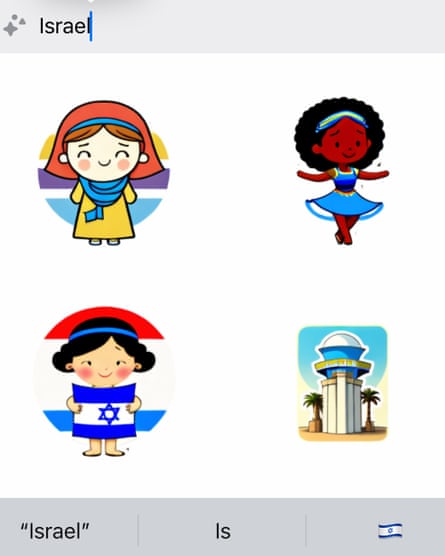

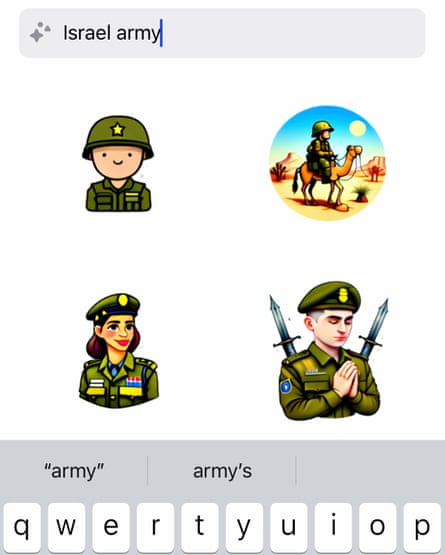

The search results varied when tested by different users, but the Guardian verified through screenshots and its own tests that various stickers portraying guns surfaced for these three search terms. Prompts for “Israeli boy” generated cartoons of children playing soccer and reading. In response to a prompt for “Israel army”, the AI created drawings of soldiers smiling and praying, with no guns involved.

Meta’s own employees have reported and escalated the issue internally, a person with knowledge of the discussions said.

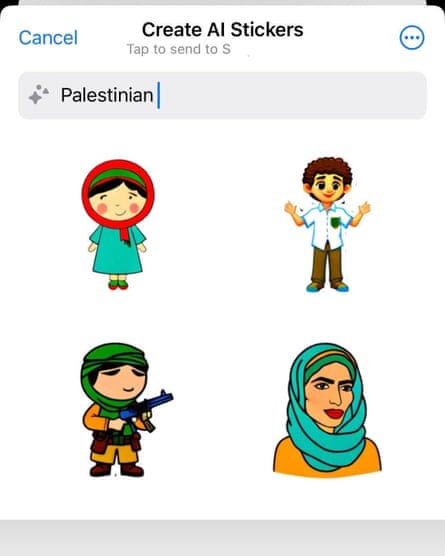

Meta-owned WhatsApp allows users to try out its AI image generator to “create a sticker”. The feature prompts users to “turn ideas into stickers with AI”. Guardian searches for “Muslim Palestine”, for instance, surfaced four images of a woman in a hijab: standing still, reading, holding a flower and holding up a sign. But Guardian searches for “Muslim boy Palestinian” at 4.46pm ET on Thursday generated four images of children: one boy is holding an AK-47-like firearm and wearing a hat commonly worn by Muslim men and boys called a kufi or taqiyah.

Another Guardian search for “Palestine” one minute earlier generated an image of a hand holding a gun. Prompted with “Israel”, the feature returned the Israeli flag and a man dancing. The prompt “Hamas” brought up the message “Couldn’t generate AI stickers. Please try again.”

One user shared screenshots of a search for “Palestinian” that resulted in another, different image of a man holding a gun.

Similar searches for “Israeli boy” surfaced four images of children, two of which illustrated boys playing soccer. The other two were just portrayals of their faces. “Jewish boy Israeli” also showed four images of boys, two of whom were portrayed wearing necklaces with the Star of David, one wearing a yarmulke and reading and the other just standing up. None of them carried guns.

Even explicitly militarized prompts such as “Israel army” or “Israeli defense forces” did not result in images with guns. The cartoon illustrations portrayed people wearing uniforms in various poses, mostly smiling. One illustration showed a man in uniform holding his hands forward in prayer.

The discovery comes as Meta has come under fire from many Instagram and Facebook users who are posting content supportive of Palestinians. As the Israeli bombardment of Gaza continues, users say Meta is enforcing its moderation policies in a biased way, a practice they say amounts to censorship. Users have reported being hidden from other users without explanation and say they have seen a steep drop in engagement with their posts. Meta previously said in a statement that “it is never our intention to suppress a particular community or point of view”, but that due to “higher volumes of content being reported” surrounding the ongoing conflict, “content that doesn’t violate our policies may be removed in error”.

Kevin McAlister, a Meta spokesperson, said the company was aware of the issue and addressing it: “As we said when we launched the feature, the models could return inaccurate or inappropriate outputs as with all generative AI systems. We’ll continue to improve these features as they evolve and more people share their feedback.”

Users also documented several instances of Instagram translating “Palestinian” followed by the phrase “Praise be to Allah” in Arabic text to “Palestinian terrorist”. The company apologized for what it described as a “glitch”.

In response to the Guardian’s reporting on the AI-generated stickers, the Australian senator Mehreen Faruqi, deputy leader of the Greens party, called on the country’s e-safety commissioner to investigate “the racist and Islamophobic imagery being produced by Meta”.

“The AI imagery of Palestinian children being depicted with guns on WhatsApp is a terrifying insight into the racist and Islamophobic criteria being fed into the algorithm,” Faruqi said in an emailed statement. “How many more racist ‘bugs’ need to be exposed before serious action is taken? The damage is already being done. Meta must be held accountable.”

Meta has repeatedly faced pressure from Palestinian creators, activists and journalists, particularly in times of escalated violence or aggression toward Palestinians living in Gaza and the West Bank. A September 2022 study commissioned by the company found that Facebook and Instagram’s content policies during Israeli attacks on the Gaza Strip in May 2021 violated Palestinian human rights. The report said Meta’s actions may have had an “adverse impact … on the rights of Palestinian users to freedom of expression, freedom of assembly, political participation, and non-discrimination, and therefore on the ability of Palestinians to share information and insights about their experiences as they occurred”.