Abstract

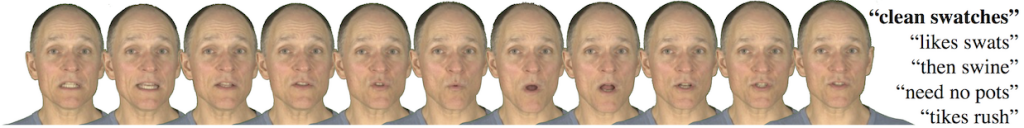

This paper introduces a method for automatic redubbing of video that exploits the many-to-many mapping of phoneme sequences to lip movements modeled as dynamic visemes [1]. For a given utterance, the corresponding dynamic viseme sequence is sampled to construct a graph of possible phoneme sequences that synchronize with the video. When composed with a pronunciation dictionary and language model, this produces a vast number of word sequences that are in sync with the original video, literally putting plausible words into the mouth of the speaker. We demonstrate that traditional, many-to-one, static visemes lack flexibility for this application as they produce significantly fewer word sequences. This work explores the natural ambiguity in visual speech and offers insight into automatic speech recognition and the importance of language modeling.
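The pipeline described above (viseme sequence, then candidate phoneme sequences, then word sequences via a pronunciation dictionary) can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the viseme labels `V1` and `V2`, the phoneme candidates, the nine-word dictionary, and the function names are hypothetical and are not taken from the paper. Brute-force enumeration stands in for the graph composition, and the language model step is omitted.

```python
from itertools import product

# Hypothetical toy data: each dynamic viseme label maps to several phoneme
# strings that could have produced it (a many-to-many style mapping).
VISEME_TO_PHONEMES = {
    "V1": [("b",), ("p",), ("m",)],
    "V2": [("ae", "t"), ("ae", "d"), ("ae", "n")],
}

# Hypothetical pronunciation dictionary: phoneme tuple -> list of words.
PRONUNCIATIONS = {
    ("b", "ae", "t"): ["bat"],
    ("p", "ae", "t"): ["pat"],
    ("m", "ae", "t"): ["mat"],
    ("b", "ae", "d"): ["bad"],
    ("p", "ae", "d"): ["pad"],
    ("m", "ae", "d"): ["mad"],
    ("b", "ae", "n"): ["ban"],
    ("p", "ae", "n"): ["pan"],
    ("m", "ae", "n"): ["man"],
}


def phoneme_paths(visemes):
    """Enumerate every phoneme sequence consistent with the viseme sequence."""
    options = [VISEME_TO_PHONEMES[v] for v in visemes]
    for choice in product(*options):
        # Flatten the per-viseme phoneme chunks into one sequence.
        yield tuple(p for chunk in choice for p in chunk)


def segment(phonemes):
    """Yield every split of a phoneme sequence into dictionary words."""
    if not phonemes:
        yield []
        return
    for end in range(1, len(phonemes) + 1):
        for word in PRONUNCIATIONS.get(phonemes[:end], []):
            for rest in segment(phonemes[end:]):
                yield [word] + rest


def word_sequences(visemes):
    """Collect all word sequences whose phonemes fit the viseme sequence."""
    results = set()
    for path in phoneme_paths(visemes):
        for words in segment(path):
            results.add(" ".join(words))
    return sorted(results)


one_word = word_sequences(["V1", "V2"])
two_words = word_sequences(["V1", "V2", "V1", "V2"])
print(len(one_word), len(two_words))
```

Even in this tiny example the number of candidate word sequences grows multiplicatively with utterance length, which is the ambiguity the method exploits. A many-to-one static viseme mapping would correspond to giving each viseme a single fixed phoneme class with no alternative phoneme groupings, leaving fewer paths to choose from.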

Copyright Notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author’s copyright. These works may not be reposted without the explicit permission of the copyright holder.